Machine Generated Data

Tags

Color Analysis

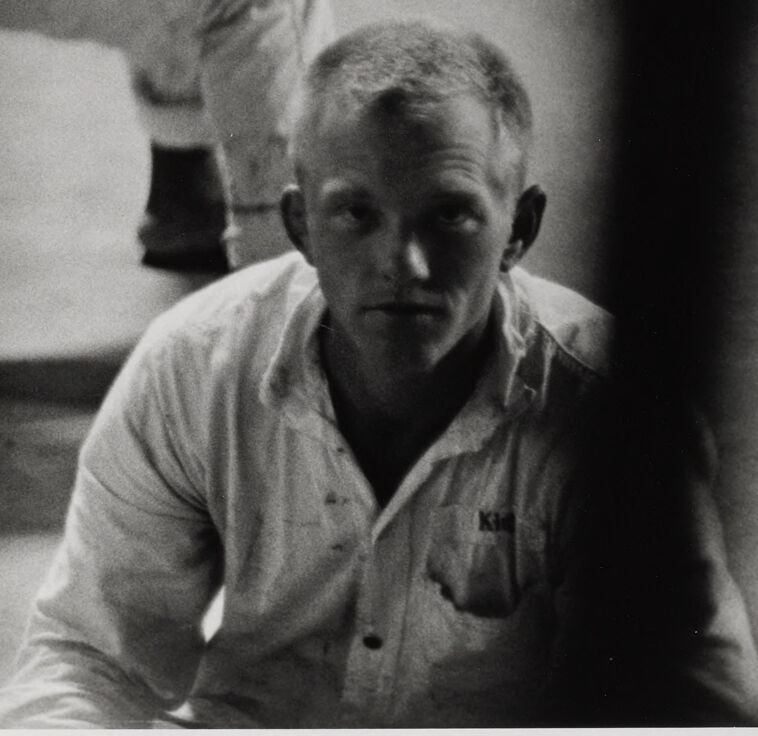

Face analysis

AWS Rekognition

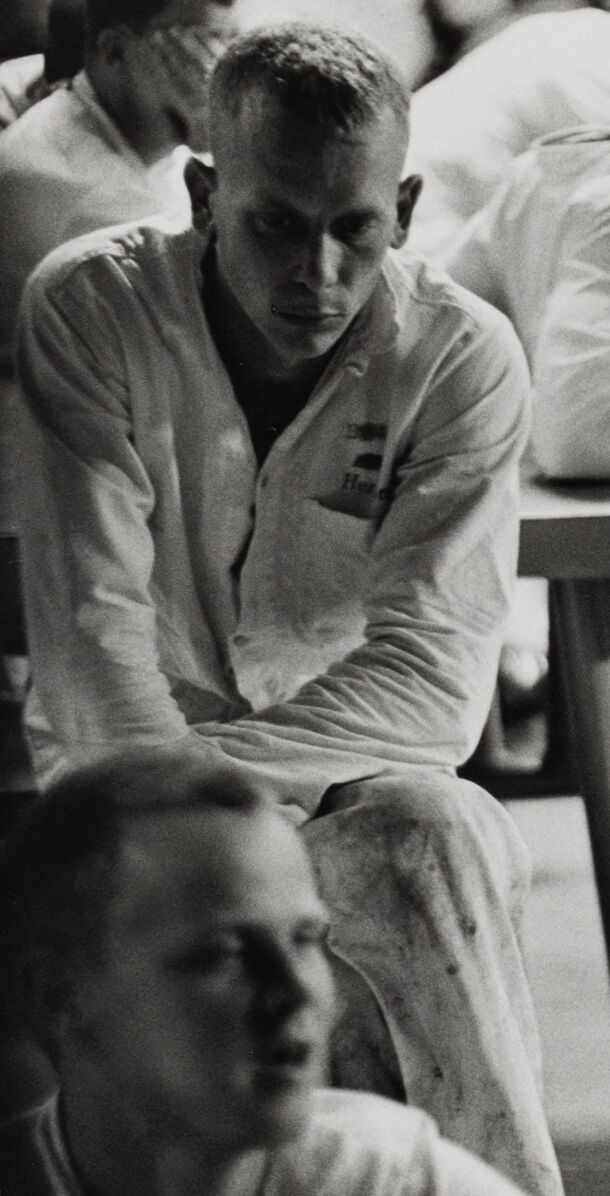

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 99.9% |
| Angry | 5.7% |
| Sad | 14.7% |
| Happy | 1.3% |
| Surprised | 2.3% |
| Confused | 10% |
| Disgusted | 1.7% |
| Calm | 64.4% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 99.6% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 71.2% |
| people portraits | 19.8% |
| pets animals | 8.3% |
| food drinks | 0.3% |
| nature landscape | 0.3% |
| streetview architecture | 0.1% |
| text visuals | 0.1% |

Captions

Microsoft

Created on 2018-02-09

| Caption | Confidence |
| --- | --- |
| a group of people sitting in front of a crowd | 86% |
| a group of people sitting in front of a crowd of people | 83.1% |
| a group of people in front of a crowd | 83% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

The image is a black-and-white photograph showing a group of people dressed in similar, light-colored clothing. They are seated and standing in a spacious area that appears to be indoors, possibly a communal or institutional setting. The individuals are positioned around tables and benches, some sitting alone while others appear to be interacting in small groups. The atmosphere of the scene could be interpreted as solemn or contemplative.

Created by gpt-4 on 2024-11-28

The image shows a black and white photograph of several individuals seated in an enclosed space filled with rows of benches or tables. The individuals appear to be dressed in uniform attire, predominantly white, suggestive of a group environment such as a canteen, institution, or organized assembly. Some individuals are seated facing the camera while others are facing away, engaged in various activities or interactions. The attire and the setting might indicate a controlled environment, such as a correctional facility, military base, or similar institution. The focus in the photograph varies, with the foreground being less in focus compared to the central area, which draws attention to the center of the image. The lighting is subdued, with natural light seemingly coming from the right side, creating shadows and adding to the atmospheric quality of the scene.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This is a black and white photograph that appears to be from a historical context. The image shows several people in what looks like an institutional or prison setting, wearing white uniforms or clothing. The subjects are seated on what appear to be benches or chairs in a room with other people visible in the background. The lighting and composition create a somber mood, with strong contrast between light and shadow. The photograph has a documentary-style quality typical of mid-20th century photojournalism.

Created by claude-3-haiku-20240307 on 2024-11-27

The image appears to be a black and white photograph of a crowded room, possibly in a hospital or medical facility. The room is filled with people sitting in various positions, some appearing to be patients or staff. The focal point of the image is a man sitting in the foreground, who appears to be deep in thought or contemplation. The background is blurred, creating a sense of depth and focus on the central figure. The overall mood of the image is somber and introspective.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

This image is a black-and-white photograph of a group of men in a prison or detention setting. The men are dressed in white shirts and pants, with some sitting on benches and others on the floor. The atmosphere appears to be one of confinement and possibly despair.

In the foreground, two men are prominently featured, one sitting on the floor and the other on a bench. Behind them, several other men are visible, some with their heads down or looking away. The background of the image is blurry, but it appears to show more men in the same attire, suggesting a large group or community.

The overall mood of the image is somber and reflective, with the men's expressions and body language conveying a sense of resignation or hopelessness. The use of black and white photography adds to the starkness and simplicity of the scene, emphasizing the harsh realities of life in a prison or detention center.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-26

The image depicts a black-and-white photograph of a group of men in white uniforms sitting on benches or on the floor. The men are dressed in white shirts and pants, with some wearing shoes and others barefoot. They appear to be in a large room, possibly a barracks or a prison, with a high ceiling and a concrete floor.

The men are seated on wooden benches or on the floor, with some leaning against the benches or each other. They seem to be in a state of relaxation, with some looking down or away from the camera, while others gaze directly at it. The atmosphere appears to be one of calmness and tranquility, with the men seemingly engaged in quiet conversation or simply enjoying each other's company.

In the background, there are more men in white uniforms, although they are out of focus and appear to be in the distance. The overall mood of the image is one of serenity and camaraderie, suggesting that the men are part of a community or group that values peace and harmony.