Machine Generated Data

Tags

Amazon

created on 2021-12-15

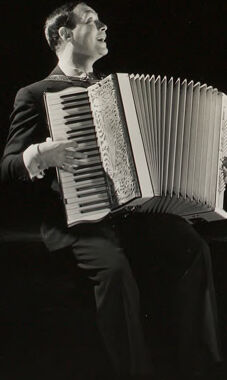

| Person | 98.8 |
| Human | 98.8 |
| Person | 95.7 |
| Accordion | 88.1 |
| Musical Instrument | 88.1 |

Clarifai

created on 2023-10-15

Imagga

created on 2021-12-15

| accordion | 100 |
| keyboard instrument | 100 |
| wind instrument | 100 |
| musical instrument | 100 |
| concertina | 36.8 |
| free-reed instrument | 29.5 |
| man | 26.2 |
| person | 22.4 |
| male | 21.3 |
| adult | 20.7 |
| people | 16.7 |
| black | 14.4 |
| studio | 13.7 |
| business | 13.4 |
| model | 13.2 |
| holding | 13.2 |
| portrait | 12.9 |
| face | 12.8 |
| attractive | 12.6 |
| hair | 11.9 |
| music | 11.7 |
| fashion | 11.3 |
| hand | 10.6 |
| businessman | 10.6 |
| lady | 10.5 |
| musical | 10.5 |
| clothing | 9.8 |
| pretty | 9.8 |
| human | 9.7 |
| color | 9.4 |
| men | 9.4 |
| smiling | 9.4 |
| happy | 9.4 |
| instrument | 9.3 |
| one | 9 |
| musician | 8.8 |
| women | 8.7 |
| play | 8.6 |
| expression | 8.5 |
| old | 8.4 |
| retro | 8.2 |
| style | 8.2 |
| sexy | 8 |
| lifestyle | 7.9 |
| happiness | 7.8 |
| art | 7.8 |
| band | 7.8 |
| money | 7.7 |
| costume | 7.6 |
| casual | 7.6 |
| dark | 7.5 |
| sound | 7.5 |
| entertainment | 7.4 |
| light | 7.3 |
| 20s | 7.3 |
| make | 7.3 |
| success | 7.2 |
| suit | 7.2 |
| handsome | 7.1 |
| device | 7.1 |
| paper | 7.1 |
| pipe | 7 |

Google

created on 2021-12-15

| Free reed aerophone | 95.5 |
| Accordionist | 95.3 |
| Squeezebox | 92 |
| Black | 89.6 |
| Rectangle | 87.8 |
| Accordion | 85.4 |
| Folk instrument | 84.2 |
| Style | 83.8 |
| Black-and-white | 83.6 |
| Musical instrument | 83.5 |
| Font | 83.5 |
| Tints and shades | 77.1 |
| Technology | 76.4 |
| Electronic device | 75.8 |
| Musician | 75.6 |
| Beauty | 75.2 |
| Entertainment | 74.9 |
| Music | 71.6 |
| Monochrome | 69.6 |
| Monochrome photography | 69.5 |

Microsoft

created on 2021-12-15

| text | 99.2 |
| electronics | 77.5 |
| black and white | 72.3 |
| computer | 43.7 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Age | 7-17 |
| Gender | Male, 93.2% |
| Calm | 90.7% |
| Sad | 4.1% |
| Surprised | 2.4% |
| Confused | 0.9% |
| Angry | 0.7% |
| Fear | 0.5% |
| Happy | 0.5% |
| Disgusted | 0.3% |

AWS Rekognition

| Age | 32-48 |
| Gender | Male, 99.4% |
| Sad | 49.1% |
| Confused | 17.1% |
| Calm | 14.6% |
| Fear | 8.7% |
| Surprised | 3.7% |
| Angry | 3% |
| Disgusted | 2.7% |
| Happy | 1.1% |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| food drinks | 54.1% |
| events parties | 35.4% |
| interior objects | 3.7% |
| text visuals | 2.5% |
| people portraits | 2% |
| paintings art | 1.8% |

Captions

Microsoft

created on 2021-12-15

| a person standing in front of a computer screen | 72.2% |
| a person standing in front of a screen | 72.1% |
| a person standing in front of a television | 65.1% |

Text analysis

Amazon

1075-2

7075.2 U.F.

7075.2 U.F

1075-2

7075.2

U.F

1075-2