Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 26-43 |
| Gender | Female, 50.3% |
| Happy | 49.7% |
| Angry | 49.6% |
| Surprised | 49.5% |
| Calm | 50.1% |
| Disgusted | 49.6% |
| Sad | 49.6% |
| Confused | 49.5% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 99.7% |

Categories

Imagga

| Category | Confidence |
|---|---|
| nature landscape | 85.8% |
| beaches seaside | 8.8% |
| cars vehicles | 2.6% |
| streetview architecture | 1.4% |
| paintings art | 0.7% |
| pets animals | 0.5% |
| sunrises sunsets | 0.1% |
| food drinks | 0.1% |

Captions

Microsoft

Created on 2018-02-09

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 88.9% |
| a black and white photo of a group of people posing for the camera | 84.4% |
| a group of people posing for the camera | 84.3% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

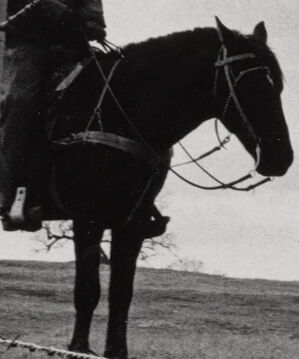

The image shows a group of individuals dressed in white uniforms working outdoors in a field. They appear to be performing manual labor, possibly digging or clearing an area. There are two men on horseback overseeing the group, and another group of similarly dressed individuals can be seen in the background, also partaking in the same activity under supervision. Barbed wire is visible in the foreground, suggesting the area might be heavily secured or restricted. The landscape is open and grassy, and the sky is cloudy.

Created by gpt-4 on 2024-11-28

This black and white photograph shows a group of individuals in white overalls engaged in an activity on an open field. The individuals in the foreground are lined up along a piece of fabric or tarp that is extended across the ground, and they appear to be pulling on it together. A barbed-wire fence divides the foreground from the background, indicating some form of boundary or demarcation within the scene. In the background, several individuals are positioned farther away, some on noticeably higher ground. They seem to wear the same attire as those in the foreground, suggesting they are part of the same group or activity. The background field has two noticeable lines of people, one closer to the foreground and another farther away; both groups seem engaged in similar activities involving the fabric or tarp. The sky is overcast, and the terrain appears open and rural; the absence of trees or buildings gives the landscape a stark, unadorned look. The lack of identifiable modern elements gives the image a timeless quality, so it could belong to various historical contexts. The image conveys a sense of coordinated effort and has a documentary or reportage feel.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This is a powerful black and white photograph that appears to be from a prison work detail or chain gang. The image shows prisoners dressed in white uniforms working on what appears to be agricultural or road work. They are being supervised by guards on horseback. There is barbed wire visible in the foreground, and the scene takes place on what looks like farmland or an open field. The composition of the image creates a stark contrast between the grouped prisoners in white and the mounted guards, highlighting the power dynamics at play. This type of photograph likely dates from the mid-20th century American South, where prison labor was commonly used for agricultural and infrastructure work.

Created by claude-3-haiku-20240307 on 2024-11-27

The image appears to depict a scene from a historical event or setting. In the foreground, a group of people in white clothing, some seated and others standing, is gathered along a dirt path or field. A person on horseback can be seen in the distance, and farther back there are more people, some walking and others appearing to be in formation. The overall scene has a somber, contemplative atmosphere, and the sky appears overcast. The image is in black and white, capturing a sense of historical documentation.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-23

The image depicts a black-and-white photograph of a group of men in white uniforms, possibly prisoners, working together to pull a rope or cable. They are standing on a dirt hill, with some men holding tools such as shovels. In the background, there are several men on horseback, possibly guards or overseers, watching over the workers. The scene appears to be set in a rural or agricultural area, with a barbed wire fence visible in the foreground. The overall atmosphere of the image suggests a sense of hard labor and supervision.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-25

The image depicts a black-and-white photograph of a group of African American prisoners in white uniforms, engaged in manual labor on a farm or plantation. The prisoners are gathered near a barbed-wire fence, with some standing and others kneeling or sitting on the ground. They appear to be working in a field, possibly planting or harvesting crops. In the background, several mounted police officers watch over the prisoners. The officers are dressed in uniforms and appear to be armed with rifles or other weapons. The sky above is cloudy and overcast, suggesting a gloomy or oppressive atmosphere. Overall, the image conveys a sense of hardship, labor, and surveillance, highlighting the harsh conditions faced by African American prisoners during this time period.