Machine Generated Data

Face analysis

AWS Rekognition

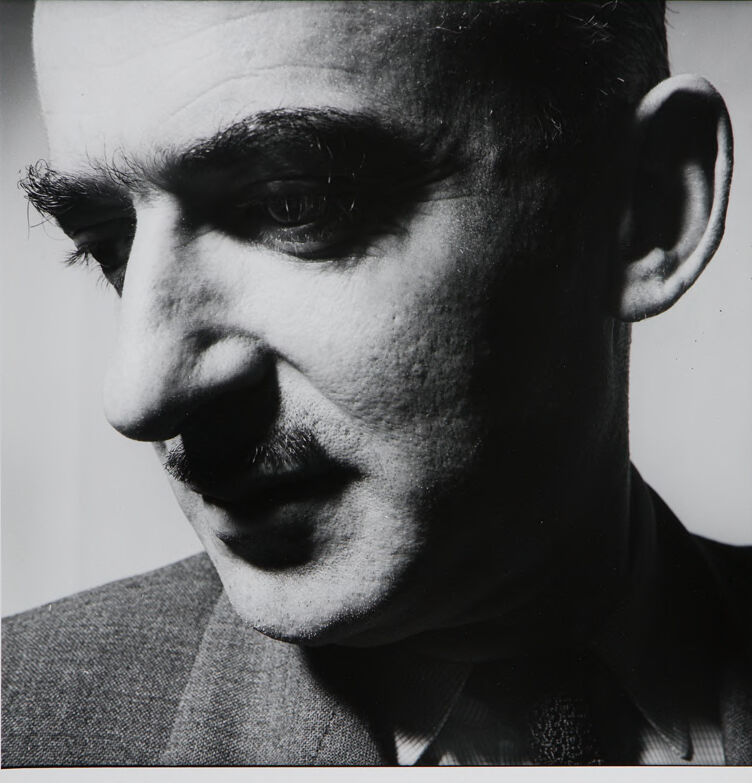

| Attribute | Value |
|---|---|
| Age | 36-44 |
| Gender | Male, 97.9% |
| Calm | 88.2% |
| Sad | 5.5% |
| Angry | 3.2% |
| Disgusted | 1.1% |
| Confused | 0.7% |
| Happy | 0.5% |
| Surprised | 0.5% |
| Fear | 0.2% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 95.5% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
|---|---|
| pets animals | 100% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
|---|---|
| a close up of a man with a beard looking at the camera | 66% |
| a close up of a man | 65.9% |
| a man looking at the camera | 65.8% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

portrait of the artist's son.

Salesforce

Created by general-english-image-caption-blip on 2025-05-19

a photograph of a man with a mustache and a mustache on his face

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-08

The image shows a black-and-white photograph with the visible portion including an ear, part of a suit jacket with a textured fabric, and a tie. The overall composition suggests a formal attire. The photo is bordered by a white frame, giving it a vintage Polaroid-like appearance.

Created by gpt-4o-2024-08-06 on 2025-06-08

The image depicts a black-and-white photo framed by a white border, resembling a Polaroid picture. The visible sections show a person's right ear and part of the right side of their neck. The individual is wearing a suit or blazer, as indicated by the fabric and the collar shown in the lower part of the image. The photo suggests a formal or professional attire.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-13

The image appears to be a black and white portrait photograph of a man's face. The subject has a serious expression, with intense eyes and a strong jawline. The lighting creates dramatic shadows and highlights the subject's features. The image has a moody, contemplative feel to it.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-13

The black and white image shows a close-up portrait of a man. He has an intense, brooding expression on his face, with furrowed brows and piercing eyes that gaze off to the side. The man has a mustache and appears to be wearing a suit jacket or coat. The lighting creates strong shadows and highlights, lending a dramatic, film noir-like quality to the portrait. The tight cropping and angled perspective add to the striking, enigmatic mood of the photograph.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-13

This is a striking black and white portrait photograph that focuses on a partial side view of a person's face. The image has strong contrast and dramatic lighting that creates deep shadows and highlights. The composition emphasizes the subject's prominent features - a strong nose, defined eyebrow, and visible ear. They appear to be wearing what looks like a textured wool or tweed jacket. The photograph has an artistic, almost film noir quality to it, with careful attention paid to angles and shadows. The close-up, cropped perspective and the stark lighting create an intimate yet somewhat mysterious mood.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-24

This image is a black-and-white photograph of a man's face, with the right side of his face in shadow. The man has dark hair and a mustache, and he is wearing a suit jacket and tie. He is looking to the left of the camera, and his expression is serious. The background of the image is white, and there is a thin border around the edge of the photo. The overall effect of the image is one of sophistication and elegance, and it appears to be a formal portrait of the man.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-24

The image is a black and white photograph of a man's face, captured in a close-up shot. The man is positioned on the left side of the frame, with his head turned slightly to the right. He has dark hair and a mustache, and he is wearing a suit jacket over a collared shirt. The background of the image is a plain, light-colored wall.

The photograph is presented in a Polaroid-style frame, with a white border around the edges. The overall effect is one of simplicity and elegance, with the focus squarely on the man's face. The image appears to be a professional portrait, possibly taken for a business or formal occasion.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-24

The image is a black-and-white portrait of a man. The man is wearing a suit and tie. The man's face is the main focus of the image. The man's face is slightly tilted, and his eyes are looking to the left. His eyebrows are arched, and his mouth is slightly open. The man's hair is short and black. The man's skin is smooth, and he has a mole on his cheek.

Created by amazon.nova-lite-v1:0 on 2025-05-24

The image is a black-and-white photograph of a man's face. The man is wearing a suit and tie, and his hair is neatly styled. He has a mustache and a goatee, and his eyes are slightly closed. The image is taken from a close-up angle, and the man's face takes up most of the frame. The lighting is dramatic, with the man's face illuminated by a bright light source from the left side, casting shadows on his face. The image has a vintage or classic feel, and the man's expression is serious or contemplative.

Google Gemini

Created by gemini-2.0-flash on 2025-04-29

The image is a black and white portrait of a middle-aged man, presented within a white frame that adds a modern touch to the vintage feel of the photograph. The man is captured in a three-quarter profile, looking downward, which casts a shadow over parts of his face.

He has a prominent nose and a neatly trimmed mustache. His eyebrows are thick and well-defined, lending a serious and contemplative expression to his face. The light catches the texture of his skin, revealing slight imperfections.

He is wearing a suit jacket and a collared shirt with a patterned tie. The dark tones of the suit contrast with the lighter shade of the shirt, creating depth and dimension in the portrait. The background is a plain, neutral tone, drawing the viewer's attention solely to the subject. The overall composition emphasizes the man's features and evokes a sense of introspection.

Created by gemini-2.0-flash-lite on 2025-04-29

Here's a description of the image:

The image is a black and white portrait of a man. The photo is a close-up, focusing on the man's face and the top of his shoulders. He has dark hair, a prominent nose, a mustache, and bushy eyebrows. His gaze is directed downwards, giving the impression of introspection or contemplation. The man is wearing a suit jacket and what appears to be a collared shirt and a tie. The lighting creates strong shadows, highlighting the texture of his skin and the shape of his face. The photograph has a square format and is framed in a white border, possibly a print or a Polaroid.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-25

The image is a black-and-white portrait of a man. The photograph is tightly framed, focusing on his face and upper torso. The man appears to be middle-aged or older, with a serious and intense expression. He has short, dark hair and a prominent mustache. He is wearing a suit jacket and a tie, suggesting a formal or professional setting. The lighting is dramatic, with shadows and highlights emphasizing the contours of his face, particularly his eyes and the lines around his mouth. The background is plain, keeping the focus entirely on the subject. The overall tone of the image is somber and contemplative.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-24

The image is a black and white portrait of a man taken in a close-up view. The lighting highlights the texture of his skin and facial features, casting noticeable shadows. He is looking slightly to his right, and his expression appears contemplative or serious. He is wearing a suit, which suggests a formal or professional setting. The background is plain and does not distract from the subject. The overall composition focuses on the subject's face and upper torso.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-24

This is a black-and-white portrait photograph of a man. The image is framed with a light-colored border. The man is positioned slightly off-center, with his face turned toward the left side of the frame. He is wearing a dark suit with a tie and a collared shirt. The lighting creates stark contrasts, with one side of his face brightly illuminated and the other side in deep shadow, highlighting the contours of his face. His expression is serious, and his eyes are looking slightly downward. The background is plain and unobtrusive, drawing attention to the subject's face and upper body.