Machine Generated Data

Tags

Color Analysis

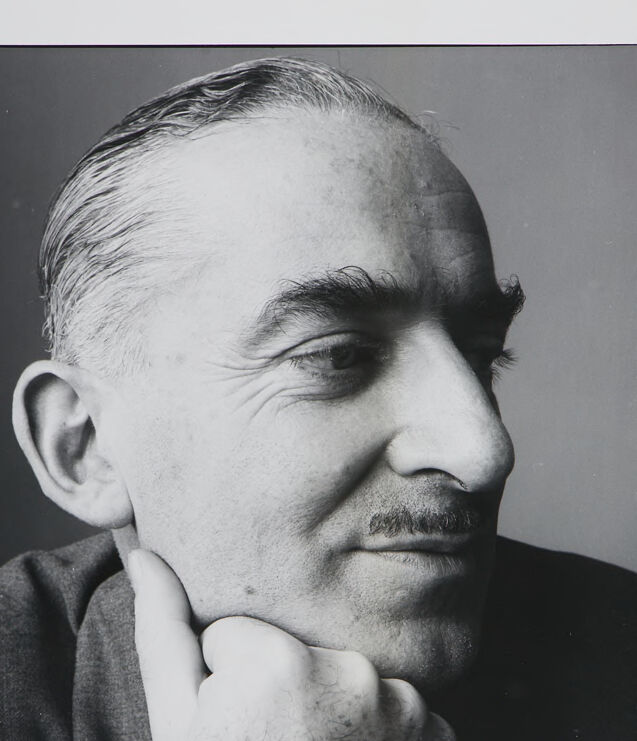

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 47-53 |
| Gender | Male, 100% |
| Calm | 98.3% |
| Happy | 0.6% |
| Disgusted | 0.3% |
| Angry | 0.3% |
| Sad | 0.1% |
| Surprised | 0.1% |
| Confused | 0.1% |
| Fear | 0.1% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 98.2% |

Categories

Imagga

Created on 2022-01-09

| Category | Confidence |
| --- | --- |
| paintings art | 62% |
| people portraits | 37.9% |

Captions

Microsoft

Created by unknown on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a man making a face for the camera | 83.1% |
| a man looking at the camera | 83% |
| a man with a beard looking at the camera | 69.1% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-14

portrait of the writer and poet.

Salesforce

Created by general-english-image-caption-blip on 2025-05-17

a photograph of a man with a mustache and mustaches on his face

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

The image is a black-and-white portrait photograph with a close-up view of a person's head. It includes visible details such as an ear and a hand resting under the chin. The individual appears to be wearing a jacket or similar clothing, and the edges of the photograph are bordered by a white frame.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image is a black and white photograph that shows a portion of an individual. The person has short hair that is neatly combed back, and they are wearing a dark-colored garment with a smooth texture. One visible ear is clearly defined, and the person's hand is resting under their chin. The photograph is framed with a white border, which has distinct angled corners, giving it a classic, vintage appearance.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-17

The image shows a close-up portrait of a man with a serious expression on his face. He has a prominent brow, deep-set eyes, and a well-defined nose and chin. His hair is neatly combed back, and he appears to be wearing a dark suit or jacket. The image is in black and white, giving it a classic, timeless quality.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-17

This is a black and white portrait photograph showing a middle-aged man in a contemplative pose. He is resting his chin on his hand and looking off to the side with a slight smile. He has a thin mustache, slicked-back hair, and is wearing what appears to be a dark sweater or jacket. The photograph appears to be professionally taken, with good lighting that creates depth and contrast. The composition is classic portrait style, captured from a side angle that highlights the subject's profile and facial features. The background is simple and neutral, keeping the focus on the subject.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a man with his hand on his chin, looking to the right. The man has short hair that is combed back, and he is wearing a dark-colored shirt. He is holding his right hand up to his chin, with his index finger touching his chin and his thumb resting on the back of his hand.

The background of the image is a plain wall, and the photo is surrounded by a white border. The overall atmosphere of the image is one of contemplation or thoughtfulness, as the man appears to be deep in thought.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

The image is a black and white photograph of a man with his hand on his chin. The man has short, graying hair that is combed back. He is wearing a dark-colored shirt or jacket. His right hand is raised to his chin, with his thumb resting on his cheek and his fingers curled around his jawline. The background of the image is a plain gray wall. The overall atmosphere of the image suggests that it may be a portrait or a headshot of the man, possibly taken for professional or artistic purposes.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-31

The image is a black-and-white photograph of a man. He is looking sideways with his hand resting on his chin. His hair is short and combed back. He has a mustache and a beard. He is wearing a dark shirt. The photograph is cropped close to the subject, with only his head and shoulders visible. The background is dark, with no visible details. The image has a vintage look, with a slightly grainy texture.

Created by amazon.nova-pro-v1:0 on 2025-05-31

The image is a black-and-white photograph of a man. He has a mustache and is wearing a dark shirt. His hair is short and gray. He is looking to the side, with his head slightly tilted. His right hand is resting on his chin, and his fingers are gently touching his cheek. His facial expression is contemplative, suggesting deep thought or reflection. The background is plain, focusing attention on the subject.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-17

Here is a description of the image:

The image is a black and white photograph of a middle-aged man in a close-up shot. He appears to be deep in thought, with his right hand resting on his chin. He has a receding hairline, dark, bushy eyebrows, and a neatly trimmed mustache. The background is a soft gray. The photo is framed with a white border. The image has a vintage or classic feel to it.

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

The image is a black and white portrait of an older man. He has receding, gray hair, thick eyebrows, and a mustache. His head is turned to the right, and he is gazing off into the distance. His right hand is raised to his chin in a thoughtful or contemplative pose. He is wearing a dark shirt or jacket. The photo is framed with a white border, resembling a polaroid print. The background is a plain, neutral gray.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

The image is a black-and-white portrait photograph of an older man. He has short, neatly combed hair and a mustache. He is wearing a dark suit jacket and a tie. His left hand is resting on his chin, suggesting a contemplative or thoughtful pose. The lighting highlights the contours of his face, giving the image a dramatic and serious tone. The background is plain and dark, which helps to focus attention on the man's face and expression. The photograph appears to be professionally taken, with careful attention to composition and lighting.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-04

This is a black-and-white portrait of a man. The photograph is cropped to focus on his head and shoulders. The man has a thoughtful expression, with his head slightly tilted and his hand resting on his chin. His hair is short and graying, and he has a mustache. The lighting highlights the texture of his skin and the contours of his face. The background is a plain, dark shade, which makes the subject stand out. The image has a classic, timeless quality, likely from an earlier era.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-04

This image is a black-and-white portrait of a man. He appears to be middle-aged or older, with receding hair and a thoughtful expression. His head is slightly tilted, and his eyes are looking to the side, not directly at the camera. He has a thin mustache and is resting his chin on his hand, with his fingers curled inward. The background is plain and unobtrusive, which helps to focus attention on the man's face. The lighting is soft, highlighting the contours of his face and giving the image a classic, timeless quality. The photograph is framed with a white border, suggesting it may be a print or a photograph displayed in a gallery or collection.