Machine Generated Data

Face analysis

AWS Rekognition

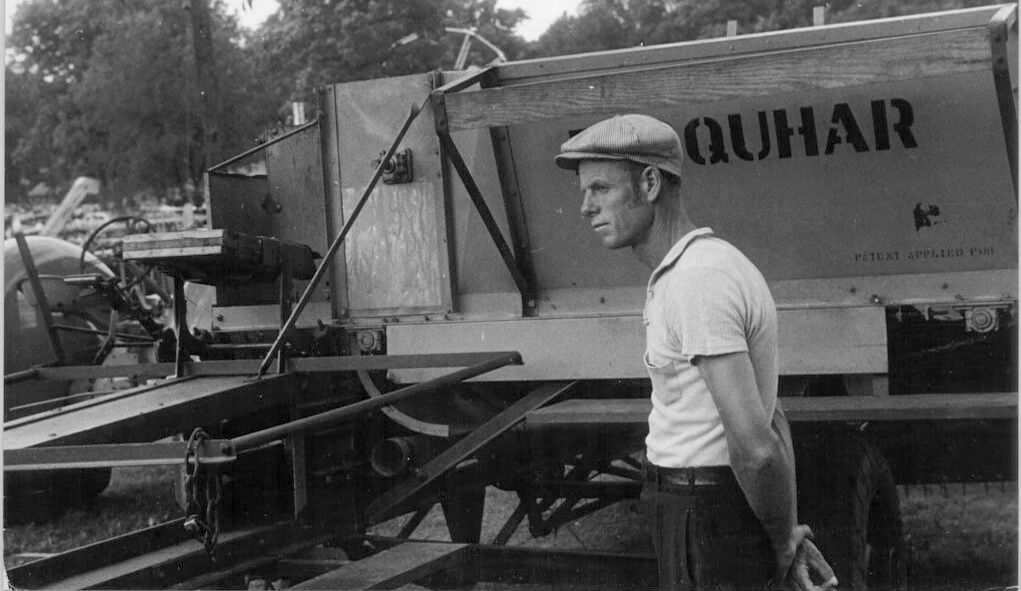

| Attribute | Value |
| --- | --- |
| Age | 42-50 |
| Gender | Male, 99.6% |
| Calm | 99.7% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0% |
| Angry | 0% |
| Disgusted | 0% |
| Happy | 0% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Adult | 99.3% |

Categories

Imagga

created on 2023-10-06

| Category | Confidence |
| --- | --- |
| streetview architecture | 62.1% |
| cars vehicles | 28.7% |
| paintings art | 4.5% |
| nature landscape | 2.6% |
| people portraits | 1.4% |

Captions

Microsoft

created by unknown on 2018-05-11

| Caption | Confidence |
| --- | --- |
| a man standing in front of a truck | 85.9% |
| a man standing in front of a boat | 79.6% |
| a man that is standing in front of a truck | 79.2% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-31

a photograph of a man in a hat and a hat on a truck

Created by general-english-image-caption-blip-2 on 2025-07-07

a man standing next to a tractor with a sign that says qurar

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-09

The black-and-white image depicts a man standing near an agricultural machine or trailer with "FAQUHAR" written prominently on its side. The equipment appears to be mounted on a frame with wheels, likely designed for farming or industrial purposes. In the background, there are trees and more machinery, suggesting an outdoor setting, possibly on a farm or during an agricultural expo. The man is wearing a flat cap and a short-sleeved polo shirt, with his hands held behind his back.

Created by gpt-4o-2024-08-06 on 2025-06-09

The black and white image shows a man standing next to a piece of agricultural machinery. The machinery has the word "QUHAR" partially visible on its side, suggesting it might be farm equipment. The man is wearing a flat cap, a collared short-sleeve shirt, and dark trousers. The scene appears to be outdoors, with trees and other equipment visible in the background. The man has his hands behind his back and is looking towards the machinery.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-18

The image shows a man standing next to a large truck or trailer with the name "Quhar" written on the side. The man is wearing a cap and a white t-shirt, and appears to be examining or inspecting the vehicle. The background includes trees and suggests an outdoor, rural setting. The image has a black and white, vintage appearance.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-18

This is a black and white photograph showing someone working with what appears to be farm equipment or machinery. The person is wearing a white t-shirt and a cap, standing next to some mechanical equipment that has "ROHAR" (or similar text) visible on its side. The image has an industrial or agricultural feel to it, likely from the mid-20th century based on the photographic style and clothing. Trees can be seen in the background, and the machinery appears to have various mechanical components and levers visible.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-12

The image is a black-and-white photograph of a man standing in front of a large piece of machinery, possibly a combine harvester or other agricultural equipment.

- The man is wearing a light-colored shirt and dark pants, and he has a flat cap on his head.

- He is looking to the left of the camera with a serious expression.

- Behind him is a large piece of machinery with the word "QUHAR" written on the side in large letters.

- The background of the image is out of focus, but it appears to be a rural area with trees and a cloudy sky.

The overall atmosphere of the image suggests that it was taken during the day, possibly in the late morning or early afternoon, and that the man is engaged in some kind of work or activity related to the machinery behind him.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-12

The image depicts a black-and-white photograph of a man standing in front of a large piece of machinery, with trees visible in the background. The man is dressed in a white polo shirt, dark pants, and a flat cap, and he appears to be engaged in some form of work or maintenance activity.

The machinery behind him features a large metal box with the word "QUHAR" printed on it, although the context and purpose of this equipment are unclear. The overall atmosphere of the image suggests a rural or industrial setting, possibly from an earlier era given the black-and-white format and the style of clothing worn by the individual.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-06-05

The black-and-white image features a man standing in front of a machinery vehicle with the word "QUHAR" printed on it. He is wearing a hat and a white t-shirt, and he has his hand on his hip. He is looking to the left, and his mouth is slightly open. Behind him are several trees, and the ground is covered with grass. The image has a vintage filter.

Created by amazon.nova-lite-v1:0 on 2025-06-05

The image is a black-and-white photograph of a man standing beside a large, vintage agricultural vehicle. The vehicle appears to be a type of thresher or combine harvester, with a large wooden box on top labeled "QUHAR." The man is wearing a cap and a white t-shirt, and he is standing with his hands clasped behind his back. The vehicle has various mechanical parts and attachments, and there are trees and other vegetation in the background.

Google Gemini

Created by gemini-2.0-flash on 2025-05-24

Here's a description of the image:

Overall Impression:

The image appears to be a vintage black and white photograph. It depicts a man standing next to a piece of farm machinery or equipment. The setting looks like it could be a rural or agricultural environment.

Detailed Description:

Subject: The main subject is a man standing in profile facing left. He is wearing a cap and appears to be wearing a casual shirt and trousers. His hands are clasped behind his back.

Farm Machinery: The man is standing next to a large piece of machinery with the word "QUHAR" prominently displayed on its side. The equipment seems to be complex with various mechanical parts, beams, and a large wheel in the back.

Background: The background includes trees, suggesting an outdoor setting. It's possible the equipment is in a field or a farmyard.

Inferences and Context:

Given the machinery and the man's attire, it's reasonable to infer that the photograph is related to farming or agriculture. The equipment might be a type of harvester, trailer, or other farm implement. The man could be the operator, owner, or a worker associated with the machinery. The style of the clothing and equipment suggests the photograph may have been taken several decades ago.

Created by gemini-2.0-flash-lite on 2025-05-24

Here's a description of the image:

Overall Impression:

The image is a black and white photograph, likely taken in the mid-20th century. It features a man standing next to a large piece of machinery, possibly an agricultural machine. The scene has a rural, industrial feel.

Key Elements:

- The Man: A fair-skinned man, wearing a cap, short-sleeved shirt, and dark pants. He appears to be looking off to the side with a serious expression. His hands are clasped behind his back.

- The Machine: A large, boxy machine with "QUHAR" prominently displayed on its side. There are various levers, pipes, and other mechanical components visible. The words "PATENT APPLIED FOR" are written below the name.

- Background: Trees and foliage are visible in the background, suggesting an outdoor setting. The presence of other machinery might indicate a farm or industrial fair setting.

Composition and Atmosphere:

The photograph is well-composed, with the man positioned as the central focus. The overall tone is somewhat somber and serious. The details suggest manual labor. The black-and-white format adds a sense of historical authenticity.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image is a black-and-white photograph depicting a man standing next to a large piece of agricultural machinery. The man is wearing a cap and a short-sleeved shirt, and he appears to be in a rural setting with trees in the background. The machinery he is standing next to has the word "QUHAR" prominently displayed on it, along with the phrase "PATENT APPLIED FOR." The equipment appears to be some type of agricultural processing machine, possibly related to harvesting or processing crops. The man's posture suggests he is either operating or inspecting the machinery. The overall scene conveys a sense of work and industry in a rural environment.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-07

The image is a black-and-white photograph depicting a man standing next to a piece of agricultural machinery. The man is wearing a flat cap and a short-sleeved shirt, with his hands resting on his hips. He appears to be looking off into the distance, possibly in a rural or farm setting. The machinery behind him is labeled with the word "QUHAR," and it seems to be a type of farm implement, possibly a grain or seed applicator, given the design and components visible. The background features trees and some farm infrastructure, suggesting an agricultural environment. The overall style of the photograph suggests it may have been taken in the mid-20th century.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-07

This black-and-white photograph captures a man standing beside a vintage agricultural machine, likely a combine harvester or similar farm equipment. The man is wearing a white short-sleeved shirt, dark pants, and a flat cap. The machinery has a large metal structure with the word "QUHAR" prominently displayed on its side. The background features trees, indicating an outdoor, rural setting. The overall scene suggests a historical context, possibly from the mid-20th century.