Machine Generated Data

Tags

Amazon

created on 2022-01-23

Clarifai

created on 2023-10-26

Imagga

created on 2022-01-23

| Tag | Confidence |
| --- | --- |
| portrait | 27.8 |
| person | 25.3 |
| face | 24.1 |
| negative | 23 |
| man | 22.8 |
| adult | 21.3 |
| male | 21.3 |
| device | 19.9 |
| looking | 19.2 |
| film | 18.8 |
| head | 18.5 |
| hair | 18.2 |
| black | 18 |
| people | 17.8 |
| human | 17.2 |
| eyes | 17.2 |
| expression | 17.1 |
| attractive | 15.4 |
| old | 15.3 |
| senior | 15 |
| photographic paper | 13.8 |
| happy | 13.8 |
| close | 13.7 |
| computer | 13.4 |
| laptop | 13.3 |
| look | 13.1 |
| one | 12.7 |
| model | 12.4 |
| fashion | 12.1 |
| sexy | 12 |
| sad | 11.6 |
| lady | 11.4 |
| pretty | 11.2 |
| smiling | 10.8 |
| holding | 10.7 |
| smile | 10.7 |
| brunette | 10.5 |
| work | 10.2 |
| cute | 10 |
| hand | 9.9 |
| working | 9.7 |
| elderly | 9.6 |
| home | 9.6 |
| serious | 9.5 |
| mouth | 9.4 |
| photographic equipment | 9.3 |
| dark | 9.2 |
| blackboard | 9.2 |
| retired | 8.7 |
| boy | 8.7 |
| hands | 8.7 |
| business | 8.5 |
| emotion | 8.3 |
| blond | 8.2 |
| style | 8.2 |
| office | 8 |
| lifestyle | 7.9 |
| behind | 7.8 |
| technology | 7.4 |
| lips | 7.4 |
| paper | 7.3 |
| pose | 7.2 |
| aged | 7.2 |
| eye | 7.1 |
| handsome | 7.1 |
| mustache | 7.1 |
| call | 7 |

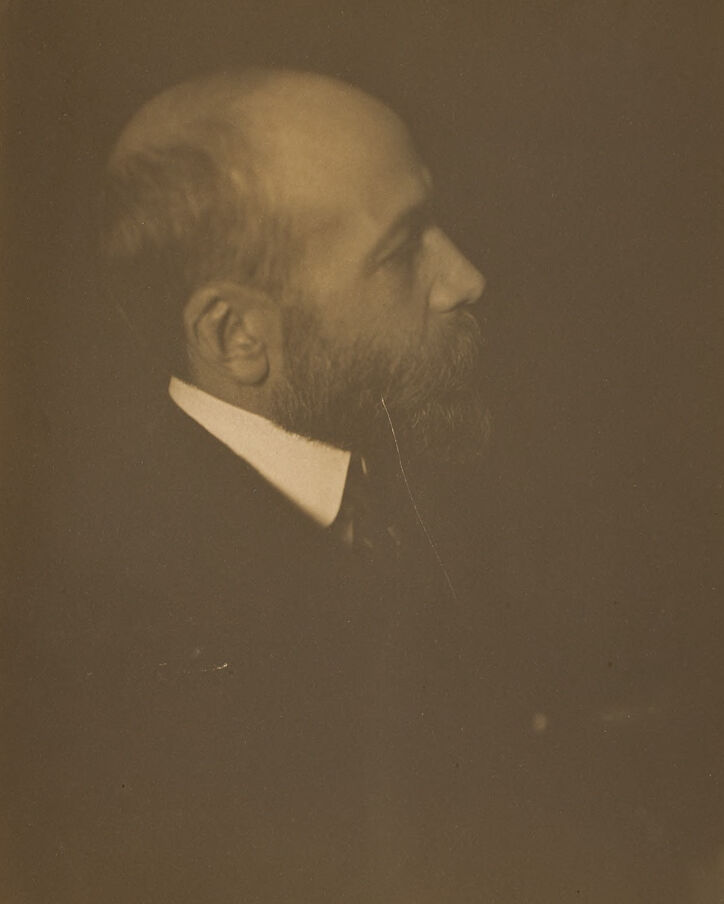

Google

created on 2022-01-23

| Tag | Confidence |
| --- | --- |
| Jaw | 87.8 |
| Beard | 86.6 |
| Art | 82.3 |
| Rectangle | 82 |
| Tints and shades | 77.3 |
| Facial hair | 69.6 |
| Moustache | 66.9 |
| Visual arts | 66.5 |
| Vintage clothing | 65.2 |
| Stock photography | 64.5 |
| Self-portrait | 64 |
| Collar | 62.1 |
| Room | 59.2 |
| History | 58.9 |
| Square | 57 |
| Portrait | 56.8 |
| Illustration | 56.4 |
| Paper product | 55.2 |
| Font | 54.7 |
| Painting | 53.7 |

Microsoft

created on 2022-01-23

| Tag | Confidence |
| --- | --- |
| human face | 98.8 |
| text | 98.1 |
| person | 94.9 |
| man | 85.7 |
| portrait | 83.1 |
| black and white | 75.9 |
| black | 70.2 |
| white | 65.9 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 41-49 |
| Gender | Male, 100% |
| Calm | 99.6% |
| Sad | 0.2% |
| Confused | 0.1% |
| Surprised | 0% |
| Angry | 0% |
| Fear | 0% |
| Disgusted | 0% |
| Happy | 0% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 99.6% |

Captions

Microsoft

created on 2022-01-23

| Caption | Confidence |
| --- | --- |
| an old photo of a man | 65.8% |
| old photo of a man | 61.3% |
| a man in a suit and tie | 61.2% |