Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 25-35 |
| Gender | Male, 99.6% |
| Calm | 85.1% |
| Sad | 10.9% |
| Confused | 1% |
| Happy | 0.7% |
| Fear | 0.6% |
| Disgusted | 0.6% |
| Angry | 0.5% |
| Surprised | 0.5% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Painting | 83.7% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 100% |

Captions

Microsoft

Created by unknown on 2022-01-23

| Caption | Confidence |
|---|---|
| an old photo of a person | 67.7% |
| old photo of a person | 64.5% |
| a person posing for a photo | 62.8% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-17

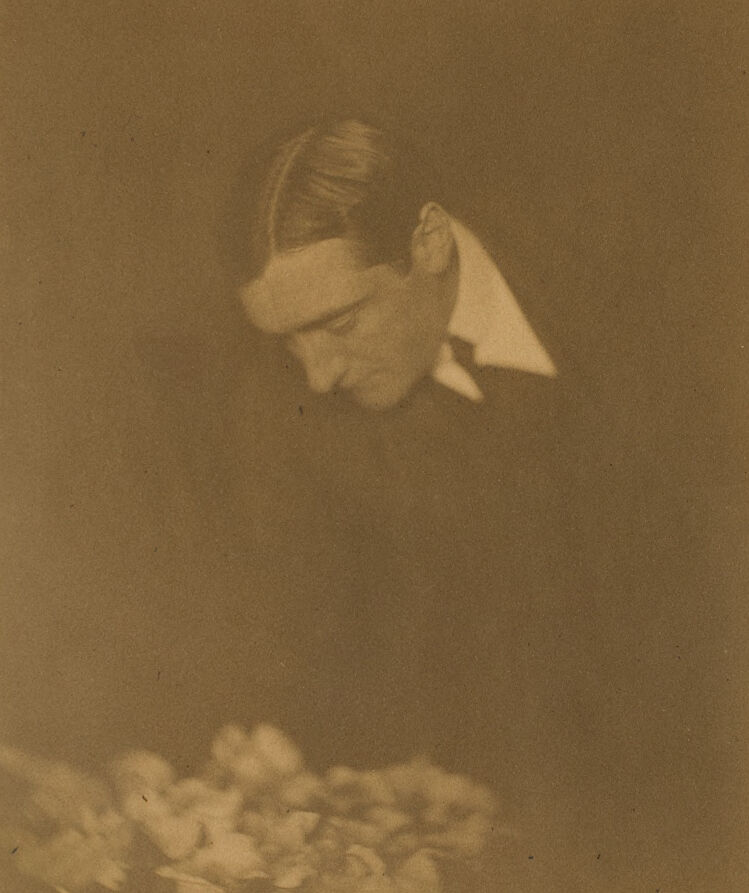

Here's a description of the image:

The image is a sepia-toned photograph, likely vintage, featuring a man in the upper portion and a grouping of something (possibly flowers) at the bottom.

- The Man: The man is the focal point of the image. His face is turned downward, creating an impression of introspection or contemplation. He has dark hair, neatly slicked back, and is wearing a dark jacket or coat, with the crisp, white points of his collar visible. His expression is obscured by the angle, heightening the sense of mystery.

- The Flowers (Possibly): Below the man's face, there's a soft, blurred shape that appears to be a cluster of blossoms. The details are indistinct, but the form suggests flowers, adding a touch of delicate beauty to the overall composition.

- Tone and Composition: The image is bathed in a warm sepia tone, enhancing its vintage feel. The composition is well-balanced, with the man’s head positioned near the top of the frame and the implied flowers balancing at the bottom. The background is mostly dark and undifferentiated, giving focus to the man and flowers. The photograph is likely a portrait or a staged image aimed at capturing a specific mood or theme.

Created by gemini-2.0-flash on 2025-05-17

The vintage photograph, rendered in sepia tones, depicts a man looking down at a bouquet of flowers. The man, positioned in the upper portion of the frame, has neatly combed hair, a defined jawline, and is dressed in a dark suit with a crisp white collar. His gaze is directed downward, suggesting a somber or contemplative mood.

The bouquet of flowers is located in the lower portion of the image, and the flowers are a pale color, contrasting slightly with the darker background. The overall lighting is soft, creating a gentle and diffused effect. The sepia tone adds a sense of nostalgia and age to the photograph.