Machine Generated Data

Tags

Amazon

created on 2022-01-23

Clarifai

created on 2023-10-26

Imagga

created on 2022-01-23

| Tag | Confidence |
| --- | --- |
| person | 23.1 |
| man | 22.8 |
| male | 19.3 |
| sport | 18.3 |
| outdoor | 15.3 |
| people | 15.1 |
| protection | 13.6 |
| danger | 13.6 |
| statue | 13.5 |
| uniform | 12.8 |
| military | 12.6 |
| player | 12.5 |
| athlete | 12.3 |
| sky | 12.1 |
| attendant | 12 |
| clothing | 12 |
| old | 11.8 |
| adult | 11.8 |
| toxic | 11.7 |
| mask | 11.7 |
| weapon | 11.1 |
| soldier | 10.7 |
| protective | 10.7 |
| nuclear | 10.7 |
| travel | 10.6 |
| summer | 10.3 |
| competition | 10.1 |
| industrial | 10 |
| dirty | 9.9 |
| ballplayer | 9.9 |
| radioactive | 9.8 |
| radiation | 9.8 |
| war | 9.7 |
| chemical | 9.6 |
| gas | 9.6 |
| sculpture | 9.6 |
| beach | 9.3 |
| black | 9 |
| sunset | 9 |
| outdoors | 9 |
| brass | 8.9 |
| helmet | 8.9 |
| destruction | 8.8 |
| accident | 8.8 |
| boy | 8.7 |
| dangerous | 8.6 |
| horse | 8.5 |
| trombone | 8.3 |
| world | 8.2 |
| wind instrument | 8.1 |
| military uniform | 7.9 |
| stalker | 7.9 |
| grass | 7.9 |
| art | 7.9 |
| planner | 7.9 |
| child | 7.8 |
| ancient | 7.8 |
| death | 7.7 |
| industry | 7.7 |
| dark | 7.5 |
| silhouette | 7.4 |
| tradition | 7.4 |
| musical instrument | 7.3 |
| religion | 7.2 |
| history | 7.2 |
| game | 7.1 |
| portrait | 7.1 |
| contestant | 7.1 |
| day | 7.1 |
| animal | 7.1 |
| gun | 7 |

Google

created on 2022-01-23

| Tag | Confidence |
| --- | --- |
| Musical instrument | 89 |
| Vintage clothing | 75.8 |
| Folk instrument | 70.6 |
| String instrument | 67.3 |
| Stock photography | 66.6 |
| History | 66.1 |
| Uniform | 63.3 |
| Soldier | 60.8 |
| Visual arts | 59.7 |
| Art | 58.7 |
| Suit | 57.5 |
| Drum | 54.7 |
| Military organization | 53.5 |
| Crew | 50.8 |
| Retro style | 50.8 |

Face analysis

AWS Rekognition

| Age | 38-46 |

| Gender | Male, 99.8% |

| Calm | 94.6% |

| Surprised | 3.4% |

| Happy | 0.6% |

| Disgusted | 0.5% |

| Angry | 0.5% |

| Confused | 0.2% |

| Fear | 0.1% |

| Sad | 0.1% |

AWS Rekognition

| Age | 35-43 |

| Gender | Male, 100% |

| Confused | 40.9% |

| Calm | 33.8% |

| Sad | 11.3% |

| Angry | 5.5% |

| Fear | 3.6% |

| Surprised | 2.2% |

| Happy | 1.5% |

| Disgusted | 1.4% |

AWS Rekognition

| Age | 49-57 |

| Gender | Male, 99.9% |

| Calm | 98.6% |

| Sad | 0.5% |

| Confused | 0.5% |

| Disgusted | 0.1% |

| Angry | 0.1% |

| Surprised | 0.1% |

| Happy | 0.1% |

| Fear | 0% |

AWS Rekognition

| Age | 30-40 |

| Gender | Male, 99.9% |

| Calm | 97.2% |

| Sad | 1.2% |

| Confused | 0.6% |

| Angry | 0.3% |

| Disgusted | 0.3% |

| Happy | 0.2% |

| Surprised | 0.1% |

| Fear | 0.1% |

AWS Rekognition

| Age | 40-48 |

| Gender | Male, 100% |

| Calm | 43.6% |

| Sad | 19.1% |

| Fear | 14% |

| Angry | 5.6% |

| Happy | 5.4% |

| Disgusted | 4.4% |

| Surprised | 4.1% |

| Confused | 3.8% |

Microsoft Cognitive Services

| Age | 30 |

| Gender | Male |

Microsoft Cognitive Services

| Age | 44 |

| Gender | Male |

Microsoft Cognitive Services

| Age | 44 |

| Gender | Male |

Microsoft Cognitive Services

| Age | 61 |

| Gender | Male |

Microsoft Cognitive Services

| Age | 33 |

| Gender | Male |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Unlikely |

| Joy | Very unlikely |

| Headwear | Possible |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Unlikely |

| Joy | Very unlikely |

| Headwear | Likely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Unlikely |

| Joy | Very unlikely |

| Headwear | Possible |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Possible |

| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 86.8% |
| people portraits | 10.3% |
| interior objects | 1.6% |

Captions

Microsoft

created by unknown on 2022-01-23

| Caption | Confidence |
| --- | --- |
| a vintage photo of a group of people posing for the camera | 93.3% |
| a vintage photo of a group of people posing for a picture | 93.2% |
| an old photo of a group of people posing for the camera | 93.1% |

Clarifai

created by general-english-image-caption-blip on 2025-05-15

| Caption | Confidence |
| --- | --- |
| a photograph of a group of people standing around a barrel of beer | -100% |

Google Gemini

Created by gemini-2.0-flash on 2025-05-08

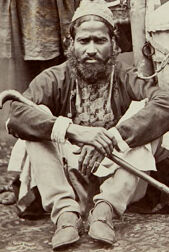

The image is a full shot of a group of five men, likely photographed in a studio setting. The men are dressed in traditional attire, suggesting a cultural or regional identity. The backdrop is a plain, light-colored curtain, creating a neutral space around the subjects. The floor is covered with what appears to be an Oriental rug, adding a touch of detail to the scene.

In the center of the composition, a man stands with his hand resting on a large, drum-like object. To his left, another man holds a tall pole with what looks like fabric attached to it. On the right, a man stands with a large bundle of items slung over his shoulder. Two men are seated in the foreground, one leaning on a walking stick and the other holding a small object in his hands.

The image is a historical photograph, likely taken in the late 19th or early 20th century, given the style and composition. The men appear to be posing for the camera, and their expressions are serious and stoic. The lighting is soft and even, highlighting the details of their clothing and features.

Created by gemini-2.0-flash-lite on 2025-05-08

Here's a description of the image:

Overall Impression:

The image is a sepia-toned photograph, likely dating from the 19th century based on the style and color. It depicts a group of five men posed in front of a plain, possibly fabric, backdrop. They are dressed in traditional clothing and appear to be of South Asian descent.

Detailed Description:

The Men: The men are the central focus of the image.

- They are all wearing long shirts or tunics, and some have wrapped waists.

- They have distinct facial features and expressions.

- One man is carrying a large bundle on his back.

- One man is seated, one is leaning on a staff, and the others are standing.

- They appear to have beards and traditional head coverings.

Props and Items:

- One man is standing beside a tall pole which has cloth wrapped around it.

- Another object that looks like a drum or container is visible.

- There are some walking staffs.

Background:

- The background is plain and slightly faded, which helps emphasize the subjects.

- The ground is patterned, suggesting a rug or similar surface.

Overall, the photograph provides a glimpse into a specific time and place, showcasing the cultural attire and appearance of these men.