Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 44-62 |
| Gender | Male, 50.4% |
| Happy | 49.6% |
| Confused | 49.5% |
| Surprised | 50.1% |
| Fear | 49.6% |
| Disgusted | 49.5% |
| Angry | 49.5% |
| Sad | 49.5% |
| Calm | 49.8% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Label | Confidence |
|---|---|
| Horse | 98% |

Categories

Imagga

Created on 2019-11-11

| Category | Confidence |
|---|---|
| paintings art | 71% |
| pets animals | 23% |
| streetview architecture | 3.4% |
| interior objects | 1% |

Captions

Microsoft

Created by unknown on 2019-11-11

| Caption | Confidence |
|---|---|
| a vintage photo of a person | 53.7% |
| a vintage photo of a person in a desert | 45.8% |
| a vintage photo of a person riding a horse | 29.8% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-27

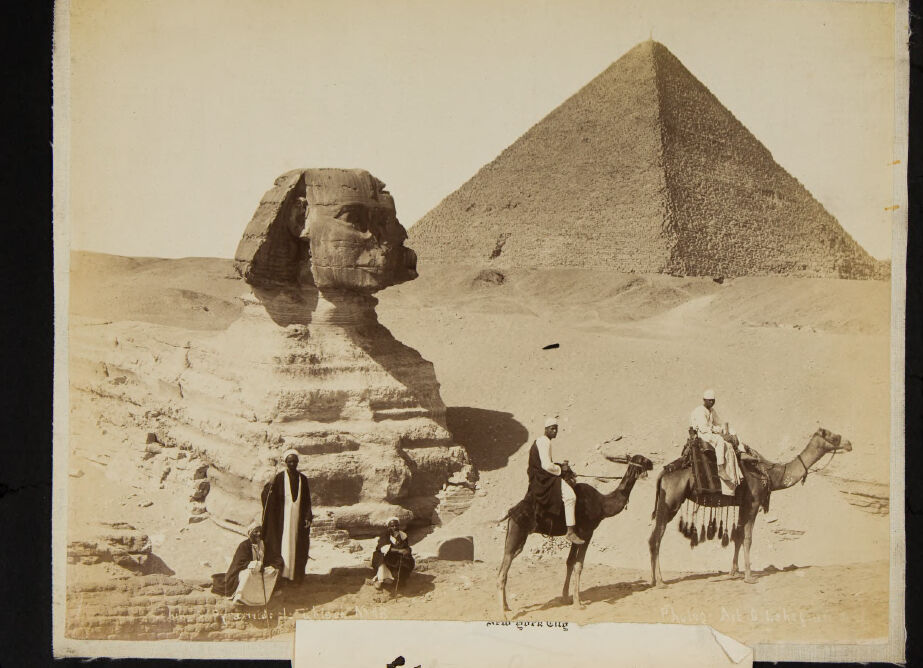

a photograph of a group of people standing around a sphinxee

Created by general-english-image-caption-blip-2 on 2025-07-10

an old photo of people riding camels in front of a pyramid

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-07

This sepia-toned photograph depicts a historic scene of the Great Sphinx of Giza and one of the Pyramids of Giza, situated in the Egyptian desert. The Sphinx, a massive limestone statue with a lion's body and a human head, is shown prominently in the foreground. Its features appear weathered due to age and erosion.

To the right of the Sphinx, the pyramid dominates the background, towering over the desert landscape. The pyramid's steep sides and rough texture reflect its ancient construction.

In the foreground, there are four figures dressed in traditional clothing. Two individuals are sitting near the Sphinx, and one is standing. On the far right, two others are sitting atop camels. The camels are adorned with decorative harnesses, adding a sense of cultural imagery to the scene.

The photograph likely dates back to the late 19th or early 20th century, judging by its style and attire of the figures. This image captures the essence of Egypt's iconic monuments and the timeless appeal of its ancient history.

Created by gpt-4o-2024-08-06 on 2025-06-07

The image depicts an iconic historical scene in the desert of Egypt, featuring the Great Sphinx of Giza and one of the Pyramids of Giza in the background. The Sphinx, a massive limestone statue with the body of a lion and the head of a human, is prominently displayed to the left side of the photograph. In the foreground, there are three men dressed in traditional attire. Two of them are seated at the base of the Sphinx, while the third stands on the ground near them. To the right, two more individuals are riding camels, also wearing traditional garments. The pyramid in the background rises sharply to a point, contrasting with the flat, sandy terrain surrounding the monuments. The photograph appears to be a vintage sepia-toned print, capturing a historic and cultural snapshot of Egypt.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-12

The image shows a famous historical landmark - the Great Sphinx of Giza and the Pyramid of Giza in the background. In the foreground, there are several people riding camels, dressed in traditional Middle Eastern attire. The scene captures the grand scale and ancient history of this iconic Egyptian site, with the massive Sphinx statue and the towering pyramid creating an impressive and awe-inspiring landscape.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-12

This is a historic sepia-toned photograph showing the Great Sphinx and one of the pyramids of Giza in Egypt. The image appears to be from the late 19th or early 20th century. In the foreground, there are several people with camels positioned in front of the Sphinx, which provides a sense of scale for these massive ancient monuments. The Sphinx is shown in profile, displaying its iconic limestone form, while the pyramid rises majestically in the background against a clear sky. The desert landscape surrounding the monuments is clearly visible, and the photograph captures the timeless quality of these ancient Egyptian structures. The composition of the image, with the human figures and camels in the foreground, was a popular style for tourist and documentary photographs of this era.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-06

The image depicts a sepia-toned photograph of the Great Sphinx of Giza and the Pyramid of Khafre in Egypt. The Sphinx is positioned on the left side of the image, with its face turned towards the right. It appears to be constructed from limestone and features a large, flat head with a long, narrow body that tapers to a point at the base.

In the foreground, several individuals are visible, including a man riding a camel, a man sitting on the ground, and a child standing next to the Sphinx. The man on the camel is dressed in traditional Middle Eastern attire, consisting of a white robe and a headscarf. He is holding the reins of the camel in his right hand and appears to be gazing out at the pyramid in the background.

The Pyramid of Khafre is situated behind the Sphinx, rising high into the sky. It is constructed from large stone blocks and features a smooth, flat surface. The pyramid is surrounded by a series of smaller pyramids and other structures, which are not clearly visible in the image.

The background of the image is a clear blue sky, with no clouds or other obstructions visible. The overall atmosphere of the image is one of serenity and tranquility, with the majestic Sphinx and pyramid serving as a backdrop for the everyday activities of the people in the foreground.

Overall, the image provides a unique glimpse into the history and culture of ancient Egypt, showcasing the iconic landmarks and everyday life of the people who lived there.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-06

This image is a vintage photograph of the Great Sphinx of Giza and the Pyramid of Khafre, with several people and camels in the foreground. The photograph is sepia-toned and appears to be from the late 19th or early 20th century.

The Great Sphinx of Giza is a large limestone statue with the body of a lion and the head of a human. It is located in front of the Pyramid of Khafre, which is one of the three pyramids built by the pharaohs of Ancient Egypt. The pyramid is made of limestone and granite blocks, and it is covered in smooth white limestone to create a shining, reflective surface.

In the foreground of the photograph, there are several people and camels. The people are dressed in traditional Egyptian clothing, including long robes and headscarves. They appear to be posing for the photograph, and some of them are looking directly at the camera. The camels are also posing, with their heads held high and their ears perked up.

The background of the photograph is a clear blue sky, with no clouds or other features visible. The overall effect of the photograph is one of grandeur and majesty, capturing the beauty and wonder of these ancient monuments.

The photograph is mounted on a piece of cardboard or paper, and it has a rough, uneven edge around the border. There is a small piece of paper attached to the bottom of the photograph, but it is not clear what it says. The photograph appears to be in good condition, with no major tears or damage visible.

Overall, this photograph is a fascinating glimpse into the past, capturing the beauty and majesty of these ancient monuments. It is a valuable historical artifact that provides insight into the culture and history of Ancient Egypt.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-07

The image shows a black-and-white photograph of three people sitting on camels in front of the Great Sphinx of Giza and the Great Pyramid of Giza. The photograph is placed in a photo album. The people are wearing traditional clothing and hats. The man in the middle is wearing a white hat, while the other two are wearing black hats. The Great Sphinx of Giza is on the left, and the Great Pyramid of Giza is on the right.

Created by amazon.nova-pro-v1:0 on 2025-06-07

The image is a photograph of a desert landscape featuring the Great Pyramid of Giza and the Sphinx, with a few people in the foreground. The photograph is mounted on a black surface, likely a scrapbook page. The photograph has a sepia tone, giving it an old-fashioned appearance. Two people are riding camels, and two others are standing near the Sphinx. The photograph has a watermark in the bottom right corner.

Google Gemini

Created by gemini-2.0-flash on 2025-05-11

Here's a description of the image:

This is a sepia-toned photograph, likely from a historical photo album, featuring the iconic Sphinx and a pyramid in Egypt. In the foreground, the Sphinx rises majestically from the sandy terrain. The detail of its weathered features is visible, showcasing the ancient craftsmanship.

To the right of the Sphinx, two men are riding camels. They are dressed in traditional attire with turbans, fitting the North African setting. Near the base of the Sphinx, three other figures can be seen, with one standing and two seated, appearing to be either resting or perhaps acting as attendants.

In the background, a large pyramid dominates the skyline. Its triangular shape contrasts with the organic, rounded form of the Sphinx. The entire scene is bathed in a soft, muted light, lending an antique feel to the image. A white rectangular paper slip is partially covering the lower middle area of the image.

Created by gemini-2.0-flash-lite on 2025-05-11

This is an old photograph depicting the Great Sphinx of Giza and the Great Pyramid of Giza in Egypt. The Sphinx is in the foreground on the left, and the pyramid is in the background on the right. Several figures are present, including some on camels, with the desert landscape surrounding the monuments. The photo is mounted in a dark, possibly leather-bound album. It is a sepia-toned photograph, typical of historical photography, likely dating back to the 19th century.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-07

The image depicts a historical photograph of the Great Sphinx of Giza and one of the Pyramids of Giza in the background. The Sphinx, a large limestone statue of a mythical creature with a lion's body and a human head, is prominently featured in the foreground. The pyramid, likely the Great Pyramid of Khufu, stands majestically behind the Sphinx.

In the foreground, there are several people, including individuals standing and sitting near the Sphinx, as well as two people riding camels. The people are dressed in traditional attire, suggesting the photograph was taken during a time when such clothing was common. The overall scene captures a moment in time, showcasing the grandeur of these ancient Egyptian monuments and the people interacting with them. The photograph appears to be aged, indicating it was taken quite some time ago.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-19

This image is a vintage photograph that captures the iconic Sphinx and the Great Pyramid of Giza in Egypt. The photograph is sepia-toned, giving it a historical and aged appearance. In the foreground, there are three men dressed in traditional attire. Two of them are seated on camels, while the third is standing on the ground. The Sphinx, with its lion's body and human head, is prominently positioned to the left. The Great Pyramid is visible in the background, standing majestically in the desert landscape. The image appears to be taken under clear, sunny conditions, and the scene is characterized by the arid and open environment typical of the Giza Plateau. The photograph seems to be part of a collection, as indicated by the visible edges and creases along the long edges, suggesting it may be from an old album or scrapbook.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-19

This image is a vintage photograph, likely from the late 19th or early 20th century, depicting the iconic Great Sphinx and a pyramid in the background, likely the Pyramid of Khafre, both located in Giza, Egypt. The photograph appears to be part of a scrapbook or album, as it is mounted on a page with some visible wear and tear.

In the foreground, there are several figures: three people sitting or kneeling near the base of the Sphinx, and two people on camels, one of whom is leading the other camel. The individuals are dressed in traditional Middle Eastern attire, including long robes and head coverings. The scene captures a classic tourist or travel photograph of the time, showcasing the grandeur of the ancient Egyptian monuments and the local culture. The photograph has a sepia tone, which adds to its historical and nostalgic feel.