Machine Generated Data

Face analysis

AWS Rekognition

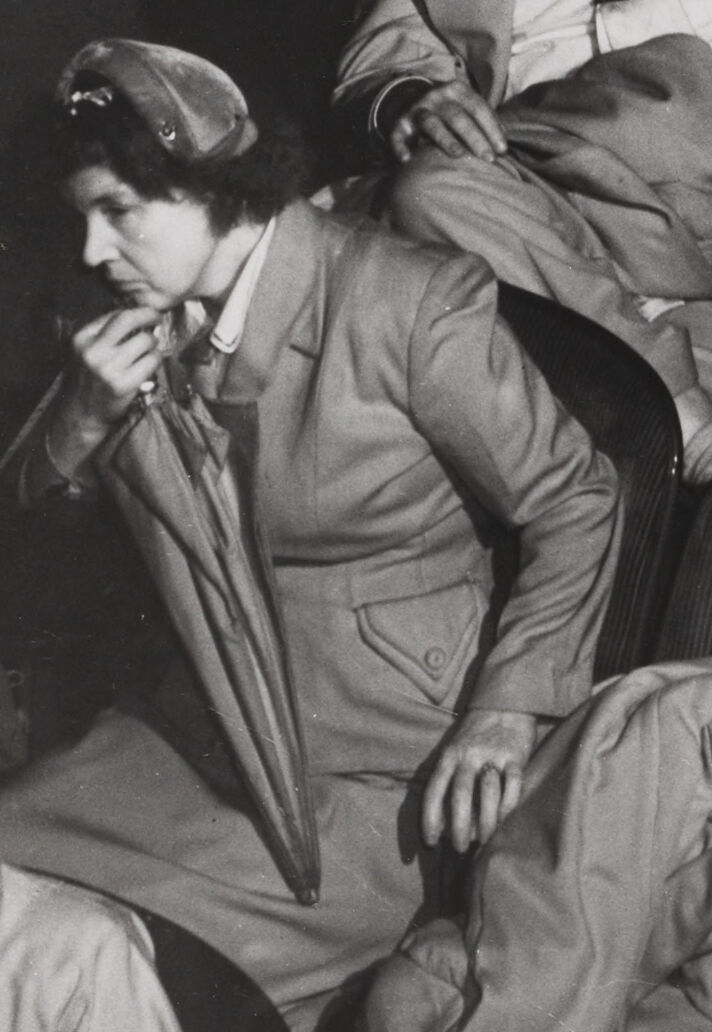

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Male, 94.6% |
| Happy | 6.3% |
| Disgusted | 0.4% |
| Sad | 43.4% |
| Angry | 3.1% |
| Calm | 42.7% |
| Surprised | 1.6% |
| Confused | 2.6% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 98.9% |

Categories

Imagga

| Category | Confidence |
|---|---|
| events parties | 97.8% |
| people portraits | 2.2% |

Captions

Microsoft

Created by unknown on 2018-02-09

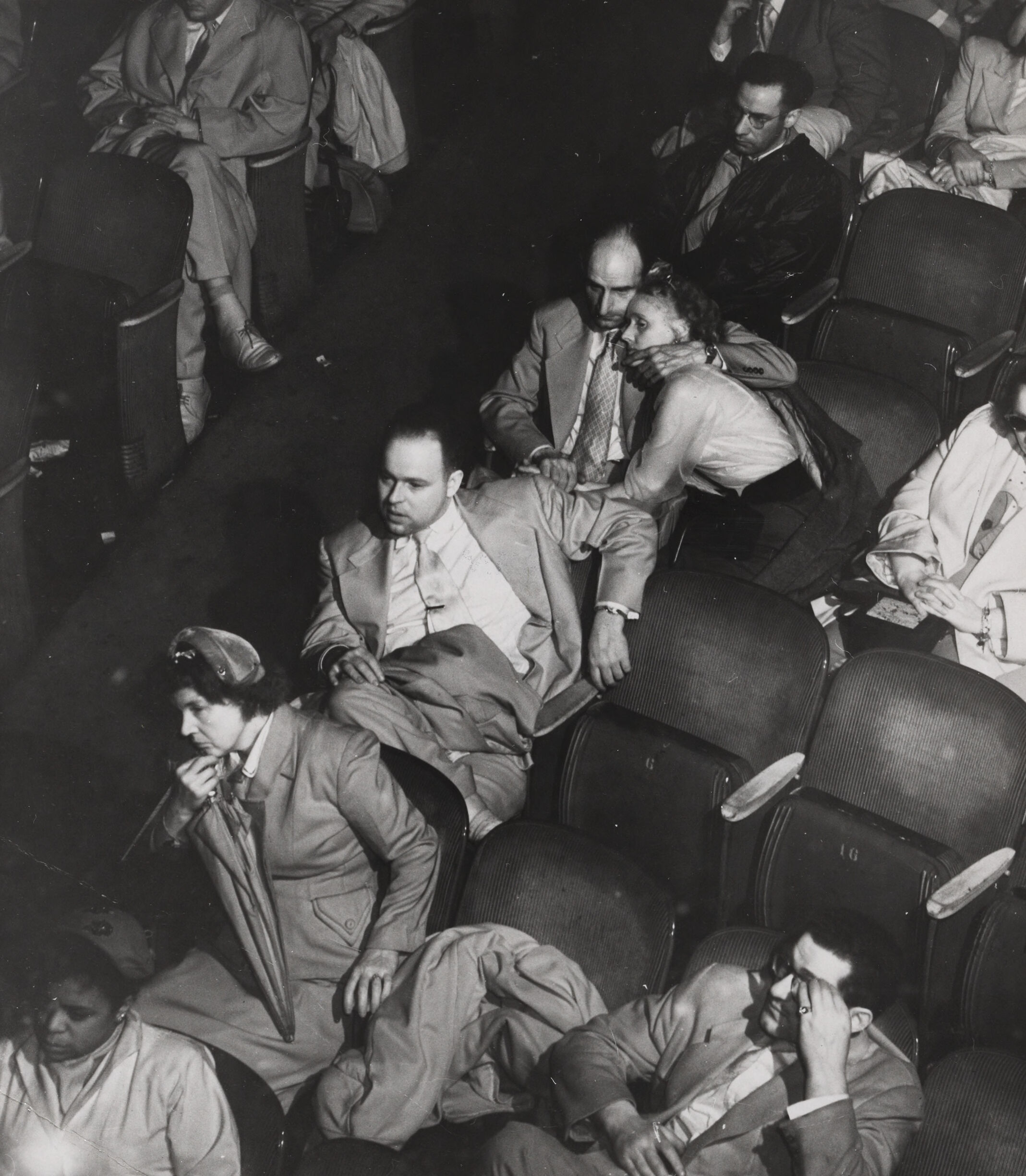

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people sitting posing for the camera | 96.2% |
| a group of people sitting posing for the camera | 96.1% |
| a group of people sitting posing for a photo | 96% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-12

| Caption | Confidence |
|---|---|
| a photograph of a group of people sitting in a row of seats | -100% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

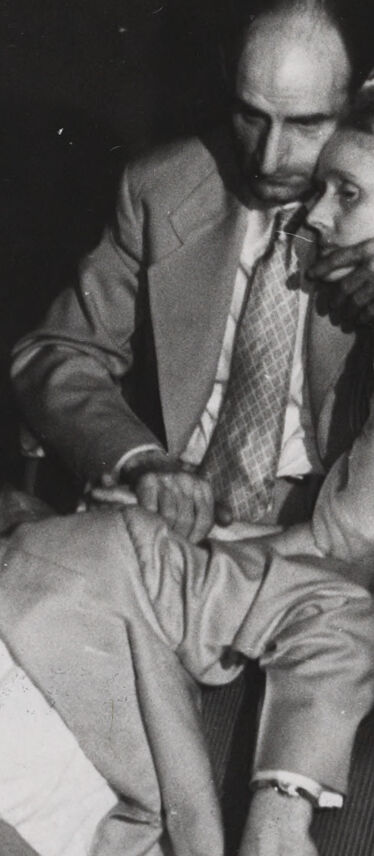

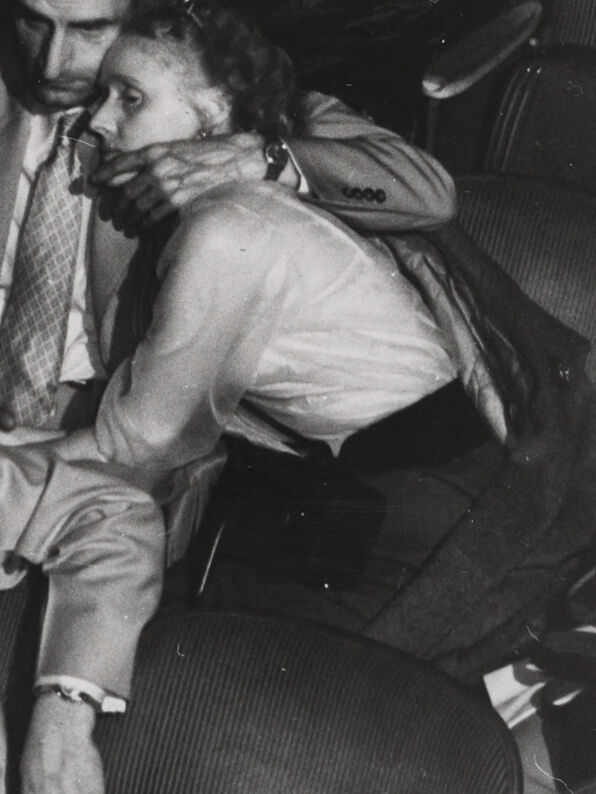

The image depicts a group of people seated in a theater or cinema. They are dressed in formal or semi-formal attire, such as suits and coats. Some individuals appear to be interacting with each other while others are looking ahead. The rows of seats and the overall environment suggest a public seating area meant for spectators.

Created by gpt-4 on 2024-11-28

The image is a black and white photograph showing a group of individuals seated in a theater or auditorium. The seats are arranged in rows, and most of the individuals appear to be wearing formal attire that suggests the photo may have been taken in an earlier era, likely mid-20th century given the fashion. The attire mainly consists of suits, ties, and possibly overcoats. Some individuals are looking towards something off-camera with their attention seemingly captured by whatever is occurring. The quality and style of the image suggest it might have historical significance or could be from a past event. There is no visible modern technology or contemporary clothing that would indicate a recent timeframe.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This is a black and white photograph showing people taking cover in what appears to be a theater or auditorium seating area. The image captures a moment of apparent alarm or emergency, with several individuals ducking down between and behind the rows of seats. The people are dressed in 1950s-style clothing, including suits and light-colored outerwear. The theater seats appear to be the classic fold-down style common in mid-century venues. The lighting and composition create a dramatic scene that suggests this was either a drill or a real emergency situation. The photograph appears to be taken from an elevated position looking down at the scene.

Created by claude-3-haiku-20240307 on 2024-11-27

The image depicts a crowded and chaotic scene, with a group of people sitting and standing in close proximity to one another. The people appear to be men wearing suits and coats, suggesting a formal or professional setting. Some of the men look distressed or weary, while others appear to be engaged in conversation. There is a sense of tension and unease in the atmosphere. Several suitcases and other personal belongings are visible, suggesting this may be a transportation-related setting, such as a train station or airport.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-23

This image is a black-and-white photograph of a crowded theater or auditorium, with people seated in rows of chairs. The majority of the individuals are dressed in formal attire, consisting of suits and ties for the men, and dresses or skirts for the women. Notably, many of the people appear to be in a state of distress or discomfort, with some covering their mouths or looking away from the camera.

The overall atmosphere of the image suggests that something unpleasant or disturbing is occurring, possibly related to the event or performance being held in the theater. The formal dress code and the reactions of the audience members imply that this may have been a significant or high-profile event, and the photographer's capture of the scene provides a glimpse into a moment of tension or unease.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-25

The image depicts a black-and-white photograph of a crowded theater or auditorium, with people seated in rows of chairs. The audience appears to be engaged in a performance or presentation, with some individuals looking at the stage or speaker, while others are turned away or appear to be in conversation with each other.

The atmosphere in the image is one of anticipation and engagement, with the audience fully focused on the event unfolding before them. The use of black and white photography adds a sense of timelessness and nostalgia to the image, evoking a bygone era when such events were a common part of everyday life. Overall, the image captures a moment in time when people came together to share in a collective experience, and it serves as a reminder of the power of live performances to bring people together and create lasting memories.