Machine Generated Data

Face analysis

AWS Rekognition

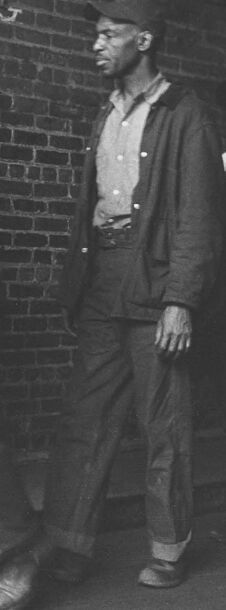

| Attribute | Value |
| --- | --- |
| Age | 57-77 |
| Gender | Male, 53.6% |
| Angry | 45.1% |
| Sad | 51% |
| Confused | 45.2% |
| Happy | 48.3% |
| Disgusted | 45.2% |
| Surprised | 45.1% |
| Calm | 45.1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.8% |

Categories

Imagga

created on 2018-03-23

| Category | Confidence |
| --- | --- |
| people portraits | 69.6% |
| paintings art | 16.7% |
| events parties | 8.9% |
| streetview architecture | 1.6% |
| interior objects | 0.9% |
| text visuals | 0.7% |
| food drinks | 0.5% |
| pets animals | 0.4% |
| cars vehicles | 0.3% |
| nature landscape | 0.3% |

Captions

Microsoft

created by unknown on 2018-03-23

| Caption | Confidence |
| --- | --- |
| a group of people posing for a photo | 96.8% |
| a man standing in front of a group of people posing for a photo | 91.4% |
| a group of people posing for a picture | 91.3% |

Salesforce

Created by general-english-image-caption-blip-2 on 2025-06-27

a group of men standing in front of a brick wall

Created by general-english-image-caption-blip on 2025-05-05

a photograph of a group of men standing in front of a brick wall

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-08

This image shows a group of men standing and walking near a brick wall. The wall features chalk writing that reads "NO SMOKING INSIDE." The men are dressed in workwear, including jackets, overalls, and shirts, suggesting a factory or industrial setting. One individual on the left appears to be holding paperwork, possibly engaged in conversation or conducting an inspection. The scene gives an impression of daily life in a workplace environment.

Created by gpt-4o-2024-08-06 on 2025-06-08

The image depicts several individuals standing and walking against a brick wall in an industrial setting. The wall contains a prominent handwritten sign that reads "NO SMOKING INSIDE THE GATE." Most of the individuals are wearing workwear, such as jackets, shirts, and overalls, suggesting they are workers or laborers. One person is holding a book or notepad and appears to be engaged in conversation with another individual. The overall scene seems to capture a moment of interaction and movement in a work environment.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-24

This appears to be a black and white historical photograph showing a group of men standing against a brick wall. The image appears to be from the mid-20th century, based on the clothing styles. The men are wearing casual work clothes, including jackets, caps, and work pants. One man on the left is wearing glasses and a lighter-colored suit jacket. The group includes both Black and white individuals, which is notable given the era when this photo was likely taken. There appears to be some writing or markings on the brick wall behind them. The overall composition suggests this might be outside a workplace or industrial setting.

Created by claude-3-haiku-20240307 on 2024-12-29

The image depicts a group of people standing in front of a brick wall with graffiti that says "No booking inside". The group consists of several men, some wearing hats, overalls, and other working-class attire, suggesting they may be laborers or working-class individuals. The lighting and overall aesthetic suggest this is an older, black-and-white photograph, likely from an earlier era. The image conveys a sense of working-class struggle or social commentary, with the graffiti on the wall hinting at some kind of issue or conflict.

Created by claude-3-opus-20240229 on 2024-12-29

The black and white image shows a group of six men standing together outside a brick building. The building has a sign that says "No smoking inside". The men are wearing hats, jackets, and overalls typical of working class attire from an earlier era, likely the early to mid 20th century based on their clothing style. One man is gesturing as if explaining or demonstrating something to the others. The setting and their posed stance makes this seem like it could be a candid shot capturing a moment of conversation or interaction between the men, perhaps coworkers or acquaintances, rather than a formally posed photograph.

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This appears to be a historical black and white photograph, likely from the mid-20th century. The image shows a group of men standing against a brick wall with text that reads "NO SMOKING" painted on it. The men are dressed in work clothes typical of the era - overalls, work shirts, and caps. On the left is someone in a suit and hat who appears to be taking notes or recording something. The setting suggests this might be at a factory, warehouse, or industrial workplace. The composition and style of the photograph has a documentary or photojournalistic quality to it, possibly capturing a moment related to labor or working conditions of the time period.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-31

This image depicts a black-and-white photograph of a group of men standing in front of a brick wall. The man on the left is dressed in a suit and hat, holding a cigarette in his right hand. To his right stands another man wearing a plaid shirt and a cap, while three more men are positioned to the right, each with distinct attire. The man on the far right is partially obscured by the others.

In the background, a brick wall features white chalk writing that reads "No Smoking Inside Gate." The overall atmosphere of the image suggests a workplace or industrial setting, possibly during the mid-20th century.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-31

The image depicts a group of men standing outside a brick building, with one man smoking and another holding a clipboard. The men are dressed in casual attire, with some wearing hats and jackets. The background features a brick wall with the words "NO SMOKING INSIDE" written on it in white paint.

- A group of men are standing outside a brick building.

- The men are dressed in casual attire, with some wearing hats and jackets.

- One man is smoking, while another is holding a clipboard.

- The background features a brick wall with the words "NO SMOKING INSIDE" written on it in white paint.

- The men are standing in front of a brick wall with the words "NO SMOKING INSIDE" written on it in white paint.

- The wall is made of dark-colored bricks and has a rough texture.

- The words "NO SMOKING INSIDE" are written in bold, white letters.

- One man is smoking, while another is holding a clipboard.

- The man smoking is wearing a hat and a jacket, and is standing with his feet shoulder-width apart.

- The man holding the clipboard is wearing a hat and a shirt, and is standing with his arms crossed.

- The men are all looking at the man smoking.

- They appear to be watching him with interest or concern.

- Some of the men are leaning against the wall or standing with their feet shoulder-width apart.

Overall, the image suggests that the men are waiting for something to happen or are taking a break from their work. The presence of the "NO SMOKING INSIDE" sign on the wall implies that they are not allowed to smoke inside the building, and are instead smoking outside.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-30

A black-and-white photo of a group of men standing on the side of a brick wall. The man in front, wearing a hat, is holding a pen and paper and is writing something. Behind him is a man wearing a hat and a bow tie, holding a pen and paper. The other men are wearing hats and some of them are wearing glasses. The wall has words written on it.

Created by amazon.nova-lite-v1:0 on 2025-05-30

The image is a black-and-white photograph of a group of men standing outside, likely in a public space. They are standing in front of a brick wall with graffiti that reads "No Smoking Inside." The men are dressed in hats, coats, and shoes, suggesting that it might be a colder season. One man, wearing a bow tie, is holding a book or notebook and appears to be talking to another man, who is holding a cigarette. The other men are standing behind them, looking in different directions. The image captures a moment of conversation and interaction among the men, with the graffiti on the wall providing a sense of context for their location.

Google Gemini

Created by gemini-2.0-flash on 2025-05-05

Here's a description of the image:

General Description:

The image is a black and white photograph featuring a group of men standing or walking along a brick wall. The style and clothing suggest it was taken sometime in the mid-20th century.

Specific Details:

People: The group consists of several men with varying ages and appearances.

To the left, a man in a suit, glasses, a hat, and a bow tie appears to be taking notes on a clipboard.

Next to him, a younger man in a newsboy cap and a plaid shirt is speaking with the man on the left.

Another older man in a cap is walking forward in work clothes.

The rest of the men are lining up against the wall.

Setting: The background is dominated by a brick wall with the words "NO SMOKING INSIDE" painted on it.

Lighting: The image has a distinct contrast, with brighter areas illuminating the men and the wall, and darker shadows in the background.

Overall Impression:

The photograph evokes a sense of industrial or working-class life from the past. It could potentially depict workers outside a factory or workplace. The man taking notes adds an element of documentation or observation to the scene.

Created by gemini-2.0-flash-lite on 2025-05-05

Here's a description of the image:

Overall Impression:

The image is a black and white photograph, likely taken in the mid-20th century, capturing a moment on the street, possibly near a factory or industrial site. The scene appears to be one of everyday life, with a focus on the working class.

Composition and Setting:

- Location: The setting is an exterior scene, possibly near a brick building.

- Lighting: The lighting is a mix of natural and possibly artificial light. The background appears to fade into shadow, suggesting the entrance to a building.

- Foreground: The group of people is in the foreground.

People and Activity:

- Main Focus: There are several men in the image. Some appear to be workers, dressed in work clothes (overalls, simple shirts, caps).

- Interaction: One man, wearing a suit and glasses, is holding a notepad and pen, seemingly taking notes or checking something. It is likely the man taking notes is conducting an interview, taking a census, or taking notes on who is in the area.

- Expressions: The men have various expressions on their faces. They appear mostly neutral, with a sense of weariness or reserve.

Details and Observations:

- Signage: Part of a sign is visible on the brick wall: "NO SMOKING INSIDE".

- Clothing: The dress of the people suggests a time period when casual work clothing was common.

- Overall Tone: The image has a documentary or candid feel to it, capturing a slice of life from the past. The style is simple, direct, and gives a sense of realism.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-29

The image is a black-and-white photograph depicting a group of men standing outside a brick building. The wall behind them has a hand-painted sign that reads "No Smoking Inside This Site." The men are dressed in work clothes typical of mid-20th century laborers, including overalls, work shirts, and hats.

From left to right:

- The first man is wearing a suit and hat, holding a cigarette, and appears to be in conversation with another man.

- The second man is wearing a plaid shirt and a cap, standing with his hands in his pockets.

- The third man, slightly blurred, is wearing a work shirt and pants, with a cap, and is walking past the group.

- The fourth man is dressed in a work shirt and pants, with a cap, and is looking towards the camera.

- The fifth man is wearing overalls and a cap, standing with his hands in his pockets.

- The sixth man is wearing a vest over a shirt and pants, with his hands also in his pockets.

The overall setting suggests a break time or a moment of rest for these workers, possibly at a construction or industrial site. The clothing and the "No Smoking" sign indicate a period setting, likely from the mid-20th century.