Machine-Generated Data

Tags

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 25-39 |
| Gender | Male, 53.4% |
| Calm | 90.1% |
| Sad | 5.9% |
| Surprised | 2.1% |
| Confused | 0.7% |
| Fear | 0.5% |
| Happy | 0.3% |
| Angry | 0.3% |
| Disgusted | 0.1% |

Feature analysis

Amazon

Clarifai

AWS Rekognition

| Label | Confidence |
|---|---|
| Person | 99.7% |

Categories

Imagga

Created on 2021-12-14

| Category | Confidence |
|---|---|
| paintings art | 88.7% |
| food drinks | 6.1% |
| interior objects | 4.1% |

Captions

Microsoft

Created by unknown on 2021-12-14

| Caption | Confidence |
|---|---|
| a man standing in front of a television | 82.1% |
| a man standing in front of a television screen | 79.6% |
| a screen shot of a man | 79.5% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-12

photograph of a man cooking in a restaurant.

Salesforce

Created by general-english-image-caption-blip on 2025-05-04

a photograph of a man is preparing to make a clay potted piggy

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-10

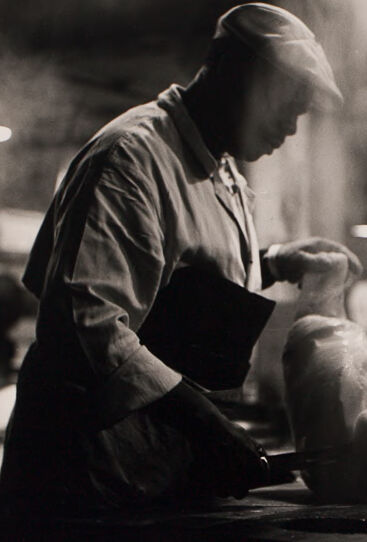

This black-and-white photograph depicts a worker, wearing a cap and apron, engaged in processing poultry in what appears to be an industrial setting, possibly a meat-packing or food processing factory. The worker is focused on handling the carcass of a bird, which lies on a table. The background is dimly lit, with other workers and industrial lamps creating an atmospheric, smoky environment. The composition highlights the labor-intensive nature of the scene, with dramatic contrasts in light and shadow emphasizing the worker's silhouette and movements.

Created by gpt-4o-2024-08-06 on 2025-06-10

The image is a black and white photograph depicting a dimly lit interior scene of a meat processing facility. The focal point of the photo is a person wearing a hat, standing at a table. The individual is in profile, with their face turned away, focused on their task. They are wearing a light-colored long-sleeve shirt or coat and an apron. The individual is holding a whole plucked chicken, poised on the table as if involved in the process of preparing or inspecting it. The background shows an industrial setting with additional workers and overhead lighting. The overall atmosphere is one of concentration and manual labor.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-15

The image shows a black and white photograph of a person, likely a chef or cook, working in a kitchen. The person is wearing a white uniform and is hunched over a surface, intently focused on their task. The image has a moody, atmospheric quality with dramatic lighting and shadows creating a sense of intensity and concentration in the scene.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-15

This is a black and white photograph that appears to be taken in an industrial or workshop setting. The image shows a worker wearing what looks like work clothes and a cap, bent over and focused on their task. The lighting creates a dramatic effect, with the figure silhouetted against a darker background. There are some lights visible in the background, creating spots of illumination. The composition suggests this might be from a documentary or artistic series about industrial workers or craftsmen. The atmospheric quality of the photograph, with its play of light and shadow, gives it an artistic, almost film noir quality.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of a man working in a factory, likely taken in the early 20th century.

- The man is wearing a cap and a long-sleeved shirt.

- He is standing at a workbench, with his hands resting on the surface.

- His head is bowed, and he appears to be focused on his task.

- On the workbench in front of him is a large, cylindrical object that appears to be made of metal.

- The object has a rounded top and a flat bottom.

- It is unclear what the object is used for, but it may be a piece of machinery or a tool.

- In the background, there are several other people working in the factory.

- They are all wearing similar clothing to the man in the foreground.

- The room is dimly lit, with only a few lights visible.

- The atmosphere appears to be one of industry and productivity, with the workers focused on their tasks.

Overall, the image provides a glimpse into the daily lives of factory workers in the early 20th century. It highlights the hard work and dedication required to perform manual labor, as well as the importance of teamwork and collaboration in achieving common goals.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

This image is a black-and-white photograph of a man in a butcher shop, likely from the early 20th century. The man, dressed in a white shirt and apron, is intently focused on his work, with his head bowed and hands engaged in the task at hand. He appears to be handling a piece of meat, possibly a chicken or turkey, which lies on a table in front of him.

In the background, another man can be seen, also dressed in a white shirt and hat, although his features are not as clearly defined due to the distance and lighting. The atmosphere of the image suggests a busy and industrious environment, with the men working diligently to prepare meat for sale or consumption.

The overall mood of the image is one of concentration and dedication, as the men go about their daily tasks with precision and care. The use of black and white photography adds a sense of timelessness and nostalgia to the image, evoking a bygone era when life was simpler and more labor-intensive.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-05

The image is a black-and-white photograph of a scene inside a factory. The photograph features a man who is working on a machine, likely a lathe or a similar tool. He is wearing a white apron and a hat, and he is holding a tool in his hand. The man is focused on his work, and the image captures a moment of concentration and dedication to his task. In the background, there are other workers who are also busy with their own tasks, and the factory is filled with the sounds of machinery and the smell of metal and oil. The photograph is a testament to the hard work and dedication of the workers who built and maintained the machines that powered the industrial revolution.

Created by amazon.nova-pro-v1:0 on 2025-06-05

The image is a black-and-white photograph of a factory setting. It appears to be a busy work environment, with several workers engaged in their tasks. The main focus of the image is on a worker in the foreground, who is dressed in a long-sleeved shirt and a cap. He is holding a tool, possibly a saw, and is working on a piece of material, which could be a piece of meat or a similar object. Another worker in the background is also engaged in a similar task. The factory is well-lit, with bright lights illuminating the workspace. The overall atmosphere of the image is one of hard work and productivity.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-02

Here's a description of the image:

Overall Impression:

The image is a black and white photograph, likely taken in an industrial or commercial kitchen setting. It evokes a sense of work, possibly manual labor. The lighting creates a dramatic effect, with strong contrasts between light and shadow.

Detailed Description:

- Subject: The primary subject is a man, likely a butcher or worker in a meat processing plant, who is standing at a worktable.

- Action: He is holding a large object, which looks like a cut piece of meat. He appears to be working on it with a knife, a cutting board or a similar tool.

- Environment: The setting is dimly lit with visible steam or mist in the air. There is another person in the background. It looks like a commercial kitchen or a food processing facility.

- Composition: The composition is well-balanced, with the main figure in the foreground, set against the backdrop of the workplace. The use of light and shadow adds depth and draws the viewer's eye to the focal point.

- Style: The photograph is likely a documentary-style photo, capturing a candid moment of the worker at work.

Overall, it's a compelling image capturing a moment of labor in a work setting, with a strong focus on the subject and the work being done.

Created by gemini-2.0-flash on 2025-05-02

Here's a description of the image:

The black and white photograph captures a scene in what appears to be a poultry processing or meat-packing plant. A man wearing a cap and a light-colored coat stands at a work surface. He's holding a cleaver and seems to be processing a chicken. Steam or mist is rising in the background, partially obscuring the view and contributing to the atmospheric quality of the image.

In the background, there are more workers visible, all dressed in similar attire, suggesting a production line or a shared workspace. Overhead, there are several light fixtures that contribute to the lighting of the scene.

The composition is focused on the primary worker and the task he's performing, with the background figures and details providing context to the industrial setting. The image has a somewhat gritty and documentary feel, typical of industrial photography.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image is a black and white photograph depicting a person engaged in the process of shaping or crafting a piece of pottery. The individual is seated and appears to be using a pottery wheel, with their hands actively working on a clay object. The person is wearing a light-colored shirt and a cap, and their face is not clearly visible, as the focus is on their hands and the pottery piece.

In the background, there is another person, also wearing a cap, who seems to be engaged in a similar activity, though they are not the primary focus of the image. The setting appears to be a workshop or studio, with overhead lights illuminating the space. The atmosphere suggests a place of craftsmanship and artistry, likely a pottery workshop or studio where multiple artisans are working on their creations.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-06-30

This is a black-and-white photograph depicting a worker in an industrial or factory setting. The individual is wearing a cap and a long-sleeved shirt, and is focused on a task, possibly handling or preparing a piece of machinery or an object on a workbench. The background shows another worker, partially out of focus, and additional equipment or machinery, suggesting a busy and possibly noisy industrial environment. The lighting in the photograph emphasizes the worker in the foreground, creating a sense of depth and focus on their activity. The overall atmosphere of the image conveys a sense of industry, labor, and concentration.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-06-30

This black-and-white photograph appears to depict a scene from an industrial or manufacturing setting, possibly a foundry or a workshop. The main subject is a man wearing a light-colored shirt and a cap, who is working intently on a mold or a casting. He is using a tool, likely a chisel or a similar implement, to work on the surface of the mold. The mold itself seems to be made of a light material, possibly clay or plaster, and is shaped like a human figure.

In the background, another person, also wearing a cap and a light-colored shirt, is sitting and appears to be observing or working on something else. The background is somewhat blurred, with several light sources creating a hazy or smoky atmosphere, suggesting the presence of heat, steam, or dust typical of an industrial environment. The overall composition and lighting give the image a dramatic and focused feel, emphasizing the concentration and skill of the workers.