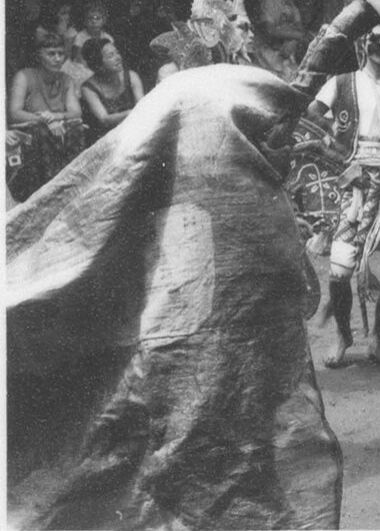

Machine Generated Data

Tags

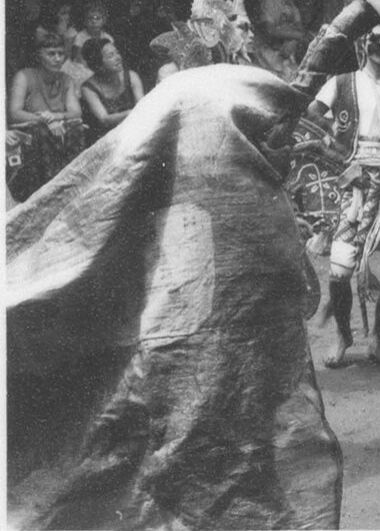

Amazon

created on 2023-10-05

| Tag | Confidence (%) |
|---|---|
| Adult | 97.4 |
| Male | 97.4 |
| Man | 97.4 |
| Person | 97.4 |
| Adult | 97.2 |
| Male | 97.2 |
| Man | 97.2 |
| Person | 97.2 |
| Adult | 96.7 |
| Person | 96.7 |
| Bride | 96.7 |
| Female | 96.7 |
| Wedding | 96.7 |
| Woman | 96.7 |
| Person | 95.1 |
| Male | 94.4 |
| Person | 94.4 |
| Boy | 94.4 |
| Child | 94.4 |
| Person | 94.4 |
| Person | 94.1 |
| Person | 92.7 |
| Person | 92.5 |
| Adult | 88.9 |
| Person | 88.9 |
| Bride | 88.9 |
| Female | 88.9 |
| Woman | 88.9 |
| Person | 80.1 |
| Face | 78 |
| Head | 78 |
| Stilts | 69.9 |
| Outdoors | 69.5 |
| Person | 66.3 |
| People | 63 |
| Weapon | 57.4 |
| Hula | 56.2 |
| Toy | 56.2 |
| Sword | 55.9 |
| Electrical Device | 55.9 |
| Microphone | 55.9 |

Clarifai

created on 2018-05-10

| Tag | Confidence (%) |
|---|---|
| people | 100 |
| group together | 99.5 |
| many | 99.3 |
| group | 98 |
| military | 96.9 |
| man | 95.6 |
| soldier | 95.3 |
| adult | 94.3 |
| wear | 92.7 |
| outfit | 90.7 |
| war | 89.3 |
| uniform | 88.1 |
| weapon | 87.8 |
| leader | 87 |
| administration | 86.5 |
| military uniform | 83.3 |
| crowd | 83.2 |
| skirmish | 82 |
| recreation | 79.2 |
| spectator | 76.9 |

Imagga

created on 2023-10-05

| Tag | Confidence (%) |
|---|---|
| weapon | 30.9 |
| man | 25.5 |
| protection | 19.1 |
| military | 18.3 |
| male | 17 |
| mask | 16.5 |
| danger | 16.4 |
| clothing | 15.9 |
| city | 15.8 |
| person | 15.7 |
| soldier | 15.6 |
| people | 15.1 |
| adult | 14.9 |
| destruction | 14.7 |
| urban | 14 |
| sport | 13.6 |
| device | 13.6 |
| industrial | 12.7 |
| harness | 12.7 |
| accident | 12.7 |
| toxic | 12.7 |
| protective | 12.7 |
| dirty | 12.6 |
| nuclear | 12.6 |
| gas | 12.5 |
| horse | 12.3 |
| smoke | 12.1 |
| outdoors | 11.9 |
| radioactive | 11.8 |
| radiation | 11.7 |
| chemical | 11.6 |
| uniform | 11.5 |
| travel | 11.3 |
| gun | 11.2 |
| stalker | 10.9 |
| disaster | 10.7 |
| statue | 10.7 |
| war | 10.6 |
| instrument | 10.5 |
| rifle | 10.3 |
| safety | 10.1 |
| camouflage | 10 |
| suit | 9.9 |
| activity | 9.8 |
| history | 9.8 |
| building | 9.5 |
| power | 9.2 |
| world | 8.9 |
| battle | 8.8 |
| support | 8.8 |
| men | 8.6 |
| industry | 8.5 |
| outdoor | 8.4 |
| old | 8.4 |
| dark | 8.3 |
| bow and arrow | 8.1 |
| bow | 8.1 |
| sword | 8.1 |
| protect | 7.7 |
| sculpture | 7.6 |
| historical | 7.5 |
| leisure | 7.5 |
| tourism | 7.4 |
| environment | 7.4 |
| vacation | 7.4 |
| street | 7.4 |
| historic | 7.3 |
| engineer | 7.2 |
| black | 7.2 |
| transportation | 7.2 |
| architecture | 7 |

Google

created on 2018-05-10

| Tag | Confidence (%) |
|---|---|
| black and white | 89.3 |
| monochrome photography | 68.5 |
| history | 68.2 |
| troop | 58.4 |
| pole | 56.3 |
| monochrome | 55.9 |
| stock photography | 54.8 |
| middle ages | 53.5 |
| recreation | 51.7 |

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-31 |
| Gender | Male, 93.4% |
| Calm | 97% |
| Surprised | 6.4% |
| Fear | 6.1% |
| Sad | 2.5% |
| Confused | 0.3% |
| Angry | 0.2% |
| Disgusted | 0.2% |
| Happy | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-30 |
| Gender | Female, 67.1% |
| Sad | 99% |
| Calm | 35.2% |
| Surprised | 6.5% |
| Fear | 6% |
| Confused | 2.5% |
| Happy | 1.7% |
| Angry | 0.4% |
| Disgusted | 0.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 21-29 |
| Gender | Female, 78.8% |
| Calm | 98.3% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.3% |
| Happy | 0.6% |
| Disgusted | 0.4% |
| Confused | 0.1% |
| Angry | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-31 |
| Gender | Female, 98.7% |
| Happy | 78.7% |
| Surprised | 8.3% |
| Fear | 7.7% |
| Angry | 5.9% |
| Sad | 4.3% |
| Calm | 1% |
| Disgusted | 1% |
| Confused | 0.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 4-10 |
| Gender | Female, 98.6% |
| Happy | 92.9% |
| Surprised | 6.5% |
| Fear | 6.3% |
| Sad | 3.6% |
| Calm | 0.9% |
| Confused | 0.4% |
| Disgusted | 0.3% |
| Angry | 0.3% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 29 |
| Gender | Male |

Feature analysis

Amazon

Adult

Male

Man

Person

Bride

Female

Woman

Boy

Child

| Feature | Confidence |
|---|---|
| Adult | 97.4% |
| Adult | 97.2% |
| Adult | 96.7% |
| Adult | 88.9% |
| Male | 97.4% |
| Male | 97.2% |
| Male | 94.4% |
| Man | 97.4% |
| Man | 97.2% |
| Person | 97.4% |
| Person | 97.2% |
| Person | 96.7% |
| Person | 95.1% |
| Person | 94.4% |
| Person | 94.4% |
| Person | 94.1% |
| Person | 92.7% |
| Person | 92.5% |
| Person | 88.9% |
| Person | 80.1% |
| Person | 66.3% |
| Bride | 96.7% |
| Bride | 88.9% |
| Female | 96.7% |
| Female | 88.9% |
| Woman | 96.7% |
| Woman | 88.9% |
| Boy | 94.4% |
| Child | 94.4% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 40.7% |
| nature landscape | 31% |
| streetview architecture | 12.2% |
| pets animals | 5.7% |
| interior objects | 4.3% |
| people portraits | 2.3% |
| beaches seaside | 1.9% |

Captions

Microsoft

created on 2018-05-10

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people posing for the camera | 95.4% |
| a vintage photo of a group of people posing for a picture | 95.3% |
| a group of people posing for a photo | 95.2% |