Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 11-19 |
| Gender | Female, 100% |
| Sad | 70.9% |
| Disgusted | 17.2% |
| Confused | 3.6% |
| Angry | 3.5% |
| Calm | 3.2% |
| Fear | 1% |
| Happy | 0.3% |
| Surprised | 0.3% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 99.3% |

Categories

Imagga

created on 2022-01-09

| Category | Confidence |
| --- | --- |
| food drinks | 91.6% |
| people portraits | 4.5% |
| paintings art | 2.5% |

Captions

Microsoft

created by unknown on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a person holding an apple | 29.4% |
| a close up of a person holding an apple | 26% |
| a person standing in front of the apple | 25.9% |

Clarifai

Created by general-english-image-caption-clip on 2025-07-13

a boy with a basket of potatoes.

Salesforce

Created by general-english-image-caption-blip on 2025-05-22

a photograph of a boy is sitting on a table with apples

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-14

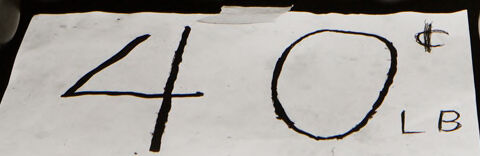

The image depicts a display of ripe tomatoes arranged on a table. In front of the tomatoes is a handwritten sign that reads "40¢ LB," indicating the price per pound. The background features a rustic outdoor setting with what appears to be a shed or building and a patterned fabric.

Created by gpt-4o-2024-08-06 on 2025-06-14

The image depicts a black and white photograph of a table or countertop covered with an assortment of tomatoes. In front of the tomatoes, there is a handwritten sign displaying the price "40¢ LB," indicating that the tomatoes are being sold at 40 cents per pound. The setting appears to be outdoors, possibly at a market or stand, as suggested by the presence of what looks like a camouflage-patterned cloth and a structure in the background. The image captures a rustic and simple market scene.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-16

The image shows a young boy sitting amidst a large pile of tomatoes or similar round produce. The boy has a serious expression on his face as he rests his arms on the tomatoes. A sign in front of the produce indicates the price is 40 cents per pound. The image is in black and white, giving it a classic, timeless feel. The setting appears to be an outdoor market or farm stand.

Created by us.anthropic.claude-3-opus-20240229-v1:0 on 2025-06-16

The black and white image shows a young shirtless boy sitting behind a display of many round objects, likely fruits such as apples or oranges, priced at 40 cents per pound according to the handwritten sign in front of them. The boy has short dark hair and a serious expression as he looks directly at the camera. The setting appears to be a rustic outdoor market or stand, with wooden structures visible in the background.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-16

This is a black and white photograph showing a young person at what appears to be a produce stand or market. They are leaning over a large display of round, smooth fruits or vegetables (possibly tomatoes) with a sign in the foreground that reads "40¢ LB". The subject has a bowl-cut hairstyle and is wearing a sleeveless top. The image has a documentary or photojournalistic quality to it, capturing a moment of everyday life, possibly from several decades ago based on the style and pricing. The lighting and composition create an engaging portrait that draws attention to both the subject and the carefully arranged produce.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-31

The image is a black-and-white photograph of a young boy standing behind a table of tomatoes at an outdoor market. The boy has short hair and is shirtless, with his arms resting on the table. The table is filled with large, round tomatoes, and a sign in front of them reads "40¢ LB" in handwritten letters. In the background, there are other tables and a building, suggesting a bustling outdoor market scene.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-31

The image is a black-and-white photograph of a young boy sitting behind a table with tomatoes. The boy has short hair and is shirtless, leaning on the table with his arms crossed. In front of him are several tomatoes arranged in rows, with a sign that reads "40¢ LB" in black marker on a piece of paper. The background appears to be a yard or outdoor area, with a table covered in a patterned cloth and other objects visible. The overall atmosphere suggests a casual, informal setting, possibly a farmer's market or roadside stand.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-30

The black-and-white image depicts a young boy with short hair, wearing a sleeveless shirt, and leaning on a table filled with round, shiny objects that resemble tomatoes. The boy's face appears to be in a serious expression, and he is looking at something. There is a sign with the text "40 LB" written on it, which is placed on top of the table. Behind the boy, there are some items that are blurry.

Created by amazon.nova-lite-v1:0 on 2025-05-30

A black and white image shows a young boy sitting behind a table of tomatoes. The boy is shirtless and has short hair. He is looking at the camera with a serious expression. The table is filled with tomatoes and has a handwritten price tag that reads "40 LB." There is a piece of paper with the number "40" on it. Behind the boy, there is a blurry background of a bed with a patterned cover.

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image.

The black and white photograph features a young boy selling tomatoes at what appears to be a roadside stand. He's positioned behind a table or counter covered in ripe tomatoes, which are the focal point in the foreground. A sign in front of the tomatoes reads "40 ¢ LB" in bold, handwritten lettering.

The boy has a serious, perhaps slightly melancholic expression. He has short, straight hair that falls across his forehead. His arms are bare, and he rests them on the table, giving the impression that he's been working for a while or is tired.

In the background, there's a hint of a rural setting. To the left, a table with a patterned tablecloth is visible, possibly displaying other items for sale. Behind the boy, there's a structure with what appears to be some gardening tools or equipment, suggesting a rural or agricultural context.

The black and white composition adds a timeless, somewhat documentary feel to the image, evoking a sense of simplicity and perhaps even the challenges of rural life.

Created by gemini-2.0-flash-lite on 2025-05-17

Here's a description of the image:

This is a black and white photograph featuring a young boy standing behind a table of tomatoes. The boy appears to be around 10-12 years old, with short hair and a serious expression on his face. He's leaning on the table, shirtless, with his arm resting on the surface.

In the foreground, the table is laden with a large pile of tomatoes. A handwritten sign in front of the tomatoes reads "40¢ LB", suggesting that he is selling them. The background is somewhat blurred, but hints at an outdoor setting, possibly a farmer's market or roadside stand. The overall tone of the photograph is simple and direct, capturing a moment of rural life or entrepreneurial endeavor.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-02

The image is a black-and-white photograph depicting a young boy sitting behind a large pile of tomatoes. The boy is shirtless and appears to be at an outdoor market or a similar setting. He has short hair and is looking directly at the camera with a neutral expression.

In front of him, there is a handwritten sign that reads "40¢ LB," indicating that the tomatoes are being sold for 40 cents per pound. The tomatoes are arranged in a large heap, and the boy is leaning on the table or surface where the tomatoes are displayed.

The background shows some additional market items, possibly more produce, and a person who is partially visible. The setting suggests a casual, possibly rural or small-town market environment. The overall mood of the image conveys a sense of simplicity and everyday life.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-10

The image is a black-and-white photograph of a young boy sitting behind a table filled with tomatoes. The boy has short, wavy hair and is shirtless, looking directly at the camera with a neutral expression. The table is covered with a large number of tomatoes, and in front of them, there is a handwritten sign that reads "40¢ LB," indicating that the tomatoes are being sold at a price of 40 cents per pound. The background appears to be an outdoor setting, possibly a market or farm area, with some blurred objects and structures visible. The overall tone of the image has a vintage feel.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-10

This black-and-white photograph shows a shirtless boy sitting behind a table filled with large, round fruits or vegetables, possibly tomatoes. The boy has short, tousled hair and is looking directly at the camera with a serious expression. His arms are resting on the table, and his hands are clasped together. In the foreground, there is a handwritten sign on the table that reads "40¢ LB," indicating the price of the produce. The background appears to be an outdoor setting with some trees and a structure, possibly a shed or a tent, visible. The overall atmosphere of the image is somewhat rustic and candid.