Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 7-17 |
| Gender | Female, 97.2% |
| Sad | 7% |
| Fear | 0.1% |
| Angry | 0.1% |
| Disgusted | 0.1% |
| Surprised | 0% |
| Calm | 91.4% |
| Confused | 0.2% |
| Happy | 1% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 97.3% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 80.9% |
| streetview architecture | 18.4% |

Captions

Microsoft

Created on 2019-10-29

| Caption | Confidence |
| --- | --- |
| a vintage photo of a person | 77.7% |
| a vintage photo of a person holding a book | 48.7% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-01-29

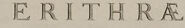

The image is a classical-style illustration titled "Sibilla Erithrėa," depicting the Erythraean Sibyl, a figure from ancient Greek and Roman mythology. The Sibyl is shown seated on a stone bench, draped in flowing, classical robes. She is holding a large book that rests on a table, open to a page with writing. Behind her, a small winged figure appears to be assisting by holding an object, possibly a horn or musical instrument. The composition emphasizes the Sibyl's significant role as a prophetic figure, with an air of contemplation and importance in her posture and surrounding elements.

Created by gpt-4 on 2025-01-29

This image is a greyscale illustration, likely an engraving, depicting a classical scene. It features a figure seated on a stone bench, draped in flowing classical robes, with one foot resting on the ground and the other elevated on the structure. The seated figure's attire suggests Roman or Grecian fashion, with folds meticulously detailed. To the figure's side, an open book rests on a draped table or pedestal. Above this book hovers a cherubic figure, who appears to be in the act of either placing or removing a laurel wreath upon the head of the seated figure. The cherub has small, delicate wings and is dressed in a draped garment.

At the bottom, there are inscriptions that read "SIBILLA ERITHRAEA" followed by a Latin phrase that translates roughly to "The Eritrean Sibyl predicted the coming of Christ." This suggests that the illustration represents the Eritrean Sibyl, a legendary figure reputed to possess prophetic powers in classical antiquity. The work is reflective of neoclassical styles, which often revered and emulated the art and symbolism of ancient Greece and Rome. This piece seems to be a part of a larger collection or portfolio, potentially historical or educational in nature.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-01-29

This is a classical engraving titled "Sibilla Erithræa," depicting the Erythraean Sibyl, one of the famous prophetesses of ancient Greece. The artwork shows a seated figure in classical dress, wearing loose-fitting robes and a braided headdress. They are shown reading from a large book or manuscript that rests on a table. Above the figure is a small cherub or putti figure holding what appears to be a torch or flame. The image is rendered in a neoclassical style with careful attention to the draping of fabric and architectural details. The figure is seated on what appears to be a stone pedestal or platform. The overall composition is very formal and reminiscent of Renaissance art, particularly the works found in the Sistine Chapel. At the bottom of the print is text identifying it as being from the Vatican's collection.

Created by claude-3-opus-20240229 on 2025-01-29

The image depicts a classical sculpture or engraving titled "Sibilla Erithraea". It shows a seated woman in flowing robes holding an open book on her lap. A young child, possibly a cherub or angel, is reaching up towards the book from the woman's side. The artwork has a soft, detailed style reminiscent of Renaissance or Neoclassical art. The Latin inscription below the image translates to "Behold, a young Virgin shall conceive and bear a Son".

Created by claude-3-haiku-20240307 on 2025-01-29

The image appears to depict a classical or allegorical figure, likely representing the Cumaean Sibyl, who was one of the ancient Greek prophetic figures. The figure is seated in a contemplative pose, holding an open book or tablet, while a small winged figure, perhaps a cherub or spirit, hovers above offering some kind of inspiration or divine revelation. The scene has a dramatic and solemn tone, characteristic of neoclassical or academic art styles.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-17

The image depicts a sepia-toned engraving of a woman sitting on a bench, with a cherub holding a book above her head. The woman is dressed in a long, flowing robe and has her hair pulled back. She is seated on a bench, with her left leg crossed over her right. Her right arm is extended, and she appears to be reading from the book held by the cherub.

- The woman is wearing a long, flowing robe that drapes elegantly around her body.

- Her hair is pulled back, revealing her face and neck.

- She is seated on a bench, with her left leg crossed over her right.

- Her right arm is extended, and she appears to be reading from the book held by the cherub.

- The cherub is holding a book above the woman's head, with its left hand grasping the cover and its right hand turning the pages.

- The background of the image is a plain wall, with a subtle texture that adds depth to the scene.

- The overall atmosphere of the image is one of serenity and contemplation, with the woman lost in thought as she reads from the book.

In summary, the image depicts a serene and contemplative scene of a woman reading from a book held by a cherub. The use of sepia tones and the elegant lines of the woman's robe create a sense of timelessness and elegance, while the cherub adds a touch of whimsy and playfulness to the scene.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-17

This image is a black and white illustration of the Greek muse Sibylla Erithrea. She is depicted sitting on a stone bench, wearing a toga and a headband. Her right arm is extended, holding a book, while her left arm rests on her lap. A cherub is shown behind her, holding a quill pen and a circular object.

The background of the image features a wall with a table or desk, upon which the book rests. The overall atmosphere of the image suggests a sense of contemplation and creativity, as Sibylla Erithrea is often associated with prophecy and inspiration. The presence of the cherub adds a touch of whimsy and playfulness to the scene.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-29

The image is a black-and-white illustration of a woman sitting on a bench. She is wearing a robe and a crown, and she is holding a book. She is looking at the book, and her other hand is resting on her lap. The bench she is sitting on has a cloth draped over it. There is a baby in front of her, and the words "SIBILLA ERITHRAEA" are written at the bottom.

Created by amazon.nova-lite-v1:0 on 2025-01-29

The image is a black-and-white drawing of a woman sitting on a bench. She is holding a book and reading it. She is wearing a long dress and a headband. The book she is reading is open and has a lot of text on it. There is a small figure of a baby on top of the book. The drawing is titled "SIBILLA ERITHRA" and has a watermark on the bottom left corner.