Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-34 |
| Gender | Female, 99.3% |
| Sad | 2.5% |
| Surprised | 0.5% |
| Angry | 1.1% |
| Confused | 1.4% |
| Fear | 0.3% |
| Happy | 0.4% |
| Calm | 93.1% |
| Disgusted | 0.7% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 99% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.8% |

Captions

Microsoft

Created on 2019-11-16

| Caption | Confidence |
|---|---|
| an old photo of a person | 63.2% |
| a person in a white room | 59.3% |
| a person taking a selfie in a room | 57.3% |

OpenAI GPT

Created by gpt-4 on 2025-02-13

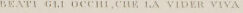

The image is a black and white print of a figure dressed in traditional attire. The individual is wearing a full-sleeved blouse with a bodice and a dark, possibly corseted waistcoat over it. Around the neck, there are multiple necklaces with medallion-like pendants. The clothing suggests a style that may be historical or indicative of a certain cultural dress, possibly from a European context. Below the figure, there is printed text that may indicate the title of the work or information about the subject or artist. The background and foreground are largely plain, focusing attention on the details of the costume and jewelry.

Created by gpt-4o-2024-05-13 on 2025-02-13

This image depicts an elegant historical portrait of a woman dressed in Renaissance-era attire. She is adorned in a garment featuring a square neckline with a laced bodice, puffed sleeves, and a decorative necklace with a pendant. Her head is covered with a veil or headpiece, and she carries an aura of poise and refinement. The portrait is framed with an inscription beneath it, and the overall artwork showcases intricate detailing typical of the period's style.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-02-13

The image appears to be a portrait of a woman. She is wearing a headdress and has long, flowing hair. The woman has a calm, serene expression on her face and is holding a necklace or ornament around her neck. The image seems to be a black and white engraving or etching, with a dark background framing the woman's portrait.

Created by claude-3-opus-20240229 on 2025-02-13

The image shows a portrait engraving of a young woman from the 16th century, with the text "Beati gli occhi che la vider viva" below, which translates to "Blessed are the eyes that saw her alive" in English. The woman has long, wavy hair parted in the middle and is wearing an ornate dress with puffed sleeves and a square neckline. She has a serene facial expression and appears to be gazing slightly to the side. The portrait is rendered in fine lines and crosshatching against a plain background.

Created by claude-3-5-sonnet-20241022 on 2025-02-13

This is a historical engraving showing a portrait of a Renaissance-era noblewoman. The artwork features a woman wearing typical 16th-century Italian fashion, including a detailed bodice with cross-lacing, puffed sleeves, and a light-colored head covering. She wears a necklace with what appears to be a pendant or brooch. The portrait is rendered in a classical Renaissance style with fine detail and shading. Below the image is an Italian inscription that reads "BEATI GLI OCCHI CHE LA VIDER VIVA" (Blessed are the eyes that saw her alive). The print appears to be professionally produced, likely from an academic or artistic collection.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-17

The image presents a portrait of a woman, likely from the Renaissance period, characterized by her attire and the style of the engraving. The woman is depicted wearing a head covering, a dress with a corset, and a necklace adorned with a pendant. Her right hand is placed on her chest, adding a sense of elegance to the overall composition. The engraving is rendered in a detailed and realistic manner, showcasing the artist's skill in capturing the subtleties of human features. The background of the image is a muted gray tone, which serves to accentuate the subject's features and create a sense of depth. At the bottom of the image, a caption is inscribed in Latin, reading "BEATI GLI OCCULTI, CHE LA VIDER VIVA." This phrase translates to "Blessed are those who have not seen and yet have believed." It is likely that this caption was added by the artist or the engraver to provide context or meaning to the portrait. Overall, the image presents a captivating and thought-provoking portrait of a woman from the Renaissance period, accompanied by a poignant caption that invites the viewer to reflect on the themes of faith and belief.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-17

This image depicts a vintage portrait of a woman, likely from the Renaissance era, set against a dark background. The woman is attired in a dress with a low neckline and puffed sleeves, complemented by a head covering and a necklace featuring a pendant. Her right hand is positioned over her chest, while her left hand rests on her stomach. The portrait is rendered in black and white, with the woman's attire and accessories detailed in a lighter shade. The overall effect is one of elegance and refinement, characteristic of the Renaissance style. At the bottom of the image, an inscription in Italian reads "BEATI GLI OCCHI, CHE LA VIDER VIVA," which translates to "Blessed are the eyes that saw her alive." This phrase suggests that the woman depicted in the portrait was considered beautiful and admired during her lifetime. The image appears to be a reproduction of an original artwork, possibly a painting or engraving, and is presented on a beige background with a gray border. Overall, the image exudes a sense of classic beauty and timeless elegance.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-13

This image depicts a portrait of a woman, likely a historical figure, dressed in a traditional and elegant attire from the past. The woman is shown wearing a white veil or headscarf, a necklace with a pendant, and a long-sleeved dress with intricate details. The portrait is set against a plain, white background, which helps to emphasize the subject and her attire. The image also includes a caption in a foreign language, possibly Italian, which translates to "Beati gli occhi che la vider viva," meaning "Blessed are the eyes that saw her alive." This suggests that the woman in the portrait was a significant and revered figure during her lifetime. The portrait is framed by a black border, adding a touch of elegance and sophistication to the overall composition. The image has a vintage or antique feel, possibly indicating that it is a reproduction of an older artwork or a photograph taken in the past. Overall, the image portrays a historical figure in a dignified and respectful manner, highlighting her beauty, elegance, and significance in her time. The caption and the portrait's composition suggest that the woman was admired and revered by those who saw her, and her legacy continues to be celebrated and remembered.

Created by amazon.nova-pro-v1:0 on 2025-02-13

The image is a black-and-white portrait of a woman. She is wearing a white veil that covers her head and falls over her shoulders. She is also wearing a necklace with a pendant. Her hands are clasped together in front of her chest, and she appears to be looking to the side. The image is framed by a dark border, and there is text below the portrait.