Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-35 |
| Gender | Male, 54.6% |
| Calm | 52.6% |
| Surprised | 45.1% |
| Happy | 45.1% |
| Sad | 45.5% |
| Angry | 46.2% |
| Fear | 45.1% |
| Disgusted | 45.2% |
| Confused | 45.1% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 99.3% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 51.8% |
| food drinks | 17.4% |
| cars vehicles | 14.9% |
| paintings art | 4.9% |
| pets animals | 4% |
| people portraits | 2.4% |
| nature landscape | 1.3% |
| streetview architecture | 1.3% |

Captions

Microsoft

Created on 2019-11-03

| Caption | Confidence |
| --- | --- |
| an old photo of a person | 62.6% |
| a group of people in a room | 62.5% |
| old photo of a person | 58.6% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-01-31

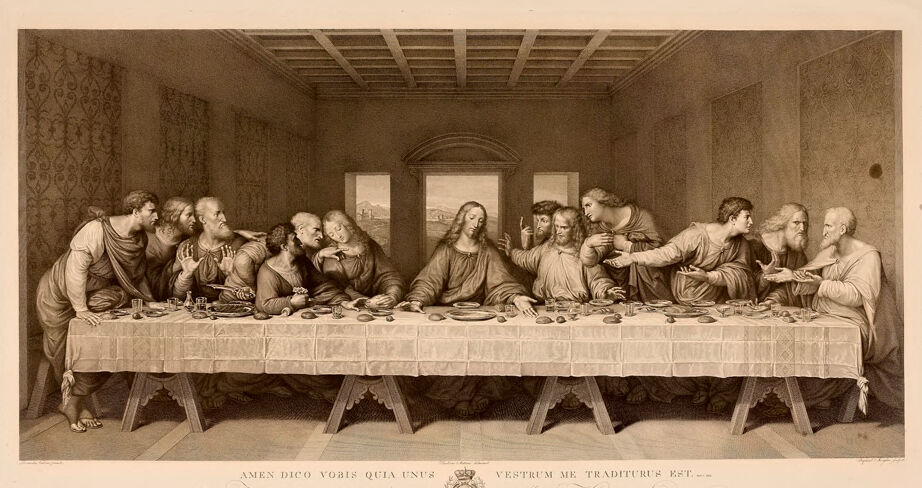

The image is a depiction of Leonardo da Vinci's famous mural painting, "The Last Supper." It shows Jesus Christ with his twelve disciples seated along a long table set for a meal. The atmosphere is tense and emotionally charged, capturing the moment when Jesus announces that one of his disciples will betray him. The painting is renowned for its composition, perspective, and the detailed expressions and postures of each figure, reflecting their varying reactions to the announcement. The room has a coffered ceiling and windows in the background, contributing to the sense of depth and space.

Created by gpt-4 on 2025-01-31

This image depicts a group of individuals seated at a long rectangular table, engaged in an animated and likely significant meal. The table is covered with a decorative tablecloth and has various plates, cups, and pieces of bread distributed on it. The individuals are dressed in garments that suggest a historical or religious context. The room features a coffered ceiling and decorated walls, with an open doorway or window visible in the background leading the view to an outdoor scene. There is text inscribed below the main depiction, suggesting that the image may have a specific title and possibly references to literary or historical texts. It appears to be an artwork, likely an engraving or reproduction of a famous painting.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-01-31

This is a reproduction of Leonardo da Vinci's famous "The Last Supper" as an engraving or print. The image shows the iconic scene with thirteen figures seated at a long table covered with a white tablecloth. The composition is set in a room with a coffered ceiling and three windows visible in the background, showing a distant landscape. The dramatic scene is arranged symmetrically, with the central figure at the middle of the table and the other twelve arranged in groups on either side. There appears to be text in Latin below the image, including "AMEN DICO VOBIS QUIA UNUS VESTRUM ME TRADITURUS EST" which translates to "Truly I say to you, one of you will betray me." The image has a sepia or brownish tone typical of historical prints and engravings.

Created by claude-3-opus-20240229 on 2025-01-31

The image depicts Leonardo da Vinci's famous painting "The Last Supper". It shows Jesus and his twelve apostles seated at a long table, partaking in what appears to be their final meal together before Jesus' crucifixion, based on the Biblical account. The figures are all dressed in robes and have expressive poses and hand gestures, conveying a sense of interaction and emotion. The setting appears to be an interior room with arched ceilings.

Below the image of the painting itself, there is an inscription that reads "AMEN DICO VOBIS QUIA UNUS VESTRUM ME TRADITURUS EST", which translates from Latin to "Truly I tell you, one of you will betray me". This is a quote attributed to Jesus in the Bible, referring to Judas Iscariot's impending betrayal.

The sepia tones and aged paper suggest this is an old print or reproduction of Leonardo's masterpiece from 1495-1498, which covers the wall of the dining hall at the monastery of Santa Maria delle Grazie in Milan, Italy. It captures one of the most iconic scenes in Western art and Christianity.

Created by claude-3-haiku-20240307 on 2025-01-31

The image depicts the famous painting of the Last Supper, a biblical scene in which Jesus Christ is shown sharing a final meal with his twelve apostles before his crucifixion. The image shows the central figure of Christ seated at the table, surrounded by his apostles. The scene is set in an ornate interior with arched windows and a decorative ceiling. The apostles are shown in various poses, some engaged in conversation, while others appear deep in thought. The overall composition and style suggest this is a print or engraving of the original painting, likely by a renowned artist.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-16

The image depicts a sepia-toned print of Leonardo da Vinci's famous painting, "The Last Supper." The scene is set in a long room with a table running down the center, where Jesus and his twelve apostles are seated. Jesus is positioned at the head of the table, while the apostles are arranged along either side. The atmosphere is one of solemnity and contemplation, as the figures are engaged in conversation and reflection.

The print is rendered in a detailed and realistic style, with intricate textures and shading that bring the scene to life. The sepia tone gives the image a sense of age and nostalgia, evoking a sense of history and tradition. The overall effect is one of reverence and awe, inviting the viewer to reflect on the significance of this pivotal moment in Christian history.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-16

The image depicts a sepia-toned engraving of Leonardo da Vinci's famous painting, "The Last Supper." The scene is set in a long, narrow room with a high ceiling and three windows on the back wall. Jesus Christ sits at the center of a long table, surrounded by his twelve apostles.

The apostles are arranged in pairs, with each pair engaged in conversation or reacting to Jesus' words. The table is laden with food and drink, including plates, cups, and bread. The atmosphere is one of solemnity and contemplation, as the apostles prepare for Jesus' impending betrayal and crucifixion.

The engraving is rendered in exquisite detail, capturing the subtleties of expression and emotion on the faces of the apostles. The use of sepia tones adds a sense of warmth and intimacy to the scene, drawing the viewer into the drama unfolding before them.

At the bottom of the image, there is an inscription in Latin, which reads: "AMEN DICO VOBIS QVIA VNVS VESTRVM ME TRADITVRVS EST." This translates to "Amen I say to you, one of you will betray me." The inscription serves as a reminder of the central theme of the painting: the betrayal of Jesus by one of his own disciples.

Overall, the image is a powerful representation of one of the most iconic scenes in Christian art, capturing the emotional depth and complexity of the moment when Jesus shares his final meal with his apostles.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-31

The painting depicts Jesus Christ and his disciples gathered around a table. It is a monochromatic drawing that is printed on paper. The table is covered with a white cloth, and plates, glasses, and other items are placed on it. Jesus is seated in the middle, and his disciples are seated on either side of him. Behind them, there is a window with curtains. The watermarks are printed on the bottom.

Created by amazon.nova-lite-v1:0 on 2025-01-31

The image depicts a detailed black-and-white drawing of "The Last Supper" by Leonardo da Vinci. The drawing captures the scene of Jesus Christ and his twelve apostles sitting around a long table, engaged in various activities. The central figure of Jesus is prominently placed at the table's head, with his hands raised in a gesture of blessing or teaching. The apostles are arranged in groups, with some engaged in conversation, others looking towards Jesus, and a few appearing to be in deep thought or contemplation. The table is set with plates, cups, and bread, and the room has a simple yet elegant design with a wooden ceiling and patterned walls. The drawing is signed by Leonardo da Vinci, indicating his authorship.