Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 57-77 |
| Gender | Male, 99.3% |
| Disgusted | 0.4% |
| Confused | 3.3% |
| Happy | 0.5% |
| Angry | 3.7% |
| Calm | 76.6% |
| Surprised | 1.2% |
| Sad | 14.2% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 98.8% |

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 89.3% |
| events parties | 5.7% |
| paintings art | 4.2% |
| pets animals | 0.4% |
| food drinks | 0.2% |
| text visuals | 0.2% |
| nature landscape | 0.1% |

Captions

Microsoft

Created on 2018-02-10

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 85% |
| a group of people sitting posing for the camera | 84.9% |
| a group of people posing for the camera | 84.8% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-30

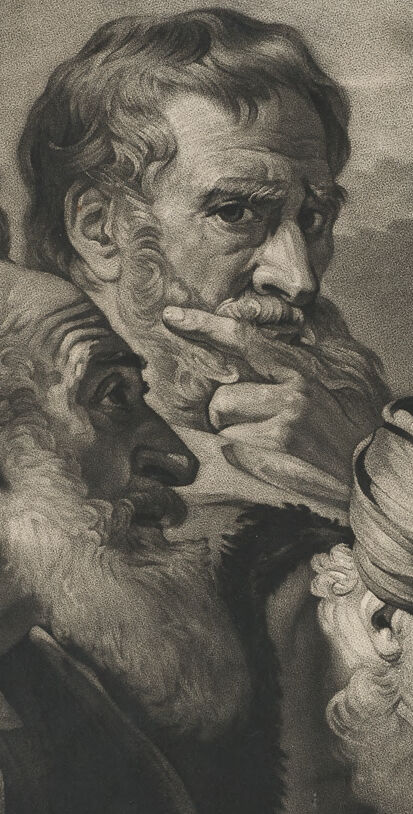

This image depicts a group of men engaged in a discussion. One figure on the right is raising their right hand with an index finger pointing upwards, possibly emphasizing a point or making a declaration. The central figure is gesturing with their hands, holding and showing an object to the others. The attire of the men appears to be historical, with robes and head coverings typically associated with ancient or biblical times. The overall scene suggests a moment of teaching or debate.

Created by gpt-4 on 2024-11-17

The image depicts a group of individuals in classical attire, engaging in what seems to be a significant interaction. The person on the right side of the image is gesturing upwards with one hand, while the other hand is extended outwards as if presenting an argument or making a proclamation. This figure is dressed in flowing robes and commands attention, suggesting a leadership or teacher role. The clothing and style are reminiscent of classical or biblical times, and the setting appears to include architectural elements such as pillars, which reinforce the historical context. The overall composition, attire, and gestures suggest a scene that could be representing a philosophical or religious discussion or teaching moment, typical of classical or religious artworks. The monochromatic tones imply it might be a reproduction or a photograph of an original painting.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This appears to be a classical religious artwork, likely depicting a biblical scene. The black and white image shows several figures in flowing robes engaged in what seems to be a significant interaction or discussion. On one side, there's a group of older men with beards, while on the right stands a younger figure with longer hair in a teaching or speaking pose with one hand raised. The artwork has dramatic lighting and the figures are rendered in a style typical of Renaissance or Baroque religious paintings. The composition draws the viewer's attention to the interaction between the figures, with their hand gestures and facial expressions suggesting an important dialogue or debate taking place.

Created by claude-3-haiku-20240307 on 2024-11-17

The image appears to depict a dramatic biblical scene, likely based on a biblical story or parable. It shows several figures, including an older man with a long beard and flowing robes, surrounded by other men in robes and turbans. The central figure seems to be in distress or being questioned by the others. The overall composition and style suggest this is a work of classical or religious art, perhaps a painting or engraving.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

This image is a painting of Jesus Christ, surrounded by a group of men. The painting is rendered in black and white, with Jesus standing on the right side of the image. He is depicted wearing a long robe and has his right arm raised, pointing upwards with his index finger. In his left hand, he holds a small object, possibly a coin. To the left of Jesus, a group of men are gathered, all of whom appear to be older. They are dressed in robes and turbans, and some of them have beards. One of the men is holding out his hand, as if offering something to Jesus. The background of the painting is dark and shadowy, with a faint light source coming from the top-left corner. The overall atmosphere of the painting is one of reverence and contemplation, with Jesus at the center of attention.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-30

The image depicts a scene from the Bible, specifically the story of Jesus healing the blind man. The painting shows Jesus standing on the right side of the image, wearing a long robe and holding out his hand with his palm facing upwards. He has long hair and a beard, and is looking at the man he is about to heal. To the left of Jesus are several men, all of whom are looking at him with a mixture of curiosity and awe. They are dressed in robes and turbans, and some of them have their hands raised in a gesture of supplication or prayer. In the background of the painting, there is a cloudy sky and a building or structure that appears to be made of stone. The overall atmosphere of the painting is one of reverence and wonder, as the men gather around Jesus in anticipation of his healing power. The painting is done in a classical style, with detailed brushstrokes and shading that gives the figures a sense of depth and dimensionality. The colors used are muted, with shades of brown, beige, and gray dominating the palette. The overall effect is one of serenity and tranquility, as if the viewer has been transported to a peaceful and sacred place. Overall, the image is a powerful representation of the healing power of Jesus and the faith of those who believe in him. It is a testament to the enduring power of art to convey complex emotions and ideas, and to inspire us to reflect on our own lives and values.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-28

The image depicts a scene from the Bible, likely the moment when Jesus Christ is questioned by the Pharisees and Sadducees, as described in the New Testament. The scene is rendered in black and white, giving it a timeless and historical feel. In the center, Jesus is standing, dressed in a simple tunic, with his right hand raised and his left hand extended towards an elderly man, possibly a Pharisee. The elderly man, wearing a turban, is leaning forward, intently listening to Jesus. Behind Jesus, there are several other figures, including a man with a beard and a woman, both looking towards Jesus. The background is blurred, suggesting an outdoor setting, possibly a temple or a courtyard. The overall composition is dynamic, with the figures arranged in a way that draws the viewer's eye towards the central interaction between Jesus and the elderly man.

Created by amazon.nova-pro-v1:0 on 2025-02-28

The image is a black and white painting of a group of people, possibly a religious scene. The people are dressed in ancient clothing, and one of them is holding a coin. The man in the middle is looking at the coin, and the man on the right is looking at him. The man on the left is looking at the man on the right. The sky is cloudy, and there is a pillar behind them.