Machine Generated Data

Tags

Color Analysis

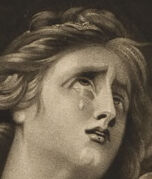

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 14-23 |
| Gender | Male, 58.3% |
| Sad | 24.1% |
| Happy | 3.2% |
| Angry | 33% |
| Calm | 10% |
| Surprised | 7.8% |
| Confused | 15.5% |
| Disgusted | 6.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-38 |
| Gender | Female, 56.6% |
| Happy | 1.3% |
| Surprised | 3.8% |
| Angry | 7.4% |
| Calm | 12.7% |
| Disgusted | 11.2% |
| Sad | 55% |
| Confused | 8.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-38 |
| Gender | Female, 95.5% |
| Calm | 47.9% |
| Surprised | 17.6% |
| Sad | 4% |
| Angry | 6.7% |
| Disgusted | 8.4% |
| Confused | 11.5% |
| Happy | 4% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 34.6% |
| streetview architecture | 25.4% |
| people portraits | 21.9% |
| events parties | 11% |
| nature landscape | 5.5% |
| text visuals | 0.7% |
| pets animals | 0.4% |
| food drinks | 0.3% |
| interior objects | 0.1% |
| beaches seaside | 0.1% |

Captions

Microsoft

Created on 2018-02-10

| Caption | Confidence |
| --- | --- |
| a group of people sitting posing for the camera | 87.9% |
| a group of people posing for a photo | 85.2% |
| a group of people sitting posing for a photo | 84.3% |

Azure OpenAI

Created on 2024-11-28

This is a monochrome image depicting a classical scene set in a wooded landscape. The central figure, draped in flowing garments, is gesturing dramatically with one arm raised. There is an expression of distress or concern as this figure clutches the arm of another figure, who appears to be reclining or slumped over in a state of exhaustion or sorrow. The second figure's posture and expression suggest vulnerability or need for comfort. In the background, other figures are depicted among the trees; these appear ethereal, possibly indicating a heavenly or spiritual presence as one of them extends an arm forward as if reaching out to or interacting with the main figures in the foreground. The art style suggests an influence from classical or Renaissance traditions, rich in allegory or mythological storytelling, with a focus on human emotion and divine intervention.

Anthropic Claude

Created on 2024-11-27

The image depicts a dramatic, allegorical scene set in a lush, forested landscape. In the foreground, a woman with an ethereal, angelic appearance is reaching out towards a figure lying on the ground. The figure on the ground appears to be resting or in a state of distress. Above the scene, an angelic figure with outstretched wings is visible in the clouds. The overall composition and lighting create a sense of mystery and spiritual undertones.

Meta Llama

Created on 2024-11-25

The image depicts a scene from the biblical story of Jacob wrestling with God, also known as the "Angel of the Lord" or "Angel of Jacob." The scene is rendered in a classical style, with the figures set against a backdrop of trees and foliage. In the foreground, Jacob is shown lying on the ground, his body twisted in a dramatic pose as he grapples with the angel. His right arm is raised, and his left arm is bent at the elbow, with his hand grasping the angel's wrist. The angel, depicted as a male figure with wings, is shown in mid-air, his right arm outstretched and his left arm holding Jacob's wrist. The angel's face is serene, with a gentle expression. The background of the painting features a serene landscape, with trees and foliage stretching out towards the horizon. The sky above is a soft, pale blue, with a few wispy clouds scattered across it. The overall effect of the painting is one of drama and tension, as Jacob struggles against the angel in a bid to secure his blessing. The painting is rendered in a classical style, with smooth lines and subtle shading. The colors used are muted, with shades of brown, beige, and gray dominating the palette. The overall effect is one of quiet contemplation, inviting the viewer to reflect on the significance of the scene being depicted. The painting is likely intended to convey the idea that even in the midst of struggle and adversity, there is always the possibility for transformation and growth. Jacob's wrestling match with the angel is a powerful symbol of the human desire for connection with something greater than ourselves, and the painting captures this moment of intense spiritual struggle in a beautiful and evocative way.