Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 11-18 |
| Gender | Female, 54.8% |
| Happy | 45% |
| Surprised | 45% |
| Angry | 45% |
| Disgusted | 45% |
| Calm | 45.1% |
| Sad | 54.7% |
| Confused | 45% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 99.2% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 79.2% |
| pets animals | 20.7% |

Captions

Microsoft

Created on 2019-06-17

| Caption | Confidence |
| --- | --- |
| a vintage photo of a group of people posing for the camera | 82.5% |
| a vintage photo of a group of people posing for a picture | 82.4% |
| a vintage photo of a person | 82.3% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-01-26

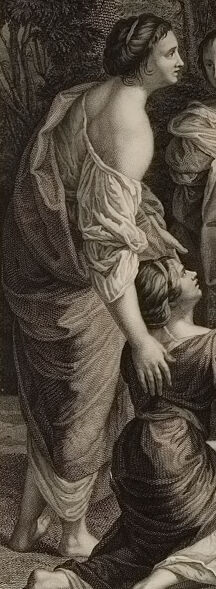

The image depicts a classical, historical scene featuring several figures dressed in flowing, draped garments typical of ancient or Renaissance times. In the center, two women are holding an infant enclosed in a cloth. Other figures surround them, arranging themselves in dynamic poses that suggest they are engaged in an important or emotional moment. The background includes detailed trees and a cloudy sky, highlighting the outdoor setting. The composition and style of the clothing and setting suggest it may be an illustration of a significant event from history or mythology.

Created by gpt-4 on 2025-01-26

This image is a grayscale artwork depicting a scene with multiple figures wearing classical drapery that is reminiscent of ancient Greco-Roman attire. The clothing and poses suggest a scene from mythology or history, as the style is indicative of neoclassical themes. The figures appear to be engaged in a dynamic and possibly emotional moment, with one figure positioned on the ground reaching out towards another figure who is being supported by others. The setting includes a nature-based backdrop with trees and an open sky, adding to the serene yet dramatic atmosphere of the scene. The artwork employs fine detailing and shading techniques to create depth and texture.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-01-26

This appears to be a classical engraving or print depicting a dramatic scene with several figures in flowing, draped clothing typical of ancient or classical style. The composition shows a group gathered around what appears to be an infant or small child. The central figure wears a crown or diadem and elegant robes, while others are arranged in emotional poses around them, some kneeling or gesturing. The scene is set in an outdoor environment with trees and foliage in the background. The artistic style and execution suggest this is likely from the 17th or 18th century, with careful attention paid to the folds of fabric and the classical poses of the figures. The print uses sophisticated shading and line work characteristic of high-quality engravings from this period.

Created by claude-3-opus-20240229 on 2025-01-26

The image depicts a group of women, likely in the 18th century based on their clothing and hairstyles, resting by some trees in a pastoral landscape. The women are wearing elaborate dresses with full skirts and detailed bodices. Their hair is styled in upswept curls and adorned with decorative elements. The women appear to be interacting with each other, with some sitting and others standing or leaning against the tree trunks. The scene has a peaceful, idyllic quality, evoking a sense of leisure and camaraderie among the female figures in a natural outdoor setting.

Created by claude-3-haiku-20240307 on 2025-01-26

The image appears to be an engraved illustration depicting a group of women in classical poses and attire arranged in a lush, idyllic natural setting with trees and foliage in the background. The central figures seem to be attending to or comforting a person lying on the ground, suggesting a narrative scene of some kind. The overall style and composition evoke a classical, allegorical or mythological tone.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-01-26

The image is a black-and-white illustration of a group of women in old-fashioned clothing gathered around a baby. The central figure, a woman wearing a crown and a long dress, stands out as she holds the baby. She is surrounded by other women who are dressed in similar attire, with some kneeling or standing in various poses. The background features trees and a cloudy sky, suggesting an outdoor setting. The overall atmosphere appears to be one of reverence and celebration, with the women gathered around the baby in a scene that evokes a sense of tradition and cultural significance.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-01-26

The image depicts a scene from the painting "The Finding of Moses" by Nicolas Poussin, created in 1638. The painting is rendered in sepia tones, which adds to its timeless and classic feel. In the foreground, a group of women are gathered around a baby, who is being lifted up by two of them. The women are dressed in flowing robes, with one woman wearing a crown, indicating her importance. The baby is swaddled in a cloth, and the women's faces convey a sense of concern and care. The background of the painting features trees and a cloudy sky, which adds depth and context to the scene. The overall atmosphere of the painting is one of serenity and reverence, capturing a moment of tenderness and devotion. The image is surrounded by a thick border, which helps to frame the painting and draw attention to its central figures. The border also adds a sense of age and history to the image, as if it has been preserved for centuries. Overall, the image is a beautiful representation of Poussin's masterpiece, capturing the essence of his style and technique. The use of sepia tones and the detailed rendering of the figures and background create a sense of depth and dimensionality, drawing the viewer into the scene.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-26

The image is a monochromatic drawing of a group of women gathered around a woman holding a baby. The woman holding the baby is wearing a crown and a long dress. The women around her are also wearing long dresses. Some of them are kneeling on the ground, while others are standing. They are all looking at the baby. Behind them are trees and a view of the sky.

Created by amazon.nova-lite-v1:0 on 2025-01-26

The image is a black-and-white drawing depicting a scene with several women gathered around a central figure. The central figure, a woman wearing a crown, is holding a baby in her arms. The baby is lying on a cloth, and two women on either side of the central figure are reaching out to touch the baby. The other women in the group are standing behind the central figure, some of them looking down at the baby. The background shows trees and a landscape, suggesting an outdoor setting. The image has a vintage look, and the drawing is framed with a white border.