Machine Generated Data

Tags

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 63-73 |
| Gender | Female, 69% |
| Calm | 94.1% |
| Surprised | 6.9% |
| Fear | 6.1% |
| Sad | 2.6% |
| Happy | 1.3% |
| Disgusted | 0.5% |
| Angry | 0.4% |
| Confused | 0.3% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 97.4% |

Categories

Imagga

Created on 2023-10-06

| Category | Confidence |
|---|---|
| paintings art | 100% |

Captions

Microsoft

Created by unknown on 2018-05-10

| Caption | Confidence |
|---|---|
| an old photo of a person | 66.9% |
| old photo of a person | 61.5% |
| an old photo of a book | 33.3% |

Salesforce

Created by general-english-image-caption-blip-2 on 2025-07-07

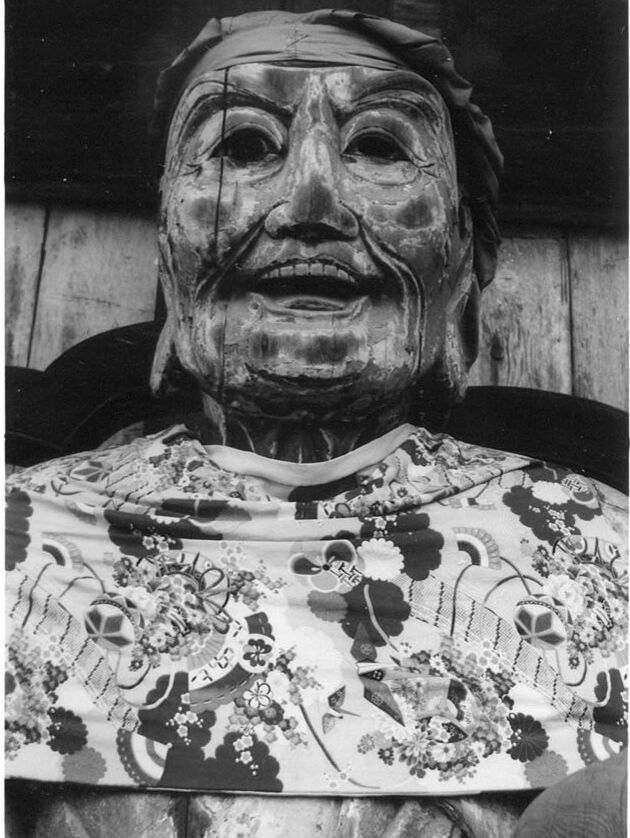

a black and white photo of a statue with a face mask

Created by general-english-image-caption-blip on 2025-05-20

a photograph of a black and white photo of a man with a mask on

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-09

This black-and-white image shows a wooden figure dressed in a patterned cloth adorned with intricate floral motifs, geometric designs, and traditional decorative elements. The background consists of wooden boards, providing a rustic setting for the figure, which appears to be seated or part of a sculptural display. The craftsmanship and textiles suggest cultural or artistic significance.

Created by gpt-4o-2024-08-06 on 2025-06-09

The image depicts a statue clothed in a fabric with intricate patterns. The fabric features floral and geometric designs, with various shapes and motifs. The statue appears to be part of a traditional or cultural setting, indicated by the style of the garment. The background consists of wooden panels, adding to the rustic or historical ambiance of the scene.

Anthropic Claude

Created by anthropic.claude-3-haiku-20240307-v1:0 on 2025-06-13

The image shows a large, carved wooden mask or statue. The mask has a prominent, exaggerated facial expression with wide, staring eyes and an open, toothy mouth. The mask is adorned with a colorful, floral-patterned fabric or garment draped over its chest. The background appears to be a wooden structure or wall, creating a stark contrast with the carved mask. The overall image has a striking, almost unsettling quality, capturing the unique and expressive nature of this carved wooden figure.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-13

This is a black and white photograph of what appears to be a wooden or carved mask or sculpture with Asian features. The mask has a smiling expression with pronounced features and is wearing what looks like a head covering. Below the face, there is a floral patterned garment or fabric with an intricate design featuring flowers and other decorative elements. The photograph has a somewhat eerie quality due to the stark contrast and close-up perspective of the mask's features. The style suggests this might be from a traditional Asian theatrical or ceremonial context.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-12

The image is a black-and-white photograph of a statue of an elderly woman's head and shoulders, with a floral patterned cloth draped over her shoulders.

The statue is made of stone or metal and depicts the head and shoulders of an elderly woman. She has a wide face with deep wrinkles, a prominent nose, and a wide mouth with thin lips. Her eyes are sunken, and her eyebrows are thin and arched. She is wearing a headscarf that covers her hair, and her ears are visible. The statue appears to be weathered, with cracks and discoloration visible on its surface.

The woman's head is tilted slightly upwards, and her mouth is open as if she is speaking or singing. The overall effect is one of serenity and contentment, as if the woman is at peace with the world around her.

The background of the image is a wooden wall, which adds a sense of warmth and coziness to the scene. The wall is made up of vertical planks, and it appears to be old and worn, with scratches and scuffs visible on its surface.

Overall, the image is a beautiful and poignant portrayal of an elderly woman, captured in a moment of quiet contemplation. The use of black and white photography adds a sense of timelessness and universality to the image, making it feel both personal and relatable.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-12

The image is a black-and-white photograph of a large wooden statue of a man's head and upper torso. The statue appears to be carved from wood and has a weathered appearance, with visible cracks and wear on its surface. It is positioned in front of a wall made of vertical wooden planks.

The statue is wearing a traditional Japanese garment, which is white with a floral pattern featuring various shades of gray and black. The garment covers the statue's chest and shoulders, adding a touch of cultural significance to the overall scene.

In the background, the wooden wall provides a rustic and natural setting for the statue. The overall atmosphere of the image suggests that the statue may be an important cultural or religious artifact, possibly displayed in a museum or temple. The use of black and white photography adds a sense of timelessness and simplicity to the image, drawing attention to the intricate details of the statue and its surroundings.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-05

The image is a black-and-white photograph of a traditional Japanese mask. The mask is a full-face mask, covering the entire face of the person wearing it. The mask has a wooden texture and is intricately designed with various patterns and details. The mask has a wide, smiling mouth and large, expressive eyes. The mask is worn by a person, and the person's head is partially visible above the mask. The person is wearing a traditional Japanese outfit, including a white shirt with a floral design.

Created by amazon.nova-pro-v1:0 on 2025-06-05

The image is a black-and-white photograph of a statue of an old man with a smiling face. The statue has a wrinkled face, and it is wearing a scarf around its neck. The scarf has a floral design. The statue is wearing a robe with a floral design. The statue is placed on a wooden surface, which appears to be a chair. The statue is placed in a dark room.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-02

Here's a description of the image:

The image is a black and white photograph of a wooden sculpture. The sculpture appears to be a bust of an older man with a slightly open mouth, possibly smiling. The face is detailed with wrinkles and lines, suggesting age. He's wearing a patterned shirt or garment with floral and geometric designs. The background appears to be a wooden surface, possibly a wall or structure. The photograph has a vintage feel, possibly due to the black and white format and the subject matter.

Created by gemini-2.0-flash on 2025-05-02

The black and white image depicts a close-up of a carved wooden sculpture of a man. He has a weathered appearance with visible cracks and wear, suggesting age and exposure to the elements. His face is expressive with deep-set eyes and a slight smile revealing teeth. He wears what appears to be a headband or wrapping around his head.

The sculpture is clothed in a patterned garment. The clothing is floral and has a geometric design. The man appears to be positioned in front of a wooden structure, perhaps a building or wall, with planks visible in the background. The overall tone of the image is somber and contemplative, highlighting the details of the sculpture and emphasizing its aged and historical nature.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image depicts a person wearing a traditional mask and costume, likely associated with a cultural or theatrical performance. The mask is intricately designed, featuring exaggerated facial features such as deep wrinkles, a prominent nose, and a wide, open-mouthed smile. The mask appears to be made of a material that gives it a weathered, aged look.

The person is also wearing a patterned garment over their shoulders, which has a floral design with large, bold flowers and intricate details. The garment covers the upper part of the body, adding to the traditional aesthetic of the costume. The background is dark and appears to be an indoor setting, possibly a wooden structure.

This type of attire is often seen in traditional theater performances, such as Noh or Kabuki in Japan, where masks and elaborate costumes are used to portray characters and tell stories.