Machine Generated Data

Tags

Amazon

created on 2019-10-30

Clarifai

created on 2019-10-30

Imagga

created on 2019-10-30

| Tag | Confidence |
| --- | --- |
| stamp | 49.9 |
| sketch | 49 |
| drawing | 43.3 |
| envelope | 39 |
| die | 36.2 |
| paper | 30.7 |
| vintage | 30.6 |
| grunge | 29.8 |
| old | 29.3 |
| representation | 28.2 |
| antique | 26.9 |
| shaping tool | 26.5 |
| retro | 24.6 |
| texture | 24.3 |
| container | 24.2 |
| design | 23.5 |
| dirty | 20.8 |
| art | 20.4 |
| floral | 19.6 |
| frame | 17.5 |
| aged | 17.2 |
| artistic | 16.5 |
| symbol | 16.2 |
| flower | 16.2 |
| tool | 16.1 |
| decorative | 15.9 |
| pattern | 15.1 |
| blank | 13.7 |
| brown | 13.3 |
| page | 13 |
| border | 12.7 |
| grungy | 12.3 |
| card | 12 |
| graphic | 11.7 |
| wallpaper | 11.5 |
| shirt | 11.3 |
| ancient | 11.3 |
| celebration | 11.2 |
| empty | 11.2 |
| note | 11 |
| silhouette | 10.8 |
| textured | 10.5 |
| scroll | 10.5 |
| decoration | 10.4 |
| ornament | 10.3 |
| letter | 10.1 |
| jersey | 10 |
| rough | 10 |
| parchment | 9.6 |
| damaged | 9.5 |
| element | 9.1 |
| black | 9 |
| worn | 8.6 |
| canvas | 8.5 |
| greeting | 8.4 |
| painting | 8.2 |
| paint | 8.2 |
| map | 8.1 |
| detail | 8 |
| yellow | 8 |
| creative | 7.9 |
| love | 7.9 |
| text | 7.9 |
| color | 7.8 |
| leaf | 7.8 |
| space | 7.8 |
| gift | 7.7 |
| bouquet | 7.6 |
| document | 7.4 |
| style | 7.4 |
| foliage | 7.4 |
| object | 7.3 |
| artwork | 7.3 |
| backgrounds | 7.3 |
| clothing | 7.3 |
| business | 7.3 |
| material | 7.1 |
| romance | 7.1 |
| romantic | 7.1 |
| surface | 7.1 |
| garment | 7 |

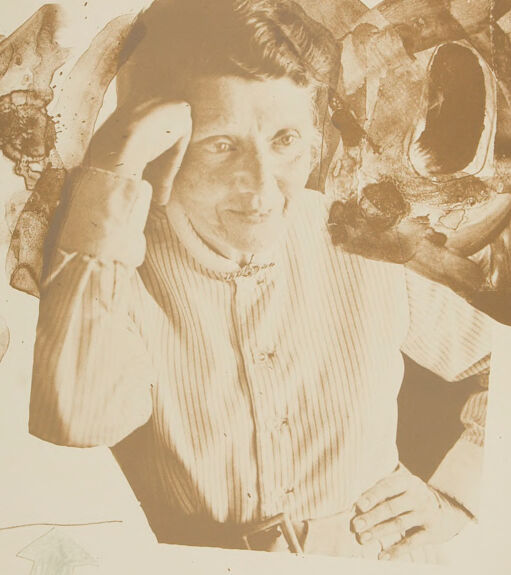

Google

created on 2019-10-30

| Tag | Confidence |
| --- | --- |
| Photograph | 95 |
| Art | 85.4 |
| Illustration | 80.9 |
| Drawing | 77 |
| Photography | 62.4 |
| Stock photography | 62.1 |
| Visual arts | 59.3 |
| Black-and-white | 56.4 |
| Sketch | 54.4 |
| Artwork | 53.4 |
| Style | 52.5 |
| Portrait | 51.6 |
| Painting | 50.4 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-42 |
| Gender | Female, 95.3% |
| Sad | 5% |
| Happy | 1.7% |
| Angry | 2.2% |
| Confused | 6.9% |
| Disgusted | 0.9% |
| Calm | 81.8% |
| Surprised | 1.2% |
| Fear | 0.2% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 100% |

Captions

Microsoft

created on 2019-10-30

| Caption | Confidence |
| --- | --- |
| a close up of text on a white surface | 37% |
| a close up of text on a white background | 36.9% |
| a photo of a person | 36.2% |

Text analysis

Amazon

14

MAwMorN6Ho 14

MAwMorN6Ho