Machine-Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 16-22 |
| Gender | Female, 98% |
| Calm | 96% |
| Surprised | 1.1% |
| Sad | 0.8% |
| Fear | 0.8% |
| Happy | 0.5% |
| Disgusted | 0.3% |
| Angry | 0.3% |
| Confused | 0.1% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 98.4% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 66.5% |
| pets animals | 29.5% |
| people portraits | 1.8% |
| streetview architecture | 1.7% |

Captions

Microsoft

Created on 2022-01-22

| Caption | Confidence |
|---|---|
| a person sitting on a bed | 69.7% |
| a man and a woman sitting on a bed | 39.5% |
| an old photo of a person | 39.4% |

OpenAI GPT

Created by gpt-4 on 2024-12-23

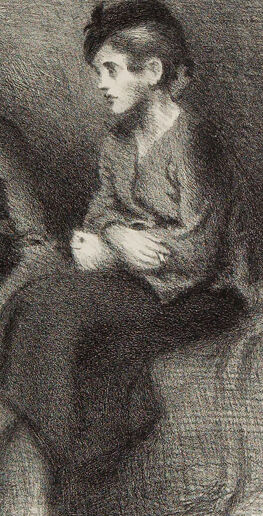

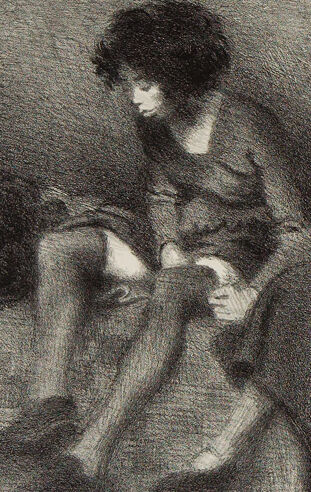

This is a black and white artwork that depicts two figures seated next to each other on what appears to be a bench or a couch. The style of the piece is reminiscent of etching or charcoal drawing, with a range of textures and shading that give depth and form to the subjects. The clothing and hairstyle details suggest a setting either in the past or intended to give a timeless feel. The figures are positioned in a casual, relaxed manner, with one seemingly turned towards the other, perhaps engaged in conversation or sharing a moment of quiet companionship. The signature, "Raphael Soyer," suggests the work is by the artist known for his depictions of everyday people and urban scenes. The background is minimal, focusing the viewer's attention on the interaction between the two figures.

Created by gpt-4o-2024-05-13 on 2024-12-23

The image depicts a lithograph of two individuals seated side by side. They both appear to be engaged in a quiet moment, possibly in conversation or contemplation. The individuals are rendered in a detailed, textured manner using cross-hatched lines, which adds depth and a sense of softness to the image. The figures are wearing simple clothing, and the background is sparsely detailed, focusing attention on the subjects. The artwork is signed by the artist Raphael Soyer and dated 1934 in the bottom right corner.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-23

The image appears to be a black and white etching or engraving depicting two figures seated on a couch or sofa. The figures appear to be a young man and a young woman, though they are not identified by name. The image has a dark, moody atmosphere, with the figures silhouetted against a shadowy background. The artist's signature, "R. Rathbun-Joyce," is visible in the bottom left corner.

Created by claude-3-5-sonnet-20241022 on 2024-12-23

This is a black and white sketch or lithograph showing two young figures seated together. The artwork appears to be from an earlier era, possibly the late 19th or early 20th century, given its style and technique. The figures are dressed in what appears to be period clothing, with one wearing what looks like knee breeches or short pants. The drawing technique uses soft, subtle shading to create a moody, atmospheric quality. The composition is intimate and informal, suggesting these might be companions or siblings sharing a quiet moment. The artist's signature appears to be visible in the lower right corner of the work.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-23

The image depicts a charcoal drawing of two children sitting on a bench, titled 'Two Boys' by Raphael Soyer. The drawing is rendered in a somber and introspective tone, with the children appearing lost in thought. The artist's use of charcoal creates a sense of depth and texture, drawing the viewer's attention to the subtle expressions and postures of the children. The composition is simple yet evocative, inviting the viewer to ponder the story behind the scene.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-23

This image is a black-and-white drawing of two boys sitting on the ground. The boy on the left has dark, curly hair and is wearing a long-sleeved shirt, shorts, and shoes. He is leaning forward with his hands clasped together in front of him. The boy on the right has short, dark hair and is wearing a long-sleeved shirt and pants. He is also leaning forward with his hands clasped together in front of him. The background of the drawing is dark, with some lighter shading around the boys. There are some indistinct shapes in the background, but they are not clearly defined. The overall atmosphere of the drawing is one of quiet contemplation, as if the boys are lost in thought.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-24

The image is a black-and-white drawing by Raphael Soyer titled "Two Girls." It depicts two girls sitting on a bed, facing each other and apparently engaged in conversation. The girl on the left is holding a book and looking down, while the girl on the right is looking at her. The drawing has a vintage look and a sense of intimacy, capturing a moment of connection between the two girls. The image is framed with a white border.

Created by amazon.nova-pro-v1:0 on 2025-02-24

The image depicts a black-and-white drawing of two young children, a boy and a girl, sitting on a bed. The boy, who appears to be the younger of the two, is sitting on the left side of the bed, while the girl is sitting on the right side. They are both looking in the same direction, possibly at something outside the frame. The drawing is done in a sketch style, with fine lines and shading to create depth and texture.