Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-30 |
| Gender | Female, 58.5% |
| Calm | 97.9% |
| Sad | 0.9% |
| Disgusted | 0.3% |
| Fear | 0.2% |
| Surprised | 0.2% |
| Angry | 0.1% |
| Happy | 0.1% |
| Confused | 0.1% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Monitor | 84.4% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 73.6% |
| pets animals | 8% |
| interior objects | 6% |
| streetview architecture | 4.1% |
| nature landscape | 3.1% |
| food drinks | 2.6% |
| text visuals | 2.3% |

Captions

Microsoft

Created on 2022-01-22

| Caption | Confidence |
| --- | --- |
| an old photo of a room | 70.6% |
| an old photo of a living room | 38% |
| old photo of a room | 37.9% |

OpenAI GPT

Created by gpt-4 on 2024-12-23

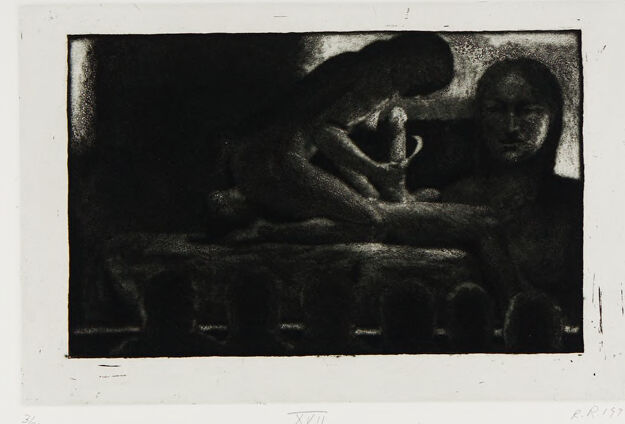

This is a photograph of an artwork, specifically a print. The print is rendered in dark tones, suggesting a night scene or a setting with low light. It depicts an interior space where a figure seems to be situated on a bed or a couch. The composition is centered around an interaction or a moment involving this figure, which is the focal point of the scene.

The artwork is presented with a wide white border that frames the central image, accentuating the contrast between the artwork and the surrounding space. In the lower-left corner of the border, there is a roman numeral "XIII," suggesting that this print may be part of a series or a numbered edition. In the lower-right corner, there's an inscription that appears to be the artist's signature along with the year, which looks to say "62" indicating that this piece might have been created in 1962.

The artwork has a sense of intimacy and stillness, with attention to the play of light and shadow, giving it a rich, textured quality that is characteristic of certain printmaking techniques.

Created by gpt-4o-2024-05-13 on 2024-12-23

The image is an artwork featuring a dark, somber scene. It depicts a figure hunched over another figure in what appears to be an intimate or intense moment. The setting is minimalist, with low lighting contributing to the dramatic and evocative atmosphere of the composition. The figures are rendered in a soft, almost blurred manner, adding to the sense of mystery and emotion. The artwork is mounted on a white background, and there is a notation at the bottom with what appears to be Roman numerals "XVII" and possibly a signature or date.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-23

The image appears to be a black and white photographic print or etching depicting two nude human figures embracing or intertwined in an intimate pose. The figures are rendered in a dark, shadowy style, creating a dramatic and moody atmosphere. The composition is framed within a rectangular border, suggesting this may be an artistic or creative work rather than a documentary photograph. Overall, the image seems to convey a sense of intimacy, connection, and perhaps vulnerability between the two figures.

Created by claude-3-5-sonnet-20241022 on 2024-12-23

This is a dark, moody monochromatic print or etching. The image appears to show shadowy figures or forms in a dimly lit interior setting. There's a strong contrast between light and dark areas, creating a dramatic atmospheric effect. The composition has a horizontal emphasis, with what seems to be architectural elements or a structure along the bottom portion. The print has a somewhat abstract quality, with forms emerging from the darkness. Below the image, there appear to be some notations, likely indicating it's part of a numbered series or edition. The overall impression is one of mystery and emotional depth, achieved through the skillful use of shadow and light.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-23

The image is a black and white print of a woman sitting at a table, with a cup in her hand. The print is signed by the artist, F. Ritz, in the bottom-right corner.

- The woman is sitting at a table, facing the viewer.

- She has dark hair and is wearing a dark dress or robe.

- Her face is partially obscured by shadows.

- The cup is in her right hand, which is resting on the table.

- The cup appears to be made of ceramic or glass.

- It has a handle on one side and a small lip on the other.

- The background of the print is a light gray color.

- There are no other objects or people visible in the background.

- The overall effect is one of simplicity and focus on the woman and the cup.

The print is likely a study or sketch of a woman in a contemplative pose, perhaps enjoying a cup of tea or coffee. The use of black and white creates a sense of contrast and emphasizes the shapes and forms of the woman and the cup.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-23

The image presents a monochromatic artwork, likely a print or painting, featuring a darkened scene with three figures. The central figure appears to be a woman holding an object, possibly a baby, while two other figures are visible on either side of her. The artwork is set against a white background and includes some illegible text at the bottom.

Key Features:

- Central Figure: A woman holding an object, possibly a baby.

- Two Other Figures: Visible on either side of the central figure.

- Background: White.

- Text: Illegible text at the bottom of the artwork.

Overall Impression:

The image conveys a sense of intimacy and closeness among the three figures, with the central figure appearing to be the focus of attention. The use of a monochromatic color scheme adds to the somber and contemplative atmosphere of the artwork.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-27

The image is a black-and-white photograph of a drawing. It depicts two people, one of whom is sitting and the other is standing. The standing person is looking at the seated person, who is bent over and appears to be doing something to their feet. The seated person is wearing a long-sleeved shirt and pants, while the standing person is wearing a long-sleeved shirt and shorts.

Created by amazon.nova-lite-v1:0 on 2025-02-27

The image is a black-and-white photograph featuring two figures in a dimly lit room. The figures are positioned in a seated posture, with one person sitting on a chair and the other person sitting on a desk. The person on the desk is holding a pen, possibly writing or drawing, while the person on the chair is looking at them. The photograph has a vintage feel, with a slightly blurry quality and a monochromatic color scheme. The image is framed in a white border, and there is a small text at the bottom of the image that reads "XVII" and "FR1972".