Machine-Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 1-7 |
| Gender | Female, 65.3% |
| Calm | 15.2% |
| Confused | 1.9% |
| Surprised | 13.7% |
| Sad | 0.7% |
| Happy | 8.1% |
| Fear | 3.2% |
| Disgusted | 7.3% |
| Angry | 49.8% |

Feature analysis

Amazon

| Person | 98.6% | |

Categories

Imagga

| paintings art | 100% | |

Captions

Microsoft

Created by unknown on 2019-08-10

| Caption | Confidence |
| --- | --- |
| a vintage photo of a person | 78.6% |
| a vintage photo of a person holding a book | 51.3% |
| a painting of a person | 51.2% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-26

| a photograph of a drawing of a man laying on a bed | -100% | |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-02-07

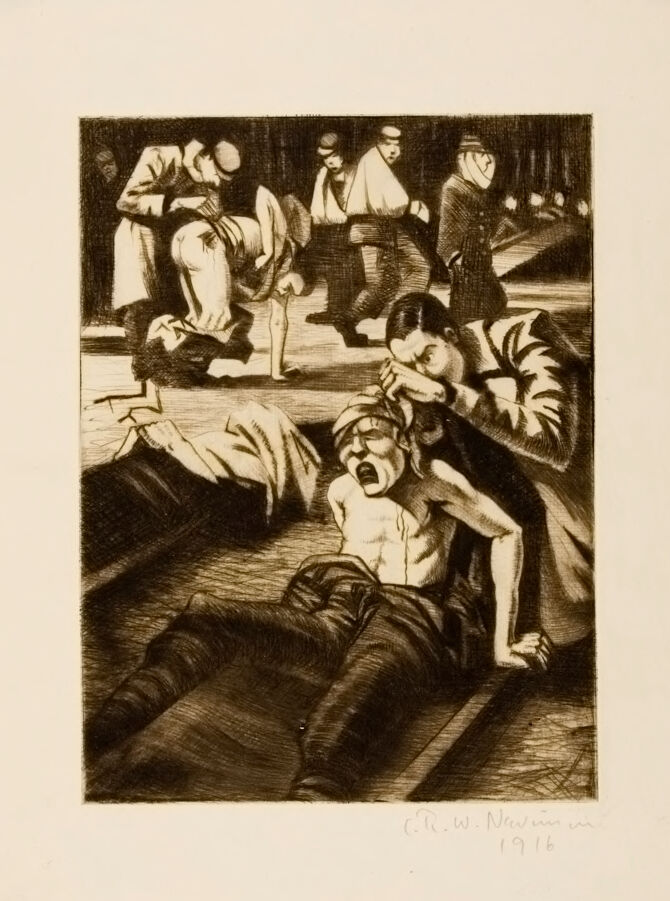

This image appears to be an art piece showing a dramatic scene involving several men in what looks like a nighttime setting. One man is reclining on the ground, shirt open, possibly being tended to by another figure. The other men in the background are engaged in various activities, contributing to the tense, active atmosphere of the scene. The artwork is signed by the artist in the bottom-right corner and dated 1916. The style is reminiscent of early 20th-century illustration, characterized by strong contrasts and a sense of urgency.

Created by gpt-4 on 2025-02-07

This is a print of a black-and-white etching. The image depicts several individuals in an urban environment, possibly workers, as their attire and postures suggest manual labor. The clothing, including the hats and coats worn by the figures, suggests an earlier era, perhaps the early 20th century. One figure in the foreground is bending toward the pavement or street, as if working or picking something up. Other figures appear to be walking past or engaged in separate tasks. The overall atmosphere conveyed by the etching is one of everyday working life in a city. A strong contrast between light and dark areas contributes to the depth and texture of the image. The etching is signed and dated, identifying the artist and the period in which it was created. The paper on which the etching is printed has generous borders, as is common in print art for framing and presentation.

Please note that the description is based on visible elements of the etching and does not cover obscured details.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-02-07

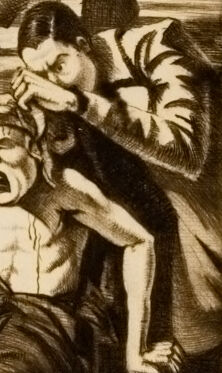

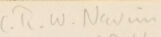

This appears to be a dark and dramatic artwork from 1916, likely a lithograph or etching, depicting what seems to be a medical or emergency scene. The composition shows several figures attending to someone who is lying on the ground, appearing to be in distress or pain. The central figure is shirtless and seems to be crying out, while someone leans over them, possibly providing medical attention. There are additional figures in the background observing the scene. The artistic style uses strong contrasts between light and dark, creating a sense of tension and urgency. The work has an emotionally charged atmosphere that captures a moment of human suffering or crisis. The signature indicates it was created by Ch. W. Newman in 1916.

Created by claude-3-opus-20240229 on 2025-02-07

The black and white sketch depicts a dramatic scene with several figures engaged in what appears to be a violent confrontation or struggle. In the foreground, a man lies on the ground with his mouth open as if crying out. Another figure stands over him, reaching down towards the fallen man's face. In the background, additional figures stand around observing the action, some with arms raised. The sketchy, gestural linework and strong tonal contrasts create an intense, emotionally charged atmosphere in this unsettling scene.

Created by claude-3-haiku-20240307 on 2025-02-07

The image appears to be a black and white etching or engraving depicting a scene of people in distress or suffering. In the foreground, there are several figures lying on the ground or being attended to, seemingly in a state of pain or anguish. In the background, there are other figures observing or interacting with the distressed individuals. The overall atmosphere of the image is one of turmoil and hardship.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-12

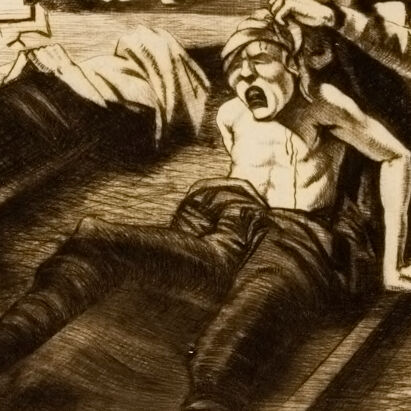

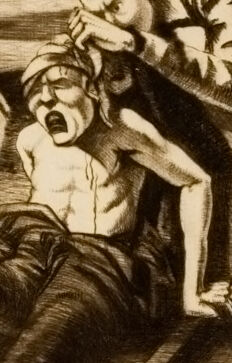

The image depicts a black and white drawing of a man lying on the ground, surrounded by several people. The man is in the foreground, wearing dark pants and a light-colored shirt, with his head tilted back and his mouth open in a scream. He appears to be injured or in distress.

In the background, there are several people standing around him, some of whom are also wearing dark clothing. One person is kneeling beside the man, while another is standing behind him, looking down at him with concern. There are also several other figures in the background, some of whom are partially obscured by the man's body.

The overall atmosphere of the image is one of chaos and confusion, with the man's scream and the surrounding figures creating a sense of urgency and alarm. The use of dark colors and dramatic shading adds to the sense of tension and drama in the scene.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-12

The image depicts a black-and-white etching of a scene from World War I, titled "The Wounded" by Christopher Richard Wynne Nevinson. The etching features a group of soldiers in various states of distress, with some lying on the ground and others standing or kneeling nearby. The soldiers are dressed in military uniforms, and some have bandages or other signs of injury. In the background, there are trees and other foliage, suggesting that the scene is set in a forest or wooded area.

The overall mood of the etching is one of sadness and despair, as the soldiers appear to be struggling with the physical and emotional toll of war. The use of dark shadows and muted colors adds to the somber atmosphere, creating a sense of gravity and seriousness. The etching is a powerful commentary on the human cost of war and the impact it has on those who fight it.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-07

The black-and-white illustration depicts a scene in a hospital. A man is lying on the floor, and a person standing beside him is holding his head and looking at him. The man appears to be in pain. Another person stands beside him, and a few people are standing behind him. A person is standing in front of the man, and some clothes lie on the floor.

Created by amazon.nova-lite-v1:0 on 2025-02-07

The image is a black-and-white drawing depicting a scene from a hospital or medical setting during World War I. The drawing features a man lying on a stretcher, appearing to be in pain or distress, with his mouth open and his eyes closed. Another man, dressed in a long coat and hat, is standing beside him, possibly a doctor or medical professional, and is holding the patient's head with his left hand while placing his right hand on the patient's forehead. In the background, there are several other individuals, including a man in a white coat, who may be medical staff or patients. The image conveys a sense of urgency and medical care during the war, with the artist capturing the emotional and physical toll of the conflict on both soldiers and medical personnel.

Google Gemini

Created by gemini-2.0-flash on 2025-05-28

The image is a black-and-white etching depicting a scene of trauma, possibly related to war or injury. In the foreground, a man sits with his legs outstretched, his torso bare and his head bandaged, seemingly in distress, with his mouth open in what appears to be a scream or expression of pain. Another figure leans over him, possibly attending to his wounds or offering assistance.

In the background, there are several other figures, some of whom appear to be injured, bandaged, or in various states of being tended to. The overall atmosphere is somber and suggestive of suffering, with the monochromatic palette adding to the gravity of the scene. The style is reminiscent of early 20th-century expressionist art, focusing on emotional impact through exaggerated expressions and dark shading.

Created by gemini-2.0-flash-lite on 2025-05-28

The image is a stark, monochromatic etching depicting a scene of suffering and medical care. The central focus is on a man, shirtless, with a bandaged head, sitting on the ground and screaming in agony. A person in a coat and with dark hair stands over him, seemingly tending to his wounds.

In the background, other individuals are present. Some appear to be wounded or in distress, with bandaged heads or arms. The setting seems to be a makeshift treatment area, possibly a war hospital or a similar emergency situation. The overall tone is one of desolation, pain, and perhaps the chaos of a war scene.