Machine-Generated Data

Face analysis

Amazon

AWS Rekognition

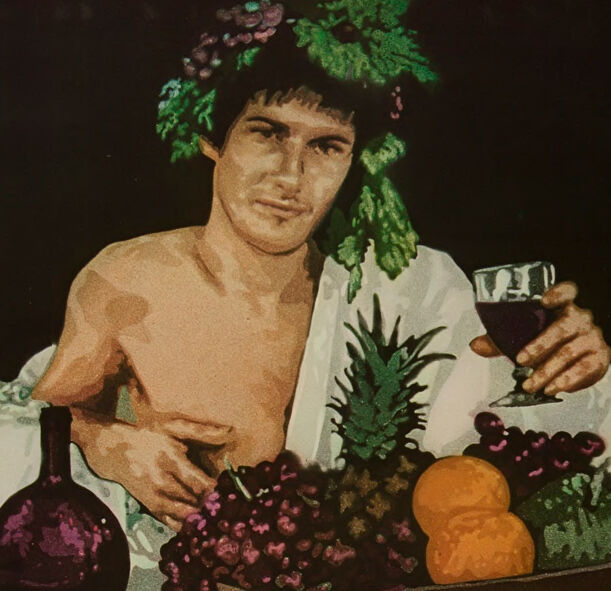

| Attribute | Value |
| --- | --- |
| Age | 22-34 |
| Gender | Male, 99.5% |
| Disgusted | 3.1% |
| Angry | 8.3% |
| Sad | 64.3% |
| Surprised | 0.5% |
| Confused | 2.8% |
| Fear | 1% |
| Happy | 0.6% |
| Calm | 19.2% |

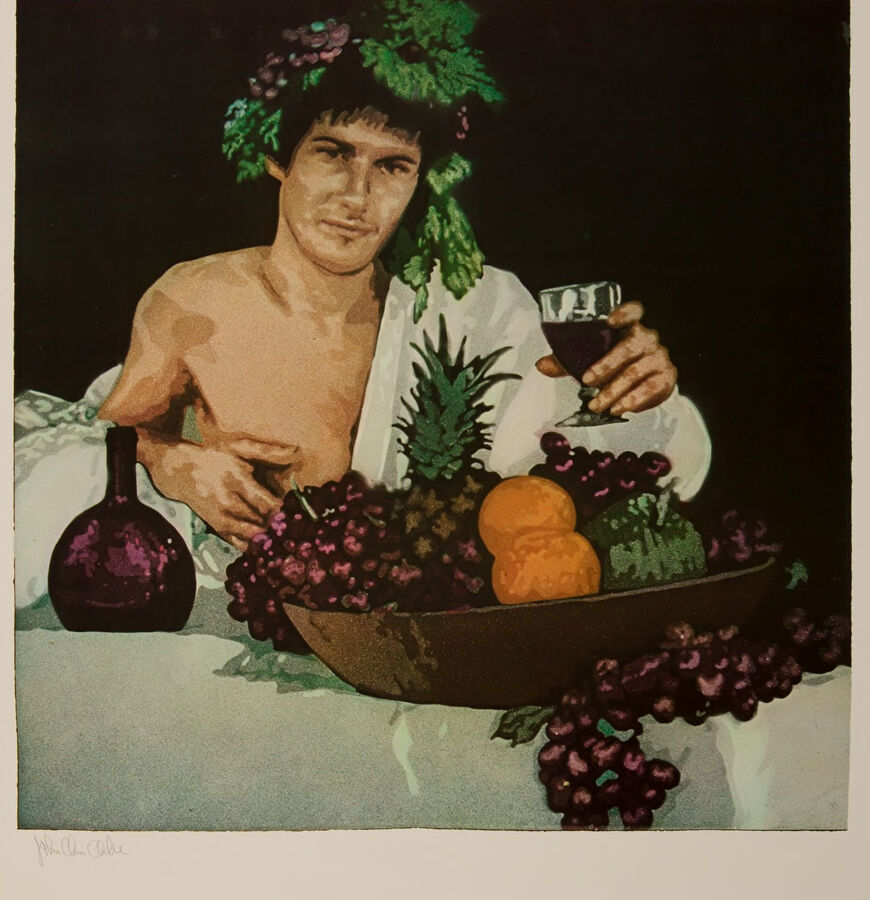

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 98.1% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 97.9% |

Captions

Microsoft

Created on 2019-11-01

| Caption | Confidence |
| --- | --- |
| a person holding a flower | 28.3% |
| a person holding a flower | 28.2% |
| an old photo of a person | 28.1% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-01-31

The image shows a classical-style painting depicting a person with a crown of leaves and grapes on their head. The individual is draped in a white cloth and is holding a glass of red wine in one hand. The scene includes a large basket filled with a variety of fruits such as grapes, oranges, and a pineapple. There is also a dark wine bottle placed on the table next to the person. The overall setting is indicative of a traditional Bacchus or Dionysus theme, evoking elements of luxury and celebration associated with the ancient gods of wine and festivity.

Created by gpt-4 on 2025-01-31

The image features a setting that appears to be reminiscent of a classical still life painting. It shows an array of fruits, prominently grapes, with possibly a pineapple and other fruits presented in a bowl, creating an atmosphere of abundance. To the left of the composition, there's a dark-colored bottle that could be indicative of wine. The person present is raising a glass, suggesting a toast or a gesture of hospitality or celebration. The overall tone of the image is warm and rich, possibly evoking the senses with the visual representation of taste and the idea of a feast or gathering. The style suggests a combination of realism with a slightly stylized color palette, achieving a timeless quality in its depiction.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-01-31

This is a stylized photograph or artistic print showing a Bacchus-like scene. The subject is draped in white fabric and wearing a wreath of green leaves, holding a glass of what appears to be red wine. In front of them is a large bowl filled with fruit including grapes, oranges, and a pineapple. The composition is reminiscent of classical still life paintings and Roman feast imagery. There's a dark wine bottle visible on the table as well. The image has a theatrical, staged quality with dramatic lighting against a dark background. The overall mood is one of abundance and revelry, drawing on classical mythological themes.

Created by claude-3-opus-20240229 on 2025-01-31

The image depicts a shirtless man with short dark hair sitting at a table with an assortment of fruit, including grapes, a pineapple, oranges, and a small jug or glass. The man has a serious expression and is holding a glass in one hand. The background is dark, contrasting with the light tones of the table cloth and fruit. The image has an artistic, painted quality to it, likely created using watercolors or a similar medium. The overall composition centers the male figure amidst the vibrant still life arrangement.

Created by claude-3-haiku-20240307 on 2025-01-31

The image depicts a shirtless male figure posing with a large bowl of assorted fruits, including grapes, oranges, and pineapple. The figure has a serious expression and is adorned with a wreath of greenery on his head. Behind the figure, there is a bottle of wine or liquor. The overall composition and style suggest this is an artistic or promotional image, possibly for food, wine, or some other product.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-11

The image depicts a man sitting at a table, surrounded by a bowl of fruit and a bottle of wine. He is shirtless, with a white cloth draped over his shoulders, and has a wreath of leaves and grapes on his head. In his right hand, he holds a glass of red wine, while his left hand rests on his stomach. The table is covered with a white cloth, and a bowl filled with various fruits, including grapes, oranges, and a pineapple, sits in front of him. A bottle of wine with a purple label is placed to his left.

The background of the image is black, which creates a dramatic contrast with the vibrant colors of the fruits and the man's attire. The overall atmosphere of the image suggests a sense of celebration or festivity, possibly a toast to good health or prosperity. The use of grapes and wine as symbols of abundance and joy adds to the festive tone of the image.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-11

This image is a painting of a man sitting at a table with a bowl of fruit and a bottle of wine. The man is shirtless, wearing a white robe draped over his left shoulder, and has a wreath of grapes on his head. He holds a glass of wine in his right hand and rests his left hand on the table.

The table is covered with a white cloth, and the bowl of fruit sits in front of the man. The bowl contains various fruits, including grapes, oranges, and a pineapple. A bottle of wine sits to the left of the bowl, and some grapes are scattered on the table.

The background of the painting is dark, which helps to focus attention on the man and the fruit. The overall atmosphere of the painting is one of luxury and abundance, as the man enjoys the fruits of his labor. The use of rich colors and textures adds depth and warmth to the painting, making it feel inviting and engaging.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-31

The image is a painting of a man holding a wine glass, with a bowl of fruits and a bottle on a table. The man is wearing a wreath of leaves on his head. He is holding a wine glass with his right hand, and his left hand is on his hip. The bowl of fruits contains grapes, oranges, and a pineapple. The bottle is placed on the left side of the bowl.

Created by amazon.nova-lite-v1:0 on 2025-01-31

The image features a man who is sitting on a table with a white cloth. He is wearing a white toga and a wreath of green leaves on his head. He is holding a glass of wine in his right hand. In front of him is a bowl filled with various fruits, including grapes, pineapple, and oranges. There is also a purple bottle on the left side of the table. The image has a black border around it.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-04-28

Here is a description of the image:

The image is a colorful print featuring a man in a classical, possibly mythological, setting. He is the focal point, positioned in the center with the background entirely dark.

- The Man: He is fair-skinned, and has dark hair with a wreath of green leaves and purple grapes adorning it, giving him a Dionysian or Roman god appearance. He is wearing a white cloth draped over his shoulders, leaving one shoulder bare. He holds a glass of red wine in his right hand, offering it, and gazes toward the viewer.

- The Still Life: In front of him is a table covered in a tablecloth, on which sits a cornucopia of fruit. This includes a pineapple, grapes, and two oranges. Beside the fruit, there is a purple bottle.

- Style and Color: The print has a distinct, artistic feel with bold colors. The use of colors, with the purple, greens, and the man's skin tones contrasting against the dark background, creates a vibrant effect.

The overall composition suggests a scene of celebration or abundance, possibly with references to classical antiquity, wine culture, or hedonism.

Created by gemini-2.0-flash on 2025-04-28

The image is a painting or print depicting a man in a classical or Bacchic style. The man is fair-skinned, with dark hair and is adorned with a wreath of grapes and leaves. He's wearing a white garment that drapes over his shoulder, partially revealing his chest.

In his right hand, he holds a glass of red wine, and his left hand rests on his stomach. In front of him is a wooden bowl filled with various fruits including grapes, oranges, and a pineapple. A dark purple bottle is to his left, and a bunch of grapes spills out of the bowl onto the white cloth beneath. The background is dark, which makes the man and the objects in the foreground stand out.

The overall composition evokes a sense of classical indulgence and relaxation, reminiscent of scenes from ancient mythology or art.