Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 18-24 |
| Gender | Female, 96.5% |
| Calm | 23.9% |
| Sad | 20.1% |
| Angry | 18.2% |
| Surprised | 17.9% |
| Disgusted | 14.7% |
| Fear | 9.7% |
| Confused | 3.2% |
| Happy | 1.1% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Adult | 98.5% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 99.7% |

Captions

Microsoft

Created on 2018-12-20

| Caption | Confidence |
|---|---|
| a person and a book | 49.4% |
| a person looking at a book | 49.3% |
| a person holding a book | 49.2% |

OpenAI GPT

Created by gpt-4 on 2025-02-20

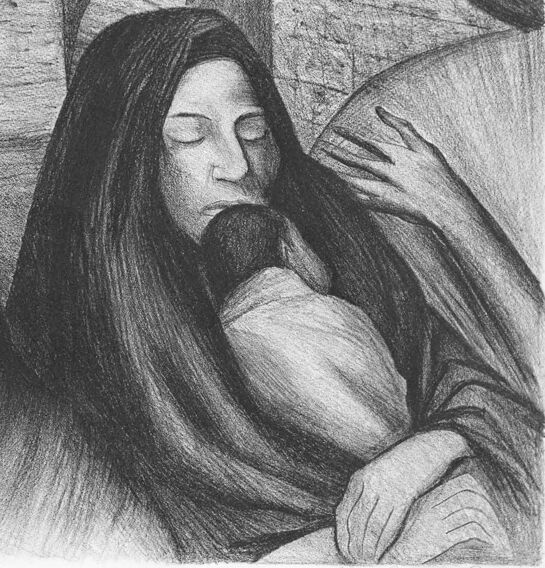

The image depicts a black and white pencil drawing of two individuals, likely female, situated close to each other. They appear to be seated, based on the presence of what looks like an armrest or a portion of the furniture visible in the drawing. The subjects have long hair resting over their shoulders. The person on the left seems to be slightly leaning towards the right. The one on the right is resting their chin on their hand, suggesting a contemplative or relaxed posture. The artist has signed the artwork in the lower right corner. The background is lightly sketched, offering minimal context, which keeps the focus on the subjects themselves.

Created by gpt-4o-2024-05-13 on 2025-02-20

The image is a monochromatic drawing, likely done in pencil or charcoal, that features two figures draped in heavy, textured fabrics. One of the figures has long, flowing hair partially covered by the fabric, while the other appears to be wearing a hood or cloak. The background has an abstract, shadowy quality, with rough, angled lines suggesting architectural elements or a textured wall. In the bottom right corner of the image, there is a signature which reads "First Annual Drawing." The overall mood of the image is somber and introspective.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-02-20

The image appears to be a pencil drawing depicting two women in a pensive, somber mood. The figures have long, dark hair and appear to be embracing or comforting each other, with one woman holding the other. The background is abstract and textured, with a sense of depth and movement. The overall tone of the image is melancholic and introspective, conveying a sense of emotional connection and shared experience between the two individuals.

Created by claude-3-opus-20240229 on 2025-02-20

The image depicts two women sitting or lying down, drawn in a sketchy, expressive style using pencil or charcoal. The shading is rough and textured, giving the figures a sculptural, three-dimensional quality. The women have long dark hair and appear to be resting or sleeping, with their eyes closed. The composition has an intimate, peaceful feeling, capturing a quiet moment between the two figures. The drawing style has an Impressionistic or Modernist sensibility in its loose, gestural handling of the forms and shading.

Created by claude-3-5-sonnet-20241022 on 2025-02-20

This appears to be a black and white pencil drawing depicting two figures with long dark hair. The artwork has a somber, contemplative quality, with both figures shown with closed eyes in what seems to be a moment of rest or reflection. The drawing technique employs strong shading and contrast, creating depth and texture, particularly in the rendering of the hair and clothing. The composition is intimate and the style appears to be influenced by social realism or similar artistic movements that emphasize human subjects in an emotionally resonant way.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-13

The image is a black and white sketch of a woman holding a baby, with another woman sitting next to them. The woman on the right is holding a baby in her arms, and the woman on the left is sitting next to her. Both women are wearing long robes and have their heads covered. The background of the image is a stone wall with arches. The overall atmosphere of the image is one of serenity and tranquility, with the woman and baby appearing to be in a peaceful state.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-13

The image is a black and white pencil drawing of two women, one holding a baby. The woman on the left has her eyes closed and is wearing a headscarf. The woman on the right is also wearing a headscarf and has her eyes closed as she holds the baby to her chest. The background of the image appears to be a wall or other structure, but it is not clearly defined. The overall atmosphere of the image is one of serenity and intimacy, with the two women and the baby forming a close and peaceful group.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-20

The image is a black-and-white drawing of two women sitting close to each other. The woman on the right is holding a baby and is looking down with her eyes closed. The woman on the left is looking down with her eyes closed as well. The drawing has a white border and is in a monochromatic color scheme. The image has a vintage or antique look, possibly from the early 20th century.

Created by amazon.nova-pro-v1:0 on 2025-02-20

In a black-and-white drawing, two women are sitting on a couch. One of them is holding a baby. The woman on the left is looking down, while the woman on the right is looking at the baby with her eyes closed. She is holding the baby with her right hand. The woman on the left is wearing a scarf around her neck. Behind them, there is a blurry background.