Machine Generated Data

Face analysis

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Possible |
| Headwear | Very likely |
| Blurred | Very unlikely |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 72.2% |

Captions

Microsoft

Created on 2018-04-19

| Caption | Confidence |
|---|---|
| a close up of a book | 48.1% |
| close up of a book | 41.5% |
| a close up of a book cover | 41.4% |

OpenAI GPT

Created by gpt-4 on 2024-12-10

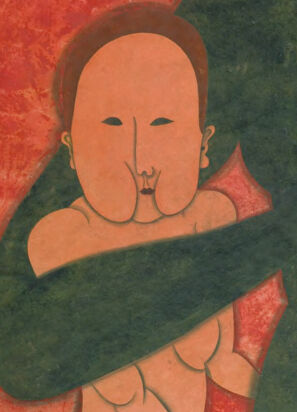

This image depicts a painting of three figures with a vivid red background. The central figure appears to be an adult wearing a dark green garment. To the left and right of the central figure are two smaller figures, resembling children, also wearing garments of a similar dark green shade. One of these figures is using their hands in a manner that seems like playful interaction or a form of childish mimicry. The adult figure's right hand is placed on the shoulder of one of the smaller figures, indicating a possible connection or relationship between them, such as a family unit. The outlines and details of the painting are done with a simplified, stylized approach, not focusing on realistic portrayal but rather on the form and potential emotional connection between the figures. The work has a sense of depth achieved through the use of shading and highlights. The style of the painting suggests it may be influenced by primitivism or modernist art movements.

Created by gpt-4o-2024-05-13 on 2024-12-10

This image appears to be a stylized painting depicting a person holding two children. The person is wearing a dark-colored garment with long sleeves that extend to hold the children. One child, positioned on the left, is clinging to the person's arm, while the other child, on the right, is being held up, with one of their hands reaching towards the person. The background is composed of a rich, textured red hue, creating a striking contrast with the dark clothing of the central figure and the lighter tones of the children’s skin.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-10

The image appears to be a painting depicting a central figure with a somber expression, surrounded by two smaller figures. The central figure is shown in a green dress, with their hands raised in a gesture that suggests contemplation or prayer. The two smaller figures have similar stylized facial features and expressions. The overall color palette is dominated by shades of red, orange, and green, creating a vivid and emotive atmosphere.

Created by claude-3-5-sonnet-20241022 on 2024-12-10

This is a modernist or expressionist style painting depicting what appears to be a mother with two children. The artwork uses a bold color palette dominated by a deep red background and a dark green dress on the central figure. The figures are rendered in a simplified, stylized manner with elongated faces and minimal details. The painting has a flat, two-dimensional quality typical of modernist art. The central figure's hands are raised in a gesture that could suggest prayer or protection. The composition creates a sense of intimacy between the three figures, while the stark color contrasts and simplified forms give the work a powerful emotional quality.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-10

The image depicts a painting of a woman holding two babies. The woman has dark hair and is wearing a dark green dress. She is holding a baby on her left side, who is also wearing a dark green dress, and another baby on her right side, who is not wearing any clothes. The background of the painting is red. The painting is in the style of Pablo Picasso, with bold lines and simple shapes. The overall effect is one of simplicity and elegance, with the bold colors and clean lines creating a sense of clarity and focus. The use of simple shapes and bold lines gives the painting a sense of modernity and freshness, while the use of red as the background color adds a sense of warmth and energy to the piece.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-10

The image is a painting of a woman holding two babies. The woman has dark hair and is wearing a dark green dress. She is holding one baby in each arm, with her hands placed on their chests. The babies are both naked and have their eyes closed. The background of the painting is a muted red color. The painting appears to be in the style of Pablo Picasso, with bold lines and simple shapes used to create the figures. The overall effect is one of simplicity and elegance, with a focus on the relationships between the three figures.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-24

The image features a painting of a woman with two children. The woman has a serious expression and is holding both children with her arms. The children are positioned on either side of her, and they are both looking up at her. The woman's hair is tied back in a bun, and she is wearing a green dress. The background of the painting is a deep red color, and there is a watermark in the bottom right corner.

Created by amazon.nova-pro-v1:0 on 2025-02-24

The painting depicts a woman holding two babies in her arms. The woman is wearing a green dress and has black hair. She is looking at something in front of her. The babies are wearing earrings. The baby on the left is looking at something, and the baby on the right is raising its hands. The background is red. The painting is framed in a white border.