Machine-Generated Data

Tags

Color Analysis

Face Analysis

Amazon

AWS Rekognition

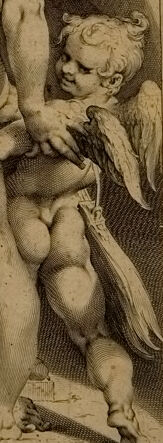

| Attribute | Estimate |
| --- | --- |
| Age | 10-20 |
| Gender | Male, 94.2% |
| Surprised | 0.1% |
| Disgusted | 0% |
| Angry | 4.2% |
| Fear | 0.2% |
| Calm | 94.5% |
| Happy | 0.3% |
| Sad | 0.6% |
| Confused | 0.1% |

Feature Analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Painting | 99.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 72.1% |
| streetview architecture | 26.6% |

Captions

Microsoft

Created on 2019-10-30

| Caption | Confidence |
| --- | --- |
| a vintage photo of a book | 57.2% |
| a vintage photo of a person holding a book | 46.4% |
| a vintage photo of a person | 46.3% |

OpenAI GPT

Created by gpt-4 on 2025-01-30

This image appears to be an engraving or etching of classical or mythological inspiration. It has a dramatic composition with dynamic figures arranged diagonally in action poses. The central figure is a muscular male with curly hair, conveying a sense of movement and intensity, his body twisting as he reaches upward. There are also several other figures which are part of the scene; they are also depicted in dynamic postures and appear to be interacting with the central figure and with one another. The figures are rendered with attention to detail, highlighting musculature and facial expressions, suggestive of a scene of struggle or combat. In the background, two winged creatures are depicted; one appears to be a cherub or putto, and the other looks like a bird, perhaps a swan, descending towards the figures below it. Above the heads of the figures, a swath of drapery adds to the scene's dramatic tension. The bottom of the image contains two lines of Latin text, indicating that the image likely has a significant narrative or allegorical meaning, which was common in classical and Renaissance-inspired art pieces. The style of the engraving, the use of shading and cross-hatching to create depth, and the handling of anatomical detail indicate that this is a work from an era when classical revivals were popular, potentially around the Renaissance or a neoclassical period thereafter.

Created by gpt-4o-2024-05-13 on 2025-01-30

The image is an intricate and expressive depiction in a classic, detailed style. It shows a scene with dynamic, intertwined human figures that exhibit a high level of muscularity and movement. Several of the figures are in dramatic poses, some seemingly in flight or suspended in the air. The scene has a classical, mythological feel, with elements such as flowing drapery and a sense of motion throughout. Additionally, there are birds at the bottom of the image, adding to the narrative or symbolic content. The artwork is monochromatic with finely executed shading and textures. There are also inscriptions in Latin at the bottom of the image, suggesting a connection to classical literature or mythology.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-01-30

This appears to be a classical or baroque-era engraving depicting a mythological scene. The composition shows several nude figures in dynamic poses, including winged cherubs or putti. The artwork includes dramatic drapery and flowing fabric elements. At the bottom of the image are fallen or flying birds, and there's Latin text inscribed beneath the scene. The engraving technique uses cross-hatching and fine lines to create depth and shadow, typical of the period's artistic style. The overall scene appears to be depicting some form of allegorical or mythological narrative, possibly related to Bacchus or Venus, as suggested by the Latin inscription. The image has a sepia or brownish tone, characteristic of historic prints and engravings.

Created by claude-3-opus-20240229 on 2025-01-30

The image depicts a detailed engraving or etching showing a dramatic mythological scene. Multiple nude or partially nude figures are intertwined in a chaotic, dynamic composition. Winged figures, likely representing cupids or putti, fly above the tangled bodies below. The figures seem to be wrestling or battling, with their limbs entangled. Some appear to be wielding weapons like bows and arrows. The poses convey a sense of violent struggle and agitation. Below the main visual, there is text in Latin that likely provides context for the mythological subject matter being portrayed, though I cannot translate it directly. The fine linework and shading give the print an impressive level of detail and volume. Overall, it is an elaborate, energetic Baroque composition depicting a tumultuous scene from Classical mythology or literature, skillfully rendered through printmaking techniques. The artist demonstrates great technical ability in portraying the human figures and their dynamic interactions.

Created by claude-3-haiku-20240307 on 2025-01-30

The image appears to be a complex, intricate engraving depicting a mythological or allegorical scene. It features numerous nude figures, both male and female, in dynamic poses. The central figure seems to be a male figure, surrounded by other nude figures and various decorative elements like drapery and cherub-like figures. The overall composition is richly detailed and evocative of Baroque or Rococo artistic styles. Without identifying any individuals, the image presents a visually striking and imaginative depiction of a mythological or symbolic subject.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-13

The image is a sepia-toned engraving of a scene depicting a man being attacked by two cherubs, with a third cherub flying above him. The man is nude and appears to be struggling against the cherubs, who are holding him down and attempting to restrain him. One cherub is holding a knife to the man's throat, while the other is grasping his arm. The background of the image is dark and shadowy, with some indistinct figures visible in the distance. The overall atmosphere of the image is one of chaos and turmoil, with the man's struggles against the cherubs creating a sense of tension and drama. The use of sepia tones adds a sense of age and weariness to the image, suggesting that it may be an older work or a reproduction of an earlier piece. The image appears to be a representation of a mythological or allegorical scene, possibly depicting the struggle between good and evil or the power of desire and passion. The cherubs, often associated with divine or supernatural forces, are depicted as being in control of the situation, while the man is shown as being helpless against their attacks. Overall, the image is a powerful and evocative representation of a dramatic scene, with the use of sepia tones and the depiction of the cherubs adding to its sense of age and mystique.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-13

The image is a sepia-toned illustration of a mythological scene, featuring a central figure being attacked by various creatures. The central figure appears to be a man with wings, possibly an angel or a demon, who is being assaulted by a group of smaller winged creatures. These creatures are depicted as having human-like bodies with wings and tails, and they seem to be attacking the central figure with weapons such as swords and spears. In the background, there are several other figures that appear to be watching the scene unfold. These figures are not clearly defined, but they seem to be standing on a rocky outcropping or a cliff, looking down at the central figure and the attacking creatures. At the bottom of the image, there is a line of text in an old-fashioned font. This text appears to be in Latin, and it may provide some context or explanation for the scene depicted in the image. However, without further information or translation, it is difficult to determine the exact meaning or significance of the text. Overall, the image appears to be a depiction of a mythological or allegorical scene, possibly representing a struggle between good and evil or a battle between different forces or entities. The use of sepia tones and the old-fashioned font suggest that the image may be a reproduction or adaptation of an older work of art, possibly from the Renaissance or Baroque period.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-30

The image is a black-and-white engraving that depicts a mythological scene with multiple figures and elements. At the center, there is a large, muscular figure with a human upper body and a lower body that appears to be a mix of human and animal, possibly a satyr or a faun. This central figure is being embraced by a winged female figure, possibly a nymph or a muse, who is holding a lyre. Surrounding this central pair are several other figures, including winged infants or putti, who are engaged in various activities such as playing instruments, holding drapery, or interacting with the central figures. The background features flowing drapery and clouds, suggesting a heavenly or ethereal setting. The engraving is detailed, with intricate line work that highlights the musculature and textures of the figures, as well as the decorative elements like the drapery and musical instruments. There are also inscriptions at the bottom of the image, which appear to be in Latin, adding to the classical theme of the artwork.

Created by amazon.nova-lite-v1:0 on 2025-01-30

The image is a monochromatic engraving by Johann Muller, also known as Wolter, depicting a scene with multiple figures. The central figure is a large, muscular man, likely representing Hercules, who is engaged in a struggle with a smaller, winged figure, possibly representing the god Bacchus. The scene is set against a backdrop of drapery and has a dramatic, almost theatrical quality. There are two cherubs, one holding a bow and the other holding a key, and a bird flying above them. The engraving is accompanied by Latin inscriptions, which add to the historical and mythological context of the image.