Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 17-29 |
| Gender | Female, 50.2% |
| Disgusted | 45% |
| Happy | 45% |
| Calm | 45% |
| Fear | 45.2% |
| Surprised | 45% |
| Confused | 45% |
| Angry | 45.1% |
| Sad | 54.6% |
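The emotion scores in the face-analysis table above sit close together, so the leading label is only weakly supported. The following is a minimal sketch, assuming the scores are available as a plain mapping, of how one might pick the leading label and flag a narrow lead. The `emotions` dictionary, the `top_label` helper, and the 10-point margin are all hypothetical illustrations and are not part of AWS Rekognition.

```python
# Scores copied from the face-analysis table; the dictionary layout is an
# illustrative assumption, not the service's actual response format.
emotions = {
    "Disgusted": 45.0,
    "Happy": 45.0,
    "Calm": 45.0,
    "Fear": 45.2,
    "Surprised": 45.0,
    "Confused": 45.0,
    "Angry": 45.1,
    "Sad": 54.6,
}


def top_label(scores, min_margin=10.0):
    """Return (label, score, is_clear) for the highest-scoring label.

    is_clear is True only when the winner leads the runner-up by at
    least min_margin percentage points (a hypothetical threshold).
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    (best, best_score), (_, second_score) = ranked[0], ranked[1]
    return best, best_score, (best_score - second_score) >= min_margin


label, score, is_clear = top_label(emotions)
print(label, score, is_clear)  # prints: Sad 54.6 False
```

With these values "Sad" leads "Fear" by about 9.4 points, which falls below the assumed 10-point margin, so the sketch reports the result as not clear-cut.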

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 92.4% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 73.8% |
| streetview architecture | 25.9% |

Captions

Microsoft

Created on 2019-08-10

| Caption | Confidence |
|---|---|
| a close up of a book | 49.3% |
| close up of a book | 43.3% |
| a close up of a book cover | 43.2% |

OpenAI GPT

Created by gpt-4 on 2025-02-08

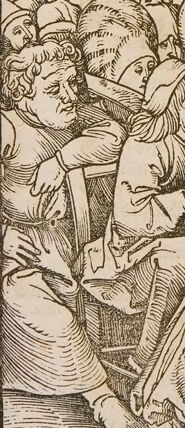

This is an illustration in the style of a woodcut or engraving, characterized by its detailed line work and lack of color. The scene is crowded with figures, all of whom appear to be drawn in period attire that suggests the image may have been produced in the late Medieval or Renaissance era. Various individuals are depicted with halos, suggesting they are saints or figures of religious significance. The composition is dense, with many characters interacting closely with one another. The foreground features a number of individuals engaged in what appears to be a meaningful activity or event, while the background includes architectural elements like archways and structural beams. The intricate linework, the distribution of light and shadow, and the expressive postures of the characters all contribute to the narrative quality of the scene.

Created by gpt-4o-2024-05-13 on 2025-02-08

This is a detailed woodcut illustration featuring a religious scene. The composition includes a group of figures gathered closely together, many of whom have halos, suggesting their holy status. The architecture in the background includes an arched doorway through which a figure can be seen, and the setting appears to be inside a building with a high ceiling and wooden beams. To the right, a person is holding a large book. The intricate designs and careful attention to detail are typical of woodcuts from the medieval or Renaissance period.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-02-08

This appears to be a medieval or Renaissance-era woodcut print depicting a religious scene. The artwork shows a crowded gathering indoors, with many figures wearing robes and some with halos indicating their holy status. In the background, there's an arched window or doorway with a smaller scene visible within it. The main composition shows a large group of people gathered around a central figure who appears to be teaching or preaching. The figures are rendered in the characteristic linear style of woodcut prints, with parallel lines creating shading and texture. The clothing and architectural details suggest this is likely a biblical or religious historical scene. It's executed in a single color (sepia or brown tone) as was typical for woodcut prints of this period.

Created by claude-3-opus-20240229 on 2025-02-08

This woodcut image depicts a crowded interior scene, likely a church or cathedral, filled with many figures. In the background, there are large arched windows or openings. In the foreground, a group of people, including what appear to be religious figures or clergy members in robes, are gathered together. Some figures have distinct facial features and expressions. The composition has a sense of depth with the receding arches and the crowd extending back into the space. The linework is detailed and textural, giving the scene an energetic, bustling feel. At the top, there is an inset illustration showing a different scene with figures interacting.

Created by claude-3-haiku-20240307 on 2025-02-08

The image appears to depict a religious or biblical scene, showing a large crowd gathered around a figure who appears to be Jesus Christ or another religious leader. The figure is surrounded by people, some of whom seem to be reaching out to him or seeking his attention. The image has a stylized, woodcut-like illustration style typical of religious art from earlier historical periods. While there are many people shown, the image does not identify any specific individuals.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-16

The image is a woodcut print of a scene from the Bible, specifically depicting the moment when Jesus heals a man with a withered hand. The scene is set in a crowded room, with Jesus standing at the center, wearing a long robe and a halo. He has long hair and a beard, and his right hand is raised as if he is about to touch the man's hand.

To the left of Jesus, there is a group of people gathered around the man with the withered hand. They are all looking at Jesus with a mixture of hope and fear in their eyes. The man himself is kneeling on the ground, his right hand outstretched towards Jesus.

In the background, there is a large stone wall with an arched doorway. Through the doorway, you can see a group of people standing outside, watching the scene unfold. The overall atmosphere of the image is one of drama and tension, as Jesus prepares to perform a miracle that will change the lives of those present.

The style of the image is reminiscent of 16th-century woodcuts, with intricate details and textures that give it a sense of depth and dimensionality. The use of shading and perspective creates a sense of volume and space, drawing the viewer's eye into the scene.

Overall, the image is a powerful representation of the story of Jesus healing the man with the withered hand, and it serves as a reminder of the transformative power of faith and compassion.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-16

The image depicts a scene from the Bible, specifically Jesus Christ preaching to a crowd of people. The scene is set in a church or temple, with Jesus standing at the front and addressing the crowd.

Key Elements:

- Jesus Christ: Jesus is depicted as a central figure, standing at the front of the crowd and addressing them. He is shown wearing a long robe and has a halo around his head, symbolizing his divinity.

- Crowd: The crowd is composed of various individuals, including men, women, and children. They are all facing Jesus and appear to be listening intently to his words.

- Church or Temple: The background of the image shows a church or temple, with arches and columns visible. The architecture suggests a sense of grandeur and reverence.

- Lighting: The lighting in the image is soft and warm, with a golden glow emanating from the top-left corner. This creates a sense of warmth and comfort, emphasizing the spiritual nature of the scene.

Artistic Style:

- Woodcut: The image appears to be a woodcut, a type of printmaking technique that involves carving designs into wood blocks. The lines and textures in the image are characteristic of woodcuts, with bold lines and intricate details.

- Medieval Influence: The image also shows influences from medieval art, with its use of symbolism, archaic clothing, and architectural styles. The overall aesthetic is reminiscent of illuminated manuscripts and other medieval artworks.

Conclusion:

In conclusion, the image depicts a powerful scene from the Bible, with Jesus Christ preaching to a crowd of people in a church or temple. The use of woodcut techniques and medieval influences creates a unique and captivating visual style, emphasizing the spiritual significance of the scene.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-08

The image is a monochromatic drawing of a church scene. The scene depicts a group of people gathered in a church. In the center, a man wearing a robe and a halo is standing behind a podium, possibly delivering a sermon. On the left side, there is a crowd of people, some of whom are wearing hats, while others are kneeling. On the right side, there is a man with a beard and a candle on a stand.

Created by amazon.nova-lite-v1:0 on 2025-02-08

The image is a black-and-white engraving that depicts a religious scene. In the foreground, a priest stands at a pulpit, delivering a sermon to a crowd of people. The priest is wearing a long robe and a halo, symbolizing his holiness. The crowd is seated on the floor, with some individuals standing and listening intently. One person in the crowd is depicted kneeling and looking up at the priest, possibly in a state of prayer or deep contemplation. The scene is set in a church, with a candle on a stand in the background, adding to the religious atmosphere. The engraving captures a moment of spiritual significance, with the priest imparting knowledge and guidance to the faithful.