Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 22-34 |
| Gender | Male, 80.9% |
| Angry | 0.7% |
| Disgusted | 0.5% |
| Sad | 30% |
| Surprised | 1% |
| Fear | 2% |
| Calm | 3.3% |
| Happy | 0.1% |
| Confused | 62.5% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 98.5% |

Categories

Imagga

| Category | Confidence |
|---|---|
| events parties | 77.9% |
| people portraits | 13.1% |
| paintings art | 7.7% |

Captions

Microsoft

Created on 2019-08-10

| Caption | Confidence |
|---|---|
| a group of people posing for a photo | 82.3% |
| a group of people posing for the camera | 82.2% |
| a group of people posing for a picture | 82.1% |

OpenAI GPT

Created by gpt-4 on 2025-02-12

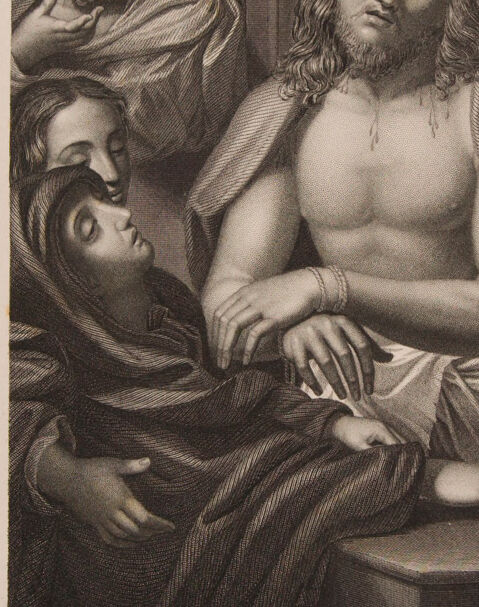

This is a black and white print depicting three figures that appear to be in classical or religious garb. The central figure is partially draped in a cloth, revealing a bare upper body, and is seated with hands resting gently on an object in front. The two other figures are draped more fully in cloth and are turned towards the central figure, suggesting a scene of interaction or conversation. The print has a formal composition with a balance of light and dark areas, and the folds of the cloth on each figure are rendered in intricate detail to suggest volume and texture. There is text at the bottom of the print that indicates it may be from a book or a collection, suggesting it might be tied to a story or historical narrative.

Created by gpt-4o-2024-05-13 on 2025-02-12

The image is a detailed, monochromatic engraving depicting three figures in classical attire. The central figure is shirtless, wearing a crown of thorns, and has their hands bound together by rope. This individual appears to be seated on a bench or low platform. The two surrounding figures are dressed in robes, and one of them is holding the hand of the central figure. The image has a textured and shaded style, creating a realistic and dramatic effect. The engraving's focus on the body language and attire suggests a scene of significance, likely of historical or religious context.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-02-12

This is a historical religious engraving depicting a scene from Christian art, specifically appearing to be a moment from the Passion of Christ. The central figure wears a crown of thorns and is shown bare-chested with a cloth around the waist. The composition includes several other figures in period clothing, including one wearing a turban. The artwork appears to be rendered in a classical style, likely from the Renaissance period or after, and is executed in black and white using engraving techniques. The scene conveys a solemn, dramatic moment with careful attention to shadow, texture, and emotional expression. The technical quality of the engraving shows fine detail and skilled craftsmanship typical of religious art from this era.

Created by claude-3-opus-20240229 on 2025-02-12

The image depicts a religious scene showing Christ being mocked. In the center is a figure representing Jesus Christ, portrayed shirtless with a crown of thorns on his head. He is surrounded by three other men who appear to be ridiculing or tormenting him. The man on the left wears a turban-like headwrap, the one on the right has a beard, and the third man's face is partially obscured as he looks down at Christ. The drawing is done in a detailed, classical style with shading to create depth and volume in the figures and drapery. It conveys a somber and tense atmosphere, capturing this moment from the Biblical passion narrative.

Created by claude-3-haiku-20240307 on 2025-02-12

The image depicts a religious scene with several figures. In the center is a depiction of Jesus Christ, shown shirtless and wearing a crown of thorns. Two older bearded figures stand on either side of him, one appearing to gesture towards him. Lying at Christ's feet is a figure, likely representing a religious or symbolic figure. The overall scene conveys a sense of reverence and religious significance.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-15

The image depicts a black and white engraving of a scene from the Bible, specifically the moment when Jesus Christ is being taken down from the cross. The engraving is titled "Jesus Taken Down from the Cross" and is attributed to the artist William Dyce. In the foreground, Jesus is shown with his head bowed and his eyes cast downward, as he is being supported by two men on either side of him. One of the men is holding Jesus' right arm, while the other is supporting his left leg. A woman is also present in the scene, kneeling beside Jesus and holding his right hand. She appears to be mourning or praying. The background of the engraving is dark and shadowy, with a few faint details visible. The overall atmosphere of the image is one of sadness and reverence, capturing the emotional intensity of the moment when Jesus is being taken down from the cross. The engraving is rendered in a detailed and realistic style, with intricate textures and shading that give the image depth and dimension. The use of black and white creates a sense of contrast and drama, emphasizing the emotional impact of the scene. Overall, the image is a powerful and moving depiction of a pivotal moment in Christian history, conveying the sense of loss and sorrow that accompanied Jesus' crucifixion.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-15

The image depicts a poignant scene from the Bible, specifically the moment when Jesus Christ is being prepared for crucifixion. The central figure, Jesus, sits on a stool with his arms resting on his lap, wearing a crown of thorns and a cloth draped over his shoulders. His long hair and beard are visible, and he appears to be looking directly at the viewer. To the left of Jesus, a woman is shown holding his hand, while two men stand behind him. One of these men is touching Jesus' shoulder, conveying a sense of compassion and support. The background of the image features a dark wall with vertical lines, which adds to the somber mood of the scene. The overall atmosphere of the image is one of reverence and contemplation, inviting the viewer to reflect on the significance of this moment in Christian history. The use of muted colors and the focus on the central figures create a sense of intimacy and solemnity, drawing the viewer's attention to the emotional weight of the scene.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-12

The image is a black-and-white engraving of a scene from the biblical story of Jesus Christ. It depicts Jesus, crowned with thorns, seated on a stool. His expression is serene and composed, despite the evident pain and suffering he is enduring. His hands are bound with a rope, and he is looking down with a calm and introspective demeanor. Behind him, there are two figures, one on each side, who are observing him. The figure on the left is wearing a turban, while the figure on the right has a more elaborate headdress. In the foreground, there is a woman lying on the ground, her head resting on Jesus' lap. She appears to be in a state of distress or sorrow, with her eyes closed and her body positioned in a way that suggests she is seeking comfort or solace from Jesus. The overall atmosphere of the image is somber and reflective, capturing a moment of profound emotional and spiritual significance.

Created by amazon.nova-lite-v1:0 on 2025-02-12

The image is a black-and-white drawing of a scene depicting Jesus Christ, who is seated and wearing a crown of thorns on his head. He is surrounded by three figures, one of whom is a man wearing a turban and holding a cloth over his face, while the other two are wearing helmets. The drawing is titled "Ecce Homo" and was engraved by W. H. Eggleton. The image is framed in a white border with a black border around it.