Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Age | 20-38 |
| Gender | Female, 91.5% |
| Calm | 7.1% |
| Disgusted | 2.3% |
| Angry | 3.8% |
| Confused | 5.3% |
| Surprised | 2.9% |
| Sad | 40.2% |
| Happy | 38.3% |

Feature analysis

Amazon

| Person | 99.2% |

Categories

Imagga

| paintings art | 99.7% |

Captions

Microsoft

created on 2019-08-06

| a group of people standing in front of a book | 63.2% |
| a group of people looking at a book | 63.1% |
| a group of people standing next to a book | 63% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-02-08

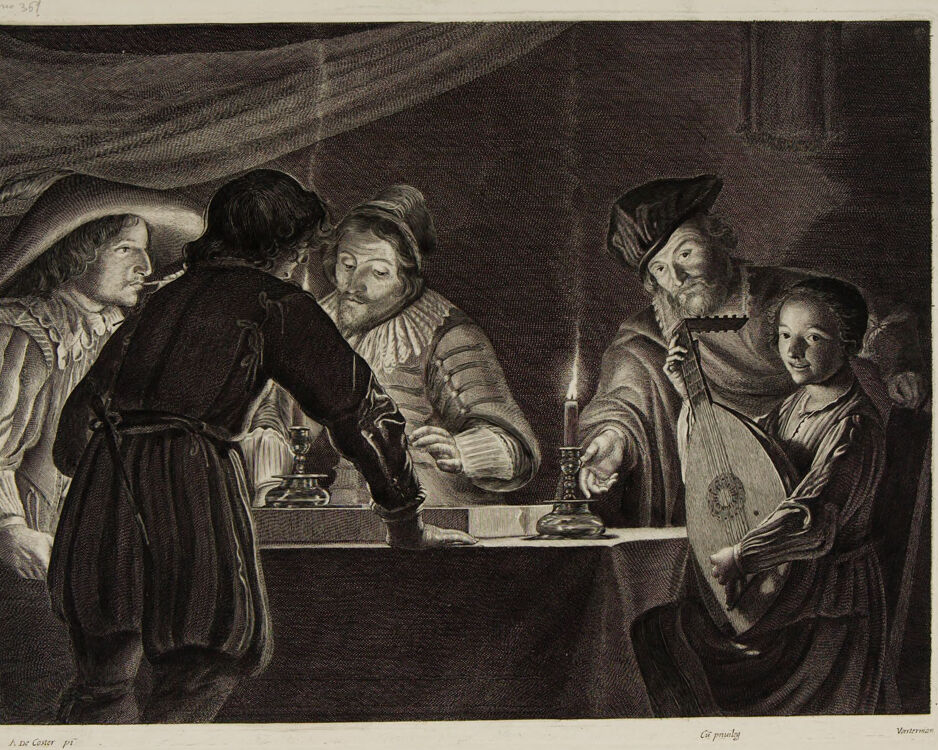

The image depicts an intricate scene with several individuals dressed in elaborate, historical costumes, gathered around a table. The setting appears to be lit by candlelight, adding a dramatic and intimate ambiance. One person is engaged in playing a lute, suggesting a musical or celebratory gathering. The detailed clothing and the presence of props like the candle and the musical instrument point to a scene set in a historical or renaissance period.

Created by gpt-4 on 2025-02-08

This is an image of an etching or engraving depicting what appears to be a scene from the past, as indicated by the period clothing and the use of a candle for illumination. One figure is holding a stringed musical instrument, which resembles a lute, suggesting that music is part of this scene. The etching style provides detailed textures, especially noticeable in the fabrics and the depiction of light and shadow, contributing to the dramatic atmosphere of the scene. There's a sense of intimacy and social interaction suggested by the close gathering around the table. The artwork seems to be from a collection, as indicated by the labeling and numbering in the corners of the paper.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-02-08

This is a historical engraving depicting a candlelit scene with several figures in period clothing from what appears to be the 17th century. The composition shows a group gathered around a table, with dramatic lighting from a single candle creating strong contrasts of light and shadow. The figures are wearing typical Baroque-era attire including ruffs (elaborate collars), wide-brimmed hats, and flowing robes. On the right side, someone is holding what appears to be a lute or similar stringed instrument. The scene has a theatrical quality to it, suggesting it might be depicting a moment from a play or a social gathering of the period. The artwork demonstrates skilled use of chiaroscuro (light and dark contrast) technique common in art of this era.

Created by claude-3-opus-20240229 on 2025-02-08

The image depicts a candlelit scene with four male figures gathered around a table. They appear to be dressed in 17th century Dutch or Flemish clothing, with ruffled collars, doublets, and feathered caps or hats. The men seem engaged in conversation or discussion, with thoughtful or pensive expressions on their faces. The dark, shadowy background created by the chiaroscuro lighting adds a dramatic, contemplative mood to the composition. Based on the clothing and artistic style, this engraving or etching is likely from the 1600s and portrays a gathering of Dutch or Flemish gentlemen of that era.

Created by claude-3-haiku-20240307 on 2025-02-08

The image appears to depict a scene from the past, likely in a dimly lit interior setting. Several individuals in period attire are gathered around a table, including men in formal dress and a young child. One man appears to be reaching towards another, who is seated at the table, possibly engaged in some kind of ceremonial or scholarly activity. The scene has a somber, contemplative atmosphere, conveyed through the use of chiaroscuro lighting and the serious expressions of the figures.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-17

The image is a black-and-white engraving of a scene from the 17th century, possibly depicting a gathering or meeting. The engraving shows a group of people in various poses, with some seated and others standing. The atmosphere appears to be formal, with the individuals dressed in attire typical of the period. In the foreground, a man is prominently featured, wearing a hat and holding a candle. He is positioned in front of a table, where a candlestick and a book are placed. To his left, another man is seated, looking at the first man with interest. Behind them, a woman stands, holding a lute or a similar stringed instrument. The background of the engraving is dark, with some indistinct figures visible in the shadows. The overall mood of the image is one of contemplation and discussion, with the individuals engaged in conversation or perhaps listening to music. The engraving is signed by the artist, "Rembrandt," indicating that it is a work by the famous Dutch painter and etcher Rembrandt van Rijn. The image is likely a reproduction of one of his engravings, which were highly regarded for their detail and emotional depth. Overall, the image presents a captivating glimpse into the social and cultural life of 17th-century Europe, showcasing the artistry and skill of Rembrandt's work.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-17

The image is a black and white drawing of a group of people gathered around a table. The scene appears to be set in the 17th century, based on the clothing and hairstyles of the individuals depicted. In the foreground, there are five people visible. On the left side of the image, a man wearing a wide-brimmed hat and a long coat is standing with his back to the viewer. He is holding a pipe in his mouth and appears to be engaged in conversation with another man seated at the table. The man seated at the table is wearing a ruffled collar and a long-sleeved shirt. He has a beard and is looking at the man standing next to him. To his right, there is an older man with a long white beard, wearing a hat and a cloak. He is holding a lute and appears to be playing it. On the far right side of the image, there is a woman sitting at the table, wearing a dress and a head covering. She is looking at the man playing the lute. The background of the image is dark, suggesting that the scene is taking place indoors, possibly in a tavern or a private residence. The overall atmosphere of the image is one of conviviality and social interaction, with the individuals depicted appearing to be enjoying each other's company. The image is signed by the artist, "Rembrandt," in the bottom-right corner, indicating that it is a work by the Dutch master Rembrandt van Rijn. The style of the drawing is consistent with Rembrandt's known works, which often featured scenes of everyday life and social gatherings.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-08

The image is a black-and-white print of a painting by the Dutch Golden Age painter, Gerard van Honthorst. The painting depicts a scene with five individuals, four men and one woman, engaged in a moment of interaction. The scene is set indoors, with a table at the center. The table is adorned with a candle in a stand, casting light on the scene. The man on the left is wearing a hat and is leaning over the table, possibly examining or handling an object. Next to him, another man is seated, wearing a cap and a collared shirt, looking towards the man on the left. The man in the middle is also seated, wearing a hat and a long-sleeved shirt, and he is looking towards the man on the left as well. The man on the far right is standing, holding a musical instrument, possibly a lute, and is also wearing a hat. The woman, seated next to the man on the right, is holding a musical instrument as well, possibly a viol, and is looking towards the man on the left. The image has a signature at the bottom left corner and a label at the bottom right corner.

Created by amazon.nova-lite-v1:0 on 2025-02-08

The image is a black-and-white drawing that depicts a group of people gathered around a table. The drawing appears to be an old print, possibly from the 19th century. The people in the drawing are dressed in period clothing, suggesting that the scene is set in a historical context. The central figure in the drawing is a man wearing a hat and holding a candle, while another man on the right side of the drawing is holding a lute. The man on the left side of the drawing is holding a glass, and there is a table with a candle and a book in the foreground. The drawing has a watermark in the bottom left corner, which reads "A. de Coster pi."