Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 48-66 years |
| Gender | Male, 50.5% |
| Sad | 49.5% |
| Surprised | 50.2% |
| Happy | 49.5% |
| Fear | 49.5% |
| Calm | 49.6% |
| Disgusted | 49.5% |
| Angry | 49.6% |
| Confused | 49.5% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Painting | 99.5% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 92.8% |
| streetview architecture | 4.2% |
| nature landscape | 1.5% |

Captions

Microsoft

Created on 2019-11-05

| Caption | Confidence |
|---|---|
| a group of people in an old photo of a person | 75.7% |
| a group of people standing in front of a building | 75.6% |
| an old photo of a group of people in a room | 75.5% |

OpenAI GPT

Created by gpt-4 on 2025-02-01

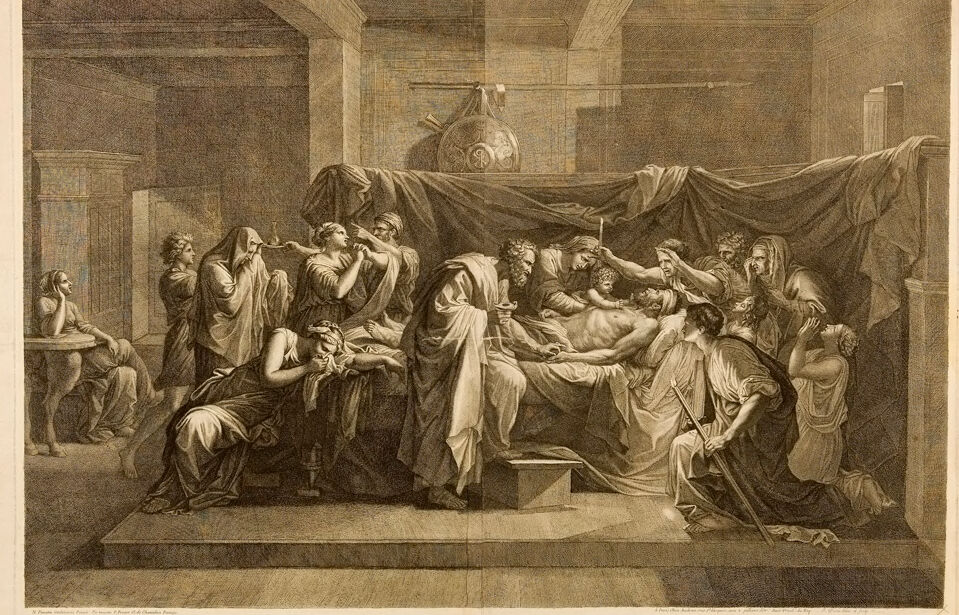

This image is a classical-style engraving depicting a scene with multiple figures gathered around a central reclining character, who is prominently displayed and appears to be in a state of distress or suffering. The attire and environment suggest an ancient or classical setting. The characters are dressed in draped clothing typical of classical antiquity, possibly Roman or Greek.

The scene is framed by architectural elements, including columns and an open structure allowing a view into a darker interior space. There is a sense of drama and attention focused on the central figure by the other characters, who display various expressions and poses indicative of concern, contemplation, or engagement in some ritualistic or significant action.

There are also objects that contribute to the setting's authenticity, such as a shield, a spear, and what seems to be a large pot or vessel on the left. The figures exhibit a range of emotions and are engaged in an event that is clearly of great significance to them. The image conveys a strong narrative and is likely to be an interpretation or representation of a specific historical, mythological, or literary moment.

Created by gpt-4o-2024-05-13 on 2025-02-01

The image depicts an intricately detailed, classical-style scene with numerous figures gathered around a central figure lying on a bed. This central figure lies in a horizontal position, seemingly asleep or deceased, with a shroud partially draped over his body. Surrounding him, other figures are engaged in various acts of mourning, prayer, or anointment. The room in which the scene takes place appears stately, with elegant drapery and pillars, suggesting an important or solemn event. The overall atmosphere is somber and reverent, with each character appearing deeply immersed in the moment. The composition and style suggest it is an artwork reminiscent of historical or religious themes.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-02-01

This appears to be a historical engraving or print depicting what seems to be a religious or ceremonial scene. The image shows multiple figures in classical robes gathered around what appears to be a bed or table with someone lying upon it. The composition is set in an interior space with columns and drapes hanging in the background. The style suggests this is likely from the 18th or 19th century.

The scene has a dramatic quality, with the figures showing emotional expressions and gestures. There's a Latin inscription at the bottom of the print which reads "OBVNT SVPER EVM VNGENTS EVM OLEO IN NOMINE DOMINI," which appears to reference anointing with oil in the name of the Lord.

The artwork uses classical artistic techniques with careful attention to light and shadow, drapery, and architectural details. The figures are arranged in a way that creates a sense of movement and emotional intensity around the central action of the scene.

Created by claude-3-opus-20240229 on 2025-02-01

This image depicts a classical scene with several human figures gathered together. In the center, a figure lies on a bed or platform, appearing to be ill or deceased. Surrounding this central figure are other people in robes and drapery, some reaching out towards the central figure while others gesture or look on mournfully. The setting has classical architecture in the background with columns and arched ceilings. The title indicates this scene represents the "Death of Germanicus", likely referring to the Roman general Germanicus who died in 19 AD. The shading and linework give the drawing a somber, dramatic tone fitting the subject matter of a notable death in ancient Rome.

Created by claude-3-haiku-20240307 on 2025-02-01

The image appears to depict a dramatic and sorrowful scene. It shows a large group of figures gathered around a central figure, likely a deceased individual, who is lying on a bed or platform. The figures surrounding the central figure are expressing grief and mourning, with some appearing to be in distress or anguish. The scene is set in an interior space, with ornate architectural details and furnishings visible in the background. The overall tone of the image is somber and contemplative.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-15

The image depicts a scene from ancient Greece, with a group of people gathered around a bed. The scene is set in a room with stone walls and a high ceiling, and the atmosphere is somber and mournful.

- A group of people are gathered around a bed, where a man lies on his back, covered by a sheet.

- The man is shirtless and has a bandage wrapped around his head.

- He appears to be in pain or distress.

- The people surrounding the bed are dressed in ancient Greek clothing, with togas and robes.

- Some of them are kneeling or sitting beside the bed, while others stand behind it.

- They all seem to be focused on the man on the bed.

- In the background, there is a large stone column and a doorway leading to another room.

- The column is decorated with intricate carvings, and the doorway is framed by an archway.

- The room beyond the doorway is dimly lit, but it appears to be a private chamber or study.

- The overall atmosphere of the image is one of sadness and loss.

- The people gathered around the bed seem to be mourning the man's passing or illness.

- The somber colors and serious expressions of the figures contribute to the sense of gravity and solemnity.

In summary, the image depicts a poignant scene from ancient Greece, where a group of people gather around a bed to mourn the passing or illness of a man. The scene is set in a somber and dignified atmosphere, with the figures dressed in traditional Greek clothing and the background featuring a large stone column and a doorway leading to another room.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-15

The image depicts a sepia-toned illustration of a group of people gathered around a bed, with one person lying on it. The scene appears to be set in an ancient Greek or Roman setting, with the individuals dressed in traditional attire from that era.

Key Elements:

- A group of people are gathered around a bed, with one person lying on it.

- The scene is set in an ancient Greek or Roman setting, with the individuals dressed in traditional attire from that era.

- The atmosphere suggests a sense of mourning or lamentation, as the people surrounding the bed appear to be grieving or paying their respects to the person lying on it.

- The overall mood of the image is somber and reflective, evoking a sense of reverence and respect for the deceased.

Conclusion:

The image presents a poignant and evocative scene, capturing a moment of collective grief and remembrance in an ancient cultural context. The use of sepia tones adds a sense of nostalgia and timelessness to the image, emphasizing the universal human experience of loss and mourning.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-01

The image is a black-and-white print of a painting. It depicts a scene of people in a room. The room has a ceiling and walls, and a large cloth is draped over the center of the room. The people are gathered around the cloth, and some are standing, while others are sitting on the floor. Some are holding objects, while others are looking at the cloth. The image has a watermark on the bottom right corner.

Created by amazon.nova-lite-v1:0 on 2025-02-01

The image depicts a scene from a historical or mythological context, possibly from ancient Greece or Rome. The central focus is a group of individuals gathered around a bed or altar, engaged in a ritual or ceremony. Some of them are lying down, while others are standing or kneeling, indicating a solemn and reverent atmosphere. The individuals are dressed in ancient Roman or Greek attire, with togas and other traditional garments. The setting appears to be an indoor space, possibly a temple or a sacred chamber, with architectural elements such as columns and a high ceiling visible in the background. The overall mood of the image is one of reverence, solemnity, and perhaps a sense of awe or mystery surrounding the event taking place.