Machine-Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 21-33 |
| Gender | Female, 54.7% |
| Angry | 45% |
| Surprised | 45% |
| Disgusted | 45% |
| Confused | 45% |
| Calm | 49.1% |
| Sad | 45% |
| Fear | 45% |
| Happy | 50.9% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 98.9% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 97.6% |
| pets animals | 1.9% |

Captions

Microsoft

Created on 2019-11-09

| Caption | Confidence |
|---|---|
| an old photo of a book | 48.6% |
| a close up of a book | 47.4% |
| an old photo of a person | 47.3% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-02-05

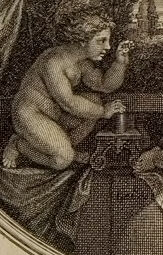

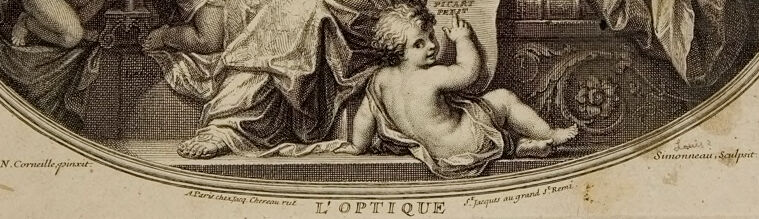

The image is an engraving titled "L'Optique," depicting an allegorical scene of optics. At the center, a seated female figure holds a telescope up to her eye, symbolizing the study of sight or vision. She is surrounded by four cherubs; one holds scientific instruments, another points towards a book labeled "Physica," and the others interact with the scene by holding drapery or similar elements. The backdrop showcases a natural setting with trees and an architectural structure, possibly a castle or large building, under a cloudy sky. The overall composition emphasizes the themes of vision and enlightenment.

Created by gpt-4 on 2025-02-05

This is an image of an oval-shaped engraving that features a classical scene. The artwork is rich in detail and includes draped fabric elements, giving it a theatrical appearance. Text at the bottom of the image suggests that it is related to "optics," and the people who likely produced the image are credited in the lower left and right corners, as indicated by terms like "sculpsit," denoting the engraver, and "pinxit," denoting the painter. In the background, there is a landscape with a structured building resembling a palace or a classical temple. The composition and attire of the figures, along with the nature of the scene, are emblematic of Neoclassical or Renaissance artwork, which often draws on mythology or allegory for subject matter.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-02-05

This is a classical engraving titled "L'Optique" (The Optics), created by Simonneau based on a painting by N. Coypel. The oval-framed artwork depicts an allegorical scene featuring a central female figure holding what appears to be a telescope. She is surrounded by cherubic figures in various poses. The scene includes elaborate drapery and architectural elements in the background, with a classical building visible in the distance. The composition is done in a Baroque style with dramatic lighting and flowing forms. The engraving technique shows fine line work typical of 18th-century printmaking. The overall theme relates to optical science or the study of vision, as suggested by both the title and the telescope prop.

Created by claude-3-opus-20240229 on 2025-02-05

This black-and-white etching or engraving depicts an allegorical scene with several mythological figures. In the center, a female figure reclines while holding an arrow or dart. She is surrounded by cherubic infants or putti, who are engaged in various playful activities such as handling bows and arrows. The scene is set in an interior space with drapery and architectural elements visible in the background. The overall composition has an ornate, baroque style typical of 18th-century French printmaking. Below the image is a caption that reads "L'OPTIQUE".

Created by claude-3-haiku-20240307 on 2025-02-05

This image appears to be an engraved illustration depicting a mythological or allegorical scene. It shows a composed, reclining female figure surrounded by smaller nude figures, possibly representing allegorical or mythological characters. The background includes a landscape with architectural elements. The overall style and subject matter suggest this is an artistic, rather than a photographic, representation.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-16

The image depicts an antique engraving of a woman surrounded by cherubs, titled "L'Optique" (Optics). The woman is seated on a throne-like structure, holding a telescope in her right hand and a book in her left. She wears a flowing robe and has a crown on her head. The cherubs are arranged around her, some playing musical instruments, while others appear to be engaged in various activities. The engraving is oval-shaped, with a decorative border surrounding the scene. The background features a landscape with buildings and trees, set against a cloudy sky. The overall atmosphere of the image is one of elegance and refinement, with the woman and cherubs conveying a sense of sophistication and learning. The title "L'Optique" suggests that the engraving may be related to the study of optics or astronomy, and the presence of the telescope and book supports this interpretation. The image appears to be a representation of the scientific and intellectual pursuits of the time, with the woman and cherubs embodying the ideals of knowledge and discovery. Overall, the image is a beautiful and intricate example of antique engraving, showcasing the artistic and cultural values of the period in which it was created.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-16

The image is a vintage illustration of a woman surrounded by cherubs, with the title "L'OPTIQUE" at the bottom. The woman sits on a throne-like chair, holding a telescope in her right hand and a scroll in her left. She wears a flowing robe and has her hair styled in an updo. To her left are two cherubs, one playing with a globe and the other holding a book. On her right side, another cherub is depicted, while a fourth cherub sits at her feet, looking up at her. The background features a landscape with trees, buildings, and a cloudy sky. The illustration is rendered in black ink on beige paper, with a circular border around the central image. The overall atmosphere suggests a scene from classical mythology or allegory, possibly representing the pursuit of knowledge or scientific discovery.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-05

The image is an antique drawing of a woman holding a telescope, accompanied by four children. The woman is sitting on a stone bench with a child on her lap. She holds the telescope in her right hand and looks through it. The child on her lap is holding a book. The other three children are sitting on the bench on either side of the woman. The drawing is in black and white.

Created by amazon.nova-lite-v1:0 on 2025-02-05

This image depicts a black-and-white drawing of a woman sitting on a stone bench with three children. The woman is holding a telescope in her right hand and looking upward, possibly observing something. The children are sitting on the bench with her, and one of them is holding a book. The drawing is framed with a golden border and has the words "L'Optique" written in the center.