Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-42 |
| Gender | Female, 54.9% |
| Fear | 45% |
| Happy | 45% |
| Surprised | 45% |
| Calm | 54.1% |
| Sad | 45.7% |
| Angry | 45% |
| Disgusted | 45% |
| Confused | 45% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 99.1% |

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 61.4% |
| interior objects | 32.1% |
| paintings art | 5.9% |

Captions

Microsoft

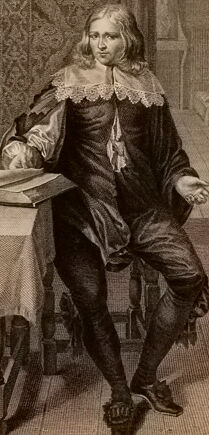

Created on 2019-11-10

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people in a room | 93.9% |
| a vintage photo of a person | 89.6% |
| a vintage photo of a boy | 70.3% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-02-06

The image depicts an intricately detailed interior scene, likely from the Renaissance or Baroque period, featuring ornate woodwork and patterned wallpaper. On the left, there is a table with various items including books, a globe, and a small human figure statue. This suggests an environment associated with study or scholarly pursuits. In the center of the image, a man stands gesturing, while to the right, a woman is seated beside a musical instrument that appears to be a virginal or harpsichord. A small dog rests on a stool beside her. The room is decorated with several framed paintings, and there is a prominent arched doorway in the background, adding to the sophisticated atmosphere of the setting.

Created by gpt-4 on 2025-02-06

This is an image of a historical interior scene in an elaborate room. On the left side of the image, there is a table covered with various papers and a large globe, which suggests a space used for study or navigation. A small statue can also be seen on the table. The room features elaborate damask wall coverings and framed artwork hanging above ornate wainscoting. A large window is partially open, allowing light to flood the space and offering a view of the architecture outside. On the right side of the image, there is a desk with an open drawer revealing papers inside. Above the desk, a mirror reflects a white statue and other items, while a tapestry depicting a pastoral scene with horses hangs on the adjacent wall. There's a cushioned chair nearby, and a small dog is comfortably sleeping on another cushion on the floor. The high level of detail in the furnishings and the overall atmosphere of the room suggest the setting is one of wealth and education, possibly in the 17th or 18th century. The architecture and furnishings indicate a European context, highlighting a Baroque or Renaissance style.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-02-06

This is a historical engraving depicting an elegant interior scene, likely from the 17th century. The room shows two figures dressed in period clothing with lace collars and fine fabrics. One appears to be standing by a table with books and a globe, while another is positioned near what appears to be a harpsichord or similar keyboard instrument. The room is decorated with ornate wallpaper patterns and framed landscape paintings on the walls. There's a decorative archway in the background, and the overall composition suggests this might be a scene of scholarly or musical activity among the upper class. A small dog can be seen resting on a cushioned stool. The style and details of the engraving are typical of formal artistic documentation of indoor scenes from this historical period.

Created by claude-3-opus-20240229 on 2025-02-06

The image depicts an interior scene from the past, likely from the 17th or 18th century based on the clothing and furnishings. The room features ornate wallpaper or tapestries with detailed patterns. There are two well-dressed individuals, a man and a woman, standing in the room. The man has his hand on a globe placed on a wooden table. The woman is standing near a harpsichord or early piano. There are also a small dog and a cat in the room. The overall scene suggests an upper-class domestic interior from several centuries ago.

Created by claude-3-haiku-20240307 on 2025-02-06

The image depicts an interior scene with two figures dressed in 17th or 18th century attire. One figure, a woman, is standing at a desk and appears to be working or studying. The other figure, a man, is standing near her and observing. The room is ornately decorated with tapestries, paintings, and architectural details, suggesting a wealthy or noble setting. There are various objects scattered around the room, including a globe and what appears to be a small sculpture. The overall atmosphere conveys a sense of scholarly or artistic pursuit within an elegant, historic environment.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-13

The image depicts a scene of a man and woman in a room, with the man sitting at a desk and the woman standing nearby. The man is dressed in 17th-century attire, wearing a dark-colored suit with lace trim on his collar and cuffs, and has long hair. He is holding a quill pen in his right hand and appears to be writing or drawing on a piece of paper. The woman is also dressed in 17th-century clothing, wearing a long dress with a high neckline and a full skirt. She has long hair and is standing near a table with a box on it, which may contain some sort of instrument or tool. There is a small dog lying on the floor next to her. The room is decorated with various items, including a globe, a statue, and several paintings on the walls. The walls are adorned with intricate patterns and designs, and there is a large mirror hanging above the fireplace. The overall atmosphere of the image suggests a sense of elegance and refinement, with the two figures engaged in intellectual or artistic pursuits.

In terms of visual content, the image features a range of textures and colors, including the smooth surface of the desk, the rough texture of the wood floor, and the vibrant colors of the paintings on the walls. The lighting in the image is soft and diffused, with a warm glow emanating from the fireplace.

Overall, the image presents a serene and contemplative scene, with the two figures lost in thought as they engage in their respective activities. The image invites the viewer to step into the world of the 17th century and experience the beauty and sophistication of that era.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-13

The image is a sepia-toned illustration of a man and woman in a room with various objects, including books, a globe, and a dog. The man is standing at a table, while the woman is seated at a desk. The room has a fireplace and a doorway leading to another room. The overall atmosphere suggests a scene from the 17th or 18th century, possibly depicting a scholarly or intellectual setting.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-06

The image is a black-and-white engraving of a room with two people inside. The man is sitting on a chair, and the woman is standing near the piano. There is a dog sitting on a chair next to the piano. There is a globe, a clock, and some books on the table. There is a statue of a naked man on the table. The walls have several pictures and a large arched doorway.

Created by amazon.nova-lite-v1:0 on 2025-02-06

The image depicts a historical scene set in a room with an elegant and scholarly atmosphere. In the foreground, a man sits on a chair, holding a book and pointing towards it, suggesting he is engaged in reading or discussing the contents. Beside him is a table with a globe, an hourglass, and some books, indicating a scholarly or intellectual setting. A woman stands beside a desk, which features a painting and a dog lying on a cushion. The room has ornate wallpaper and paintings on the walls, contributing to a refined and cultured ambiance. The setting appears to be from a period where such scholarly pursuits were highly valued.