Machine Generated Data

Face analysis

Amazon

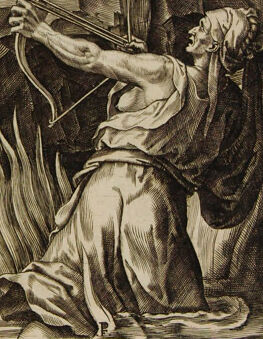

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 25-35 |
| Gender | Male, 99.2% |
| Sad | 100% |
| Surprised | 6.3% |
| Fear | 6% |
| Calm | 0.5% |
| Disgusted | 0.4% |
| Angry | 0.2% |
| Confused | 0.2% |
| Happy | 0% |

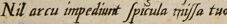

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 31-41 |
| Gender | Male, 100% |
| Calm | 100% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0% |
| Disgusted | 0% |
| Confused | 0% |
| Happy | 0% |

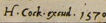

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 25-35 |
| Gender | Male, 93.1% |
| Calm | 84.9% |
| Surprised | 8.2% |
| Fear | 7.3% |
| Sad | 3.9% |
| Confused | 1.8% |
| Disgusted | 1% |
| Angry | 0.6% |
| Happy | 0.3% |

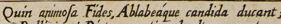

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-31 |
| Gender | Male, 99% |
| Calm | 71.7% |
| Sad | 13.3% |
| Surprised | 7.8% |
| Fear | 6.8% |
| Disgusted | 3.9% |
| Confused | 3.2% |
| Angry | 2.2% |
| Happy | 0.5% |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 88.1% |
| beaches seaside | 7.5% |
| streetview architecture | 3.4% |

Captions

Microsoft

created on 2019-02-18

| Caption | Confidence |
| --- | --- |
| a vintage photo of a person holding a book | 46.6% |
| a vintage photo of a person | 46.5% |
| a vintage photo of a book | 46.4% |