Machine-Generated Data

Tags

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-38 |
| Gender | Male, 54.1% |
| Sad | 45.7% |
| Calm | 53.9% |
| Angry | 45.1% |
| Confused | 45.1% |
| Disgusted | 45.1% |
| Happy | 45% |
| Surprised | 45.1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Painting | 88.7% |

Categories

Imagga

Created on 2018-04-19

| Category | Score |
|---|---|
| paintings art | 97.9% |
| streetview architecture | 1.4% |
| nature landscape | 0.6% |

Captions

Microsoft

Created by unknown on 2018-04-19

| Caption | Confidence |
|---|---|
| a close up of a book | 62.2% |
| close up of a book | 57.1% |
| a hand holding a book | 57% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-11

a photograph of a drawing of a woman sitting on a cloud

Created by general-english-image-caption-blip-2 on 2025-06-28

a drawing of the virgin and child with angels

OpenAI GPT

Created by gpt-4 on 2024-12-11

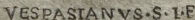

The image is an engraving that depicts a seated figure wearing robes, with multiple small cherub-like figures surrounding the central figure. The figure is seated on a cloud-like formation at the lower portion of the image, and one of the cherubs appears to be alighting on this cloud. The background is filled with rays emanating from behind the central figure, suggesting a divine or heavenly setting. At the bottom of the artwork, there is a caption reading "VESPASIANVS·S·I·E·". The classical style of the engraving, along with the subject matter, suggests it may be of religious or mythological significance, drawing upon Christian or classical iconography.

Created by gpt-4o-2024-05-13 on 2024-12-11

The image is a detailed engraving depicting a seated figure holding a baby. Both figures seem to be sitting on clouds, suggesting a celestial or divine setting. Surrounding them are multiple angelic figures, also positioned on the clouds, some with their heads bowed and others gazing towards the central figures. There is an inscription at the bottom left reading "VESPASIANVS. S.I.F." The overall scene exudes a sense of holiness and serenity.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-25

This is a historical engraving or etching signed by "VESPASIANVS" that appears to be religious in nature. The artwork shows a central figure seated on clouds, surrounded by cherubs or putti (small angelic figures) floating in the sky. The main figure is depicted in flowing robes in a classical style, and the composition has a heavenly or divine atmosphere created by the billowing clouds and the presence of the cherubic figures. The artwork demonstrates fine line work and cross-hatching typical of Renaissance or Baroque period engraving techniques. The overall tone is ethereal and spiritual, with the figures arranged in a dynamic upward-reaching composition.

Created by claude-3-haiku-20240307 on 2024-12-11

The image appears to be an engraved portrait depicting a woman seated on a cloud or mound, surrounded by angelic figures. She is wearing ornate, flowing robes and holding a young child in her arms. The background is filled with swirling clouds and rays of light emanating from above, creating a sense of divinity and transcendence. The engraving style suggests this is an artistic depiction from an earlier period, likely the 17th or 18th century.

Created by claude-3-5-sonnet-20241022 on 2024-12-11

This is a religious etching or engraving, likely from the Renaissance period, showing a woman and child seated on clouds in a heavenly scene. The artwork is rendered in a classical style with fine line work and cross-hatching techniques. Several cherubs or putti (baby angels) float around them in the clouds, and there appears to be a cherub's head at the bottom of the composition. The woman wears flowing robes and is depicted in a serene, maternal pose. The image is signed "VESPASIANVS" at the bottom, indicating its creator. The overall composition creates a peaceful, ethereal atmosphere typical of religious art from this period.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-11

The image depicts a drawing of the Virgin Mary holding the infant Jesus, surrounded by angels and clouds. The Virgin Mary is seated on a cloud, with the infant Jesus in her lap. She wears a long robe and has her right arm wrapped around the child, while her left arm is extended outward. The infant Jesus is dressed in a simple tunic and has his right hand resting on the Virgin's shoulder.

Above the Virgin and child, there are several angels depicted in various poses, some of whom are playing musical instruments or singing. The background of the image features a light-colored sky with rays of light emanating from behind the Virgin's head, symbolizing divine light or the Holy Spirit.

At the bottom of the image, there is an inscription that reads "VESPASIANVS S.I.F.", which is likely the artist's signature or a reference to the patron who commissioned the work. The overall atmosphere of the image is one of serenity and reverence, capturing a moment of quiet devotion between the Virgin Mary and the infant Jesus.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-11

The image depicts a vintage-style drawing of the Virgin Mary holding the infant Jesus, surrounded by angels and cherubs. The drawing is rendered in black ink on a light-colored background, with intricate details and shading that give it a sense of depth and texture.

Key Elements:

- Virgin Mary: The central figure in the drawing, depicted as a woman with long hair and a flowing robe, holding the infant Jesus in her arms.

- Infant Jesus: The baby Jesus is shown as a small, naked figure with curly hair, being held by the Virgin Mary.

- Angels and Cherubs: Several angels and cherubs are depicted around the Virgin Mary and the infant Jesus, some of which are shown playing musical instruments or holding flowers.

- Clouds: The drawing features several clouds, which are depicted as soft, white masses that provide a sense of depth and atmosphere to the scene.

- Sunburst: A sunburst is visible in the background of the drawing, which adds to the overall sense of light and radiance.

Style and Technique:

- Cross-hatching: The drawing features extensive use of cross-hatching, which creates a sense of texture and depth.

- Shading: The artist has used shading to create a sense of volume and dimensionality in the figures and objects depicted in the drawing.

- Line work: The lines used in the drawing are bold and expressive, with a range of thicknesses and textures that add to the overall sense of detail and realism.

Overall Impression:

The image presents a serene and peaceful scene, with the Virgin Mary and the infant Jesus at its center. The surrounding angels and cherubs add a sense of joy and celebration, while the clouds and sunburst provide a sense of depth and atmosphere. The drawing's use of cross-hatching, shading, and line work creates a sense of texture and dimensionality, making it feel like a detailed and realistic representation of the scene.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-26

This image is a black-and-white drawing of the Virgin Mary and Jesus Christ. The Virgin Mary is seated on a cloud, holding Jesus Christ on her lap. Jesus Christ is looking up at his mother, and both of them are looking at the viewer. The Virgin Mary is wearing a long dress and a veil, while Jesus Christ is wearing a tunic. There are several angels surrounding the Virgin Mary and Jesus Christ, and the image is surrounded by a white border.

Created by amazon.nova-pro-v1:0 on 2025-02-26

The image depicts a black-and-white drawing of a woman sitting on a cloud with a child on her lap. The woman is holding the child with her right hand, while her left hand is placed on the child's shoulder. The child is looking down, and the woman is looking to the left. The woman is wearing a long dress, and the child is wearing a long-sleeved shirt. The drawing is surrounded by a border.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-18

The image is a detailed monochrome engraving, likely from the Renaissance period. The central focus is a woman, presumably the Virgin Mary, holding a young child, presumably Jesus. They are seated on a cloud-like formation, and radiating light surrounds their heads.

Around them, there are numerous cherubic figures, some with wings, creating a heavenly atmosphere. The style is characteristic of Renaissance art, with careful attention to detail in the drapery, facial expressions, and the overall composition. The artist's initials, "VESPASTIANVS S.I.F", are visible in the bottom left corner of the image, indicating the artist's name and perhaps the etching technique used. The overall impression is one of serenity and religious devotion.

Created by gemini-2.0-flash on 2025-05-18

Here is a description of the image:

The artwork is an etching featuring the Madonna and Child surrounded by various angels and clouds. The style suggests a classical or Renaissance influence.

In the center of the image, the Madonna is seated on a bank of clouds. She is holding the Christ Child, who is depicted as a small, nude infant. The Madonna's face is gentle, and she gazes out towards the viewer. The Child's posture is dynamic, with one arm outstretched, as if reaching.

The pair are enveloped by clouds. At the bottom of the image, the clouds appear dense and three-dimensional. Scattered above are various figures of angels. These angels are not uniform; some appear to be fully formed cherubs with wings, while others are simply faces with small wings.

A circle of radiant light surrounds the Madonna and Child, suggesting divine illumination. The etching is done with fine lines that create varying shades and textures, giving the composition depth and form.

At the bottom, there is an inscription: "VESPASIANVS·S·I·F·", suggesting that the artist may be someone named Vespasianus.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-31

The image is a classical engraving depicting a religious scene. The central figure is a woman, likely the Virgin Mary, holding a child, presumably Jesus. The woman is seated on a cloud, which is a common motif in religious art to signify divine or heavenly presence. She is dressed in flowing robes, and her hair is styled in a manner typical of classical art. The child is nestled close to her, and both figures are surrounded by cherubic angels, adding to the ethereal and sacred atmosphere of the scene.

In the background, there are several other figures, possibly saints or religious figures, also depicted on clouds. The overall composition is balanced and symmetrical, with the central figures drawing the viewer's attention. The engraving is detailed, with intricate lines and shading to create depth and texture.

At the bottom of the image, there is an inscription that reads "VESPASIANVS S-LE," which likely refers to the artist or engraver of the piece. The style and subject matter suggest that this is a work from the Renaissance or Baroque period, characterized by its focus on religious themes and classical aesthetics.

Qwen

No captions written