Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 38-59 |
| Gender | Male, 54.7% |
| Calm | 46.5% |
| Disgusted | 45.1% |
| Surprised | 45.2% |
| Confused | 45.1% |
| Sad | 45.3% |
| Angry | 45.2% |
| Happy | 52.7% |

Feature analysis

Amazon

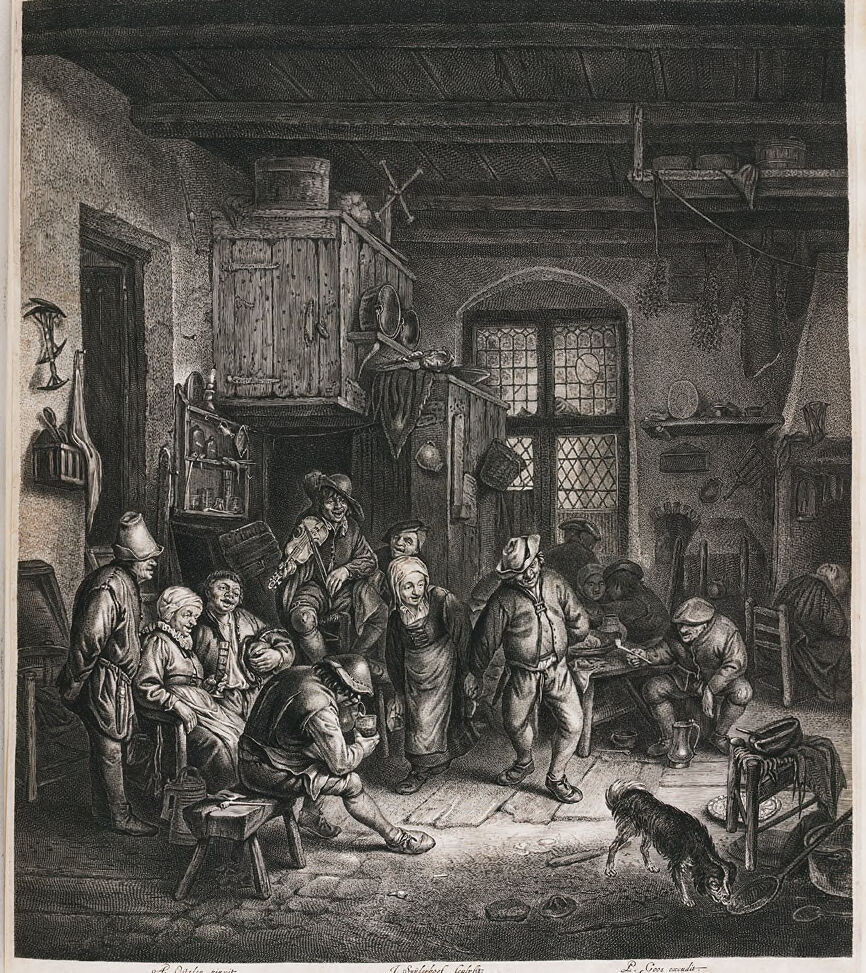

| Feature | Confidence |
| --- | --- |
| Painting | 99.3% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| streetview architecture | 99.5% |
| paintings art | 0.4% |
| nature landscape | 0.1% |
| interior objects | 0.1% |

Captions

Microsoft

Created on 2018-03-24

| Caption | Confidence |
| --- | --- |
| a vintage photo of an old building | 89.3% |
| a vintage photo of a group of people in a room | 89.2% |
| a vintage photo of some people | 89.1% |

OpenAI GPT

Created by gpt-4 on 2024-12-16

This is an etching depicting a scene inside a room that appears to be from a bygone era, possibly medieval or early modern. It shows a detailed interior with several individuals gathered around and engaging in what looks like a communal activity. The room is dark and furnished with period-appropriate elements such as wooden furniture, a cabinet, shelves with various items, and tools hanging from the wooden beams. An open door can be seen on the left, and there is a window with lattice work on the right, letting in some light. The floor is covered with what looks like straw, and there's a dog standing near the center of the room. The style of the etching is intricate, demonstrating a high level of craftsmanship and attention to detail that suggests the individuals are deeply immersed in a specific situation or event.

Created by gpt-4o-2024-05-13 on 2024-12-16

This image is an old-style black-and-white illustration depicting a busy interior scene, likely of a tavern or community gathering place. The room is filled with several people dressed in historical attire, engaged in different activities. Some are seated, some are standing, and one person is playing a violin-like instrument. The room's architecture includes wooden beams, a large window with latticework, and various items hanging on the walls, such as hats and tools. A dog is seen in the foreground near the bottom right, drinking from a bowl. The overall ambiance suggests a lively, social environment.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-16

The image is a detailed black and white engraving depicting a lively scene in what appears to be an interior room or tavern setting. The room is filled with a diverse group of people engaged in various activities - some are seated around a table, others are standing and conversing, and there are items and furnishings scattered throughout the space. The overall atmosphere suggests a busy, crowded, and somewhat chaotic environment. The intricate details and contrasting lights and shadows create a captivating and immersive visual experience.

Created by claude-3-5-sonnet-20241022 on 2024-12-16

This is a historical black and white engraving depicting a scene inside what appears to be a rustic tavern or inn. The interior shows several people gathered in a dimly lit room with leaded glass windows and wooden furniture. The scene appears to be from the 17th or 18th century, based on the clothing styles and setting.

The room is cluttered with various objects and furniture, including what appears to be a large wooden cabinet or storage unit against the wall. The ceiling has exposed beams, and there are various implements and items hanging on the walls. In the foreground, a dog can be seen sniffing at something on the floor.

The scene depicts what seems to be a social gathering, with people in period clothing interacting with each other. The artistic style is very detailed, showing fine line work characteristic of engravings from this period. The composition creates a sense of depth and atmosphere, with careful attention paid to light and shadow.

At the bottom of the image, there appear to be artist credits or signatures, suggesting this is a professionally produced artistic work, possibly for publication or collection.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-16

The image depicts a lively scene in a tavern, with a group of people gathered around a table. The atmosphere is lively and joyful, with the people laughing and chatting. The tavern is filled with various objects, such as a large wooden cabinet, a window with diamond-shaped panes, and a door with a cross-shaped window. The walls are adorned with various items, including a sword, a shield, and a helmet.

In the foreground, a dog is seen lying on the floor, adding to the sense of warmth and comfort in the scene. The overall mood of the image is one of conviviality and camaraderie, suggesting that the people in the tavern are enjoying each other's company and having a good time.

The image appears to be a painting or drawing, possibly created in the 17th century. The style is reminiscent of Dutch Golden Age art, with its attention to detail and use of chiaroscuro to create a sense of depth and volume. The image is likely a depiction of everyday life in a Dutch tavern during the 17th century, showcasing the social and cultural norms of the time.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-16

The image is a black-and-white illustration of a group of people in a room, possibly a tavern or inn. The scene appears to be from the 17th or 18th century, based on the clothing and architecture.

In the foreground, there are several people gathered around a table, some sitting and others standing. They are all dressed in traditional clothing, with men wearing hats and women wearing headscarves. One man is playing a musical instrument, possibly a lute or a violin.

To the left of the group, there is a large wooden cabinet or wardrobe, and above it, a window with a cross-shaped grille. On the right side of the image, there is a doorway leading to another room, and above it, a shelf with various objects, including a jug and a basket.

In the background, there are several other people, some of whom appear to be sleeping or resting. The walls of the room are made of stone, and the ceiling is low and vaulted. There is a dog in the foreground, and a cat can be seen in the background.

Overall, the image suggests a lively and bustling atmosphere, with people gathered together to socialize and enjoy each other's company. The illustration style is detailed and realistic, with a focus on capturing the textures and patterns of the clothing and furniture.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-28

The image is a black-and-white drawing depicting a group of people in a room. The room has a wooden ceiling, and there are various items on the shelves, including a basket, a plate, and a bowl. The room also has a window with a glass panel, and there is a door on the left side. The people in the room are dressed in traditional clothing, and some of them are sitting on stools while others are standing.

Created by amazon.nova-pro-v1:0 on 2025-02-28

The black-and-white image shows a group of people in a room. They are all wearing hats and shoes. Some are sitting on the floor, while others are standing. A dog is standing on the right side of the room. There is a cabinet with a lot of stuff on top. There is a window on the right side of the room. There are several objects hanging on the wall.