Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-66 |
| Gender | Female, 51.2% |
| Calm | 45.9% |
| Fear | 45% |
| Angry | 45% |
| Sad | 54% |
| Surprised | 45% |
| Disgusted | 45% |
| Happy | 45% |
| Confused | 45% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 98% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 98% |
| streetview architecture | 2% |

Captions

Microsoft

Created on 2020-04-24

| Caption | Confidence |
| --- | --- |
| a painting of an old building | 71.5% |
| an old photo of a painting | 65% |
| a painting on the side of a building | 63% |

OpenAI GPT

Created by gpt-4 on 2024-12-04

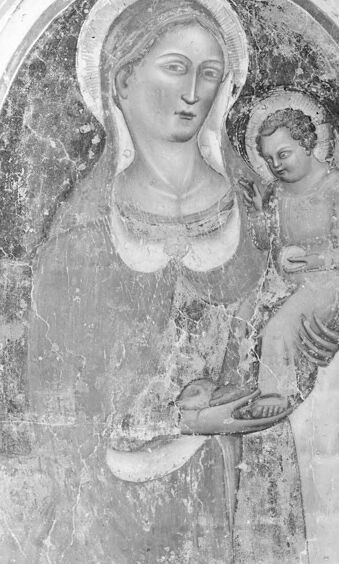

The image is a black and white photograph of a fresco depicting various figures that appear to have religious or historical significance. There are three distinct sections in the artwork:

1. The upper section contains figures that seem to be flying or floating, perhaps representing angels or other celestial beings. There are three visible figures in this area, each with unique postures and attributes, like wings.
2. The central section, resembling an arched alcove, shows a standing figure holding a small, nude child. The stance and presentation suggest a Madonna and Child motif, which is common in Christian iconography.
3. The lower section consists of two full-length figures in niches. Each figure is shown standing and facing forward, with one holding what seems to be a book, and they are positioned as if guarding or flanking the central scene.

To the left of this painting is a small panel with a number (type or handwritten), which is likely an inventory or catalog reference. The fact that the photograph is monochromatic suggests it might be of historical nature or taken before the widespread use of color photography. The image's age and the style of the artwork imply that the fresco could be from the medieval or Renaissance period.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This appears to be a medieval religious painting or fresco, likely from the Gothic period. The central panel shows Madonna and Child under an arched frame, with both figures depicted with halos. On either side of the central arch are smaller panels containing figures of saints or religious figures in robes. At the top of the composition, there are flying angels depicted in a symmetrical arrangement. The artwork shows signs of age and deterioration, visible in the cracking and fading of the surface. The image is in black and white, though the original work would likely have been in color. The composition follows traditional medieval artistic conventions, with formal, frontal positioning of the central figures and hierarchical scaling to emphasize their importance. The number "10" appears at the bottom of the frame, suggesting this might be part of a cataloged collection or series.

Created by claude-3-haiku-20240307 on 2024-11-27

The image appears to be a religious painting or fresco depicting the Virgin Mary and the Christ child. The central figure is a serene, maternal image of the Virgin Mary holding the infant Jesus. Above them are two angels, and on either side are two additional figures, possibly saints or religious figures. The overall composition has an ornate, architectural framing around the central scene, giving it a sense of grandeur and reverence. The style and subject matter suggest this is a work of religious artwork, likely from a church or sacred building.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-29

This image depicts a black-and-white photograph of a painting featuring the Virgin Mary and Jesus Christ, surrounded by angels and other figures. The central figure is the Virgin Mary, holding Jesus Christ in her arms. She wears a halo and a long dress, while Jesus is also adorned with a halo. Above them are two angels, one on each side, with outstretched wings. On either side of the Virgin Mary are two additional figures, each standing in an archway. The figure on the left is an older man with a long white beard, wearing a robe and holding a book. The figure on the right is a younger man dressed in a robe, holding a cross. The painting appears to be old and worn, with visible cracks and fading. It is set against a dark background, suggesting that it may be displayed in a museum or gallery setting. Overall, the image presents a serene and reverent scene, capturing the essence of Christian iconography.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-24

The image depicts a black-and-white photograph of an old painting, likely from the Renaissance period. The painting is rectangular and features a central figure of the Virgin Mary holding the infant Jesus, surrounded by two arches. The left arch contains a figure of Saint Joseph, while the right arch features a figure of Saint John the Baptist. Above the central figure, there are four angels depicted in flight, with two on each side. The painting appears to be in a state of disrepair, with visible signs of aging and damage. The surface is cracked and worn, with some areas showing significant deterioration. Despite this, the overall composition and detail of the painting remain intact, showcasing the skill and craftsmanship of the artist who created it. The background of the image is dark and indistinct, but it appears to be a room or studio where the painting is being displayed. There are some faint outlines of other objects or furniture in the background, but they are not clearly visible. Overall, the image provides a glimpse into the history and artistry of the Renaissance period, highlighting the beauty and significance of this particular painting.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-05

The image is a black-and-white photograph of a painting depicting a Madonna and Child with two angels. The painting is framed with a white border and has a number "10" in the bottom right corner. The central figure of the Madonna is seated, holding the infant Jesus on her lap, while two angels are positioned above her, one on either side. The painting appears to be an old artwork, with visible signs of wear and tear.

Created by amazon.nova-pro-v1:0 on 2025-01-05

The image is a black-and-white photograph of a religious painting. The painting features a woman holding a child. The woman is dressed in a long gown and has a halo above her head. The child is also dressed in a long gown and is holding a book. The woman and child are standing in front of a large, ornate archway. The archway is decorated with intricate designs and patterns. The painting is surrounded by a frame.