Machine-Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 36-54 |
| Gender | Male, 50.4% |
| Surprised | 45.4% |
| Confused | 45% |
| Happy | 45.3% |
| Fear | 45.1% |
| Sad | 45.1% |
| Calm | 53.9% |
| Angry | 45.1% |
| Disgusted | 45% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 86.4% |

Categories

Imagga

| Category | Confidence |
|---|---|
| streetview architecture | 98.2% |
| paintings art | 1.8% |

Captions

Microsoft

Created on 2020-04-24

| Caption | Confidence |
|---|---|
| a black and white photo of a store | 64.9% |
| an old photo of a store | 64.8% |
| a clock on the side of a building | 31.4% |

OpenAI GPT

Created by gpt-4 on 2024-12-04

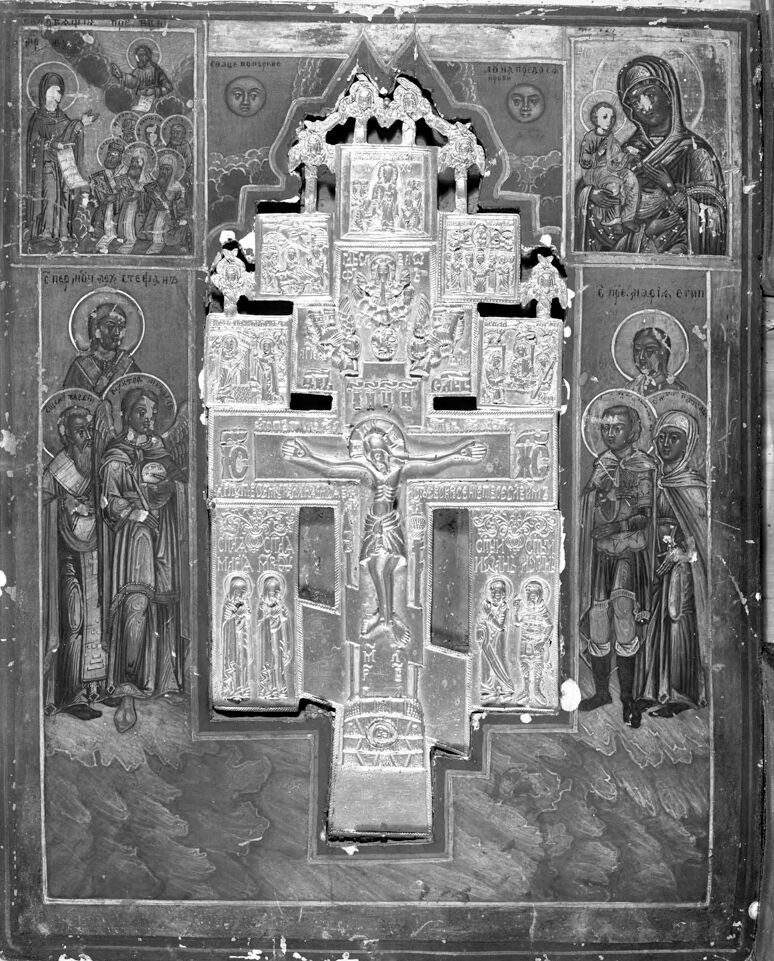

The image shows an intricately designed Christian Orthodox iconostasis panel or a portion of a larger devotional piece. At the center is a prominent embossed or engraved crucifix, with Jesus on the cross, flanked by detailed biblical scenes and figures depicted in relief. The craftsmanship is elaborate, indicating that this is likely a work of religious significance, possibly used in a church or chapel setting. Around the periphery are painted icons, each within their own compartment, featuring various saints and holy figures. Each painting follows the traditional style of Orthodox iconography, with careful attention to expression, gesture, and symbolic elements. Some of the figures carry objects or have halos, identifying their sacred status. Above the cross, there is a segment that appears damaged or broken off, suggesting this piece may be quite old or has endured some wear over time. The overall presentation suggests that this artifact is designed to inspire devotion and veneration, and it is rich in religious symbolism and artistry.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This appears to be a medieval Orthodox Christian icon featuring a central crucifix surrounded by various religious scenes. The main element is a large, ornate cross in silver or white metal relief work, with a crucifixion scene at its center. Around the cross are smaller panels depicting various biblical scenes and figures. The icon is arranged in a hierarchical composition typical of Orthodox iconography, with smaller scenes in the corners and along the sides of the central cross. The background appears dark, and the overall piece shows signs of age. The metalwork cross (likely silver) stands out against the darker painted surface. The craftsmanship appears to be quite detailed, with intricate engravings and religious imagery throughout. The style and composition suggest this is likely from the Byzantine or Russian Orthodox tradition, possibly dating from the medieval period. On either side of the central crucifix are panels showing various religious figures arranged in groups, painted in the traditional Orthodox icon style. The overall piece combines both painted elements and metalwork in a style that was common in high-status religious artifacts of the period.

Created by claude-3-haiku-20240307 on 2024-11-27

This image appears to be a religious icon or altarpiece depicting various religious figures and scenes. The central focus is a large cross with a crucifix, surrounded by intricate iconography and imagery. There are a number of smaller depictions of saints, religious figures, and biblical scenes arranged around the central cross. The overall style and composition suggest this is a traditional Eastern Orthodox or Eastern Christian religious artwork. Without being able to identify any individuals, the image conveys a sense of deep religious symbolism and devotion.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-29

The image is a black-and-white photograph of an old, damaged religious icon. The icon is a large, rectangular piece of art with a cross in the center. The cross is made up of several smaller images, including a depiction of Jesus Christ on the cross. Surrounding the cross are several other images, including saints and biblical figures. The icon appears to be quite old, with visible signs of wear and tear, such as cracks and fading. The background of the icon is a dark color, which helps to highlight the details of the images. Overall, the icon is a beautiful and meaningful piece of religious art that has been preserved for many years.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-22

The image is a black-and-white photograph of an old, worn-out icon. The icon features a large cross in the center, surrounded by various images and figures. The cross is adorned with intricate carvings and has a figure of Jesus Christ at its center. The surrounding images depict scenes from the Bible, including the Virgin Mary, saints, and other biblical figures. The icon appears to be made of wood or metal and has a dark patina, indicating age and wear. The edges are rough and worn, with visible cracks and scratches. The overall appearance suggests that the icon has been handled and displayed extensively over the years, with signs of aging and damage. The background of the image is not visible, as the icon takes up the entire frame. However, the surface on which the icon is placed appears to be a wooden table or shelf, with a light-colored finish. Overall, the image presents a striking and poignant representation of religious art and symbolism, with the worn and weathered icon serving as a testament to its enduring significance and cultural importance.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-05

The image depicts a traditional Christian icon with a central cross, surrounded by various religious figures and scenes. The cross is intricately designed, with a silver or gold-like finish and detailed carvings. At the top of the cross is a depiction of the sun and moon, symbolizing the celestial and divine aspects of the Christian faith. The cross is flanked by two figures, one on each side, dressed in ancient attire, possibly representing saints or biblical figures. The background of the icon is a dark, textured surface, possibly wood or stone, giving it an aged and venerable appearance. The icon is framed in a rectangular shape, with the central cross occupying the majority of the space, while the surrounding figures and scenes are smaller and more detailed. The overall composition is symmetrical, with the cross at the center and the figures and scenes arranged in a balanced and harmonious manner.

Created by amazon.nova-pro-v1:0 on 2025-01-05

The image is a black and white photograph of an old, damaged painting or icon. The central figure is a cross with Jesus Christ on it, surrounded by other religious figures. The cross is made of silver and is intricately designed. The figures are dressed in traditional clothing, and some are holding objects. The painting is framed by a wooden border, and the background is a plain, unadorned surface.