Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 30-46 |
| Gender | Male, 80.6% |
| Calm | 87.7% |
| Disgusted | 1.8% |
| Sad | 4.5% |
| Surprised | 0.9% |
| Confused | 2.2% |
| Angry | 1.5% |
| Fear | 0.6% |
| Happy | 0.9% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Helmet | 99.6% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 97.6% |
| streetview architecture | 2.4% |

Captions

Microsoft

Created on 2020-04-24

| Caption | Confidence |
|---|---|
| a black and white photo of a man | 73.4% |
| an old photo of a man | 73.3% |
| a man holding a book | 37.2% |

OpenAI GPT

Created by gpt-4 on 2024-12-04

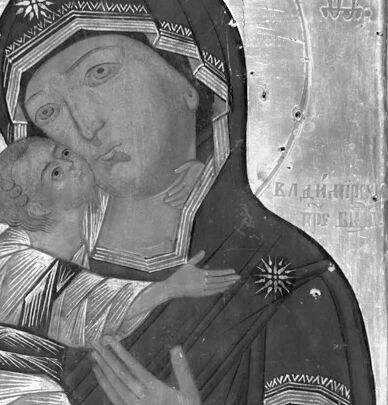

This is a black and white photograph of an iconographic painting of the kind characteristically seen in Eastern Orthodox Christian art. The subject of the painting is depicted in a traditional, stylized manner with specific attention to drapery and symbolic elements. The figure is wearing elaborately patterned garments that suggest a religious or holy status, with a visible hand gesture that is often used in iconography to convey blessing or teaching. The halo around the head signifies the subject's sanctity. Silver and gold elements within the painting indicate it might be adorned with precious metals, which is a common technique in iconography to signify the divine light or presence. The painting is framed in what looks to be a simple wooden frame, and the Cyrillic text in the top corners of the icon suggests Slavic origin or influence.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This is a black and white photograph of a religious icon, specifically an Orthodox Christian icon depicting the Virgin Mary and infant Jesus Christ. It appears to be painted in the traditional Byzantine style, with a gold or light-colored background and the characteristic haloes around their heads. The icon shows the Virgin wearing an ornately decorated veil with geometric patterns, and she is holding the infant who is dressed in light-colored robes. The composition demonstrates the tender embrace between mother and child, which is known in Orthodox iconography as the "Virgin of Tenderness" or "Eleousa" type. The image shows some signs of age, with visible cracks and wear on the surface. The icon is framed within a simple border, and the overall style and execution suggest it's likely from a medieval or early modern period.

Created by claude-3-haiku-20240307 on 2024-11-27

The image depicts a religious icon or painting of the Virgin Mary holding the baby Jesus. The Virgin Mary is shown in a frontal pose, with a pensive, caring expression on her face as she holds the infant Jesus close to her. The painting features ornate decorative elements, such as the intricate border around the central figures. The overall image has a somber, reverent quality characteristic of religious art.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-29

The image is a black-and-white photograph of an icon of the Virgin Mary holding the infant Jesus. The icon is framed in a dark wood frame with a lighter wood border around the icon itself. The Virgin Mary is depicted wearing a dark robe with a lighter-colored patterned head covering and a dark shawl draped over her shoulders. She is holding the infant Jesus in her left arm, and her right hand is raised in a gesture of blessing or protection. The infant Jesus is wrapped in a white cloth and appears to be looking up at his mother. The background of the icon is a light color, possibly gold or silver, and features a circular halo around the Virgin Mary's head. There are some faint inscriptions on the icon, but they are not legible in the photograph. Overall, the icon appears to be a traditional representation of the Virgin Mary and the infant Jesus, likely created in an Eastern Orthodox or Byzantine style.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-24

The image is a black-and-white photograph of an old, worn icon of the Virgin Mary holding the infant Jesus. The icon is in a rectangular wooden frame with a dark border around it. The Virgin Mary is depicted wearing a dark robe with a patterned hood and a starburst design on her head. She is holding the infant Jesus in her arms, who is wrapped in a white cloth. The background of the icon is a light-colored circle with a patterned border, and there are some words written in a foreign language on the sides of the icon. The overall atmosphere of the image suggests that it is a religious artifact, possibly from Eastern Europe or Russia.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-05

The image is a black-and-white photograph of an icon. The icon is framed by a silver border and depicts a woman holding a child. The woman is dressed in a long robe and is wearing a headdress. The child is dressed in a robe and is being held by the woman in her arms. The icon is placed on a wooden surface, and the background is plain.

Created by amazon.nova-lite-v1:0 on 2025-01-05

The image is a black-and-white photograph of an icon, possibly an icon of the Virgin Mary holding Jesus. The icon is framed in a rectangular shape with a silver border. The icon is depicted in a traditional style, with the Virgin Mary holding Jesus in her arms. The icon is adorned with intricate designs and patterns, and the background is a dark, possibly black, color. The image is captured from a low angle, giving it a sense of depth and perspective.