Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 21-33 |
| Gender | Female, 96.4% |
| Surprised | 0.8% |
| Sad | 92.6% |
| Happy | 0.1% |
| Disgusted | 0.1% |
| Angry | 0.4% |
| Calm | 4.1% |
| Fear | 1.2% |
| Confused | 0.8% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 91.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 95.9% |
| streetview architecture | 2.4% |
| interior objects | 1.3% |

Captions

Microsoft

Created on 2020-04-24

| Caption | Confidence |
| --- | --- |
| a vintage photo of a group of people posing for the camera | 75.7% |
| a vintage photo of a group of people posing for a picture | 75.6% |
| a vintage photo of a group of people sitting posing for the camera | 68% |

OpenAI GPT

Created by gpt-4 on 2024-12-04

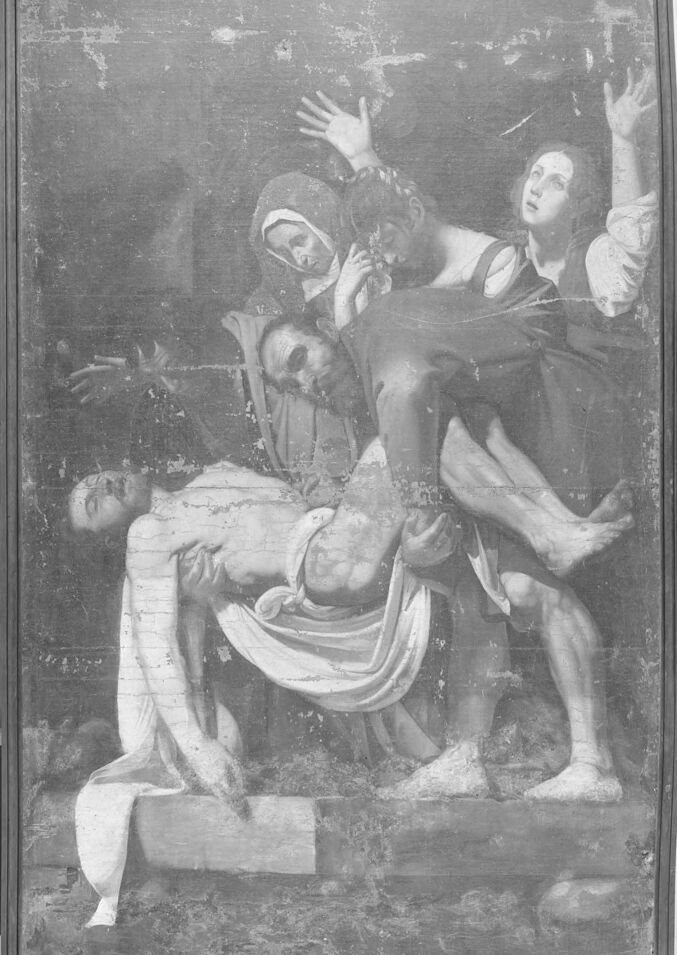

The image shows a monochromatic photograph of a painting with multiple figures in a scene that appears to be of a religious or historical nature. The central focus is on a figure who is being supported by another as they lie back with their gaze directed upwards. The setting of this painted scene might depict a moment of sorrow or suffering, as suggested by the body language and gestures of the figures involved. The painting is framed, and it shows some signs of age and wear. The photograph captures the texture of the painting's surface, suggesting that it is an aged artifact, possibly on display or in storage within a museum or similar institution.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This appears to be a historical religious painting, likely depicting the Entombment of Christ or a similar biblical scene. The black and white image shows a classical composition with several figures in dramatic poses. The central figure appears lifeless and is being carried or lowered, draped in flowing robes. The other figures are shown in poses of grief and lamentation, with raised hands and emotional expressions typical of religious art from the Renaissance or Baroque periods. The painting has some visible wear and aging, with areas showing deterioration or damage. It appears to be photographed in what might be a museum or conservation setting, given the institutional-looking background visible at the edges of the frame.

Created by claude-3-haiku-20240307 on 2024-11-27

The image appears to be a religious or allegorical painting depicting a solemn and dramatic scene. The painting shows several figures gathered around a central figure, who appears to be the body of a deceased individual lying on a stone slab or bier. The figures surrounding the central figure seem to be expressing grief or mourning, with some reaching out towards the body. The overall scene has a somber and contemplative quality.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-29

The image is a black-and-white photograph of a painting, which appears to be a reproduction of "The Entombment of Christ" by Michelangelo Merisi da Caravaggio. The painting depicts the moment when Christ's body is being lowered into his tomb after his crucifixion.

In the foreground, a group of figures are gathered around the body of Christ, who is draped in a white cloth and being supported by two men. One of the men, likely Joseph of Arimathea, is holding Christ's legs, while the other, possibly Nicodemus, is supporting his upper body. Behind them, a woman, likely Mary Magdalene, is shown with her arms raised in a gesture of grief and despair. Another figure, possibly Mary, the mother of Jesus, is also present, although her face is not clearly visible.

The background of the painting is dark and somber, with a sense of depth and volume created by the use of chiaroscuro, a technique that uses strong contrasts between light and dark to create a sense of three-dimensionality. The overall mood of the painting is one of sadness and mourning, as the figures gather to pay their respects to the deceased Christ.

The painting itself appears to be in a state of disrepair, with visible signs of aging and wear. The surface is cracked and faded, and there are areas where the paint has flaked off or been damaged. Despite this, the image remains powerful and evocative, conveying the emotional intensity of the scene it depicts.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-24

The image depicts a black-and-white photograph of an old painting, likely taken in a museum or gallery setting. The painting is framed and appears to be quite large, possibly a masterpiece from the Renaissance period.

**Painting Details:**

* The painting features a dramatic scene with several figures, including a man being carried by two others.
* The man is dressed in a white robe, while the two men carrying him wear dark clothing.
* A woman stands behind them, her arms raised in a gesture of despair or prayer.
* The background of the painting is dark, with subtle shading and texture that adds depth to the scene.

**Photography Details:**

* The photograph was taken in a well-lit room, with a neutral-colored wall visible behind the painting.
* The frame surrounding the painting is dark wood, with a simple design that does not distract from the artwork itself.
* The overall atmosphere of the image is one of reverence and respect for the painting, suggesting that it is a valuable and important piece of art.

**Context:**

* The image was likely taken by a museum or gallery staff member for documentation or archival purposes.
* The painting may be part of a larger collection or exhibition, and the photograph could be used to promote the event or provide information about the artwork.
* The image could also be used for educational or research purposes, allowing art historians and enthusiasts to study the painting in detail.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-05

The image shows a framed painting with a black-and-white filter. The painting depicts a scene of a man being carried by two women, one of whom is holding a spear. The man is lying on a stone slab, and the two women are holding him by his arms and legs. The painting is mounted on a wall, and it seems to be in a storage room. The painting has a black frame with a silver border.

Created by amazon.nova-pro-v1:0 on 2025-01-05

The image is a black-and-white painting of three people, two of whom are carrying a dead person. The dead person is lying on his back, and the two people are carrying him by his arms and legs. The person on the left is wearing a hood, and the person on the right is wearing a white shirt. The painting is mounted on a wooden frame.