Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 22-34 |
| Gender | Male, 92.1% |
| Disgusted | 0.4% |
| Confused | 1% |
| Fear | 1% |
| Calm | 21% |
| Happy | 0.4% |
| Surprised | 0.4% |
| Sad | 74.3% |
| Angry | 1.5% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Painting | 93.5% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 65.9% |
| people portraits | 33% |

Captions

Microsoft

Created on 2020-04-24

| Caption | Confidence |
|---|---|
| a vintage photo of a man | 91.3% |
| a vintage photo of a man holding a book | 62.8% |
| an old photo of a man | 62.7% |

OpenAI GPT

Created by gpt-4 on 2024-12-04

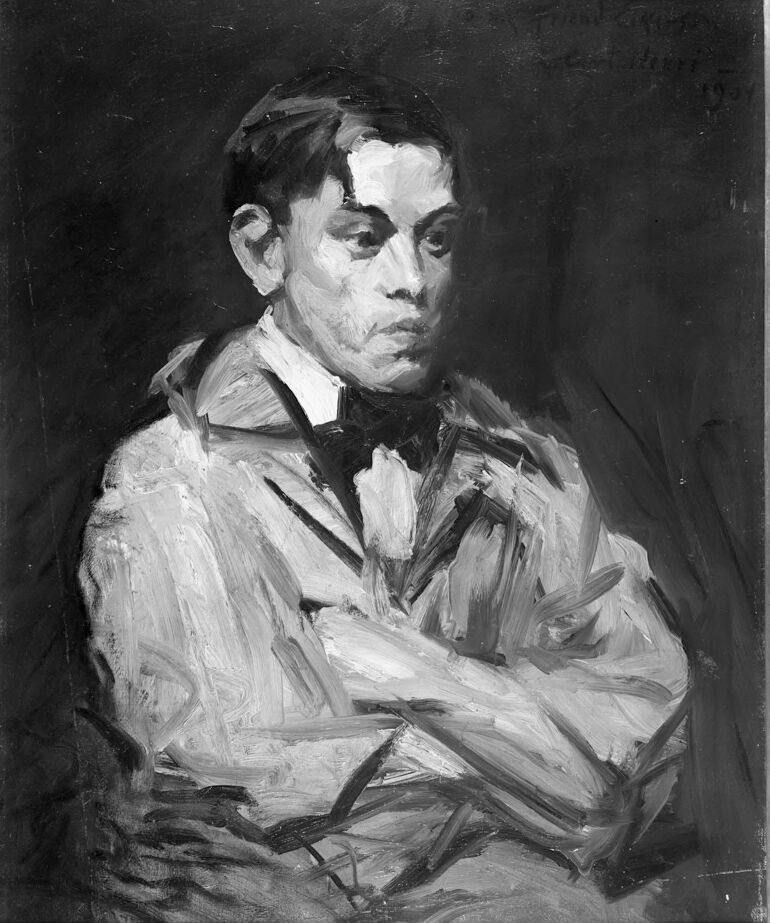

The image displays a monochromatic painting that portrays a figure in a seated position. The figure is shown from the waist up and appears to be dressed in what might be formal attire, suggested by the visible collar, tie, and jacket. The strokes of the painting are expressive and varied, contributing to a sense of texture throughout. Judging by the clothing and the posture, the painting seems to capture a moment of quiet repose or contemplation. The background of the painting is dark, contrasting with the lighter tones of the figure, which helps to draw attention to the subject. The top right corner of the painting contains some faded text, potentially a signature or a date, which is partially obscured and difficult to decipher.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This is a black and white painted portrait, appearing to be done in an expressive, loose brushstroke style. The subject is wearing a light-colored formal outfit with what appears to be a bow tie or cravat. The painting has a dark background, creating a strong contrast with the lighter tones used for the clothing and figure. The brushwork is quite visible and energetic, giving the painting a dynamic quality typical of early modern portraiture. The composition is positioned at a slight angle, with the figure's arms appearing to be crossed. The painting style suggests it may be from the early to mid-20th century, with characteristics of expressionist portraiture.

Created by claude-3-haiku-20240307 on 2024-11-27

The image depicts a portrait of a male figure, shown in a somber and contemplative pose. The figure is rendered in a loose, expressionistic style, with bold brushstrokes and a predominantly dark, monochromatic palette. The subject's face is the focal point, conveying a sense of introspection and melancholy. The overall composition and style suggest a work of modern or early 20th century art.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-30

This image is a black and white painting of a man in a white shirt and black bow tie, with his arms crossed. The painting is done in an impressionist style, with visible brushstrokes and a focus on capturing the subject's likeness rather than precise details. The man is depicted from the chest up, facing forward but turned slightly to his right. He has short dark hair and a serious expression on his face. His arms are crossed over his chest, with his right hand resting on his left forearm. He is wearing a white shirt with a high collar and a black bow tie. The background of the painting is dark, which helps to emphasize the subject's features and create a sense of depth. The overall effect of the painting is one of simplicity and elegance, with a focus on capturing the essence of the subject rather than creating a highly detailed or realistic portrait. The painting is framed in a white frame, which adds a touch of sophistication and helps to draw attention to the artwork. Overall, this image is a beautiful example of impressionist art, with a focus on capturing the subject's likeness and creating a sense of atmosphere and mood.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-25

The image is a black-and-white painting of a young man with dark hair, dressed in a white shirt and a dark bow tie. The painting appears to be a self-portrait, as the artist's signature is visible in the top-right corner. The subject is depicted with his arms crossed, facing the viewer directly. The background of the painting is a solid black, which serves to accentuate the subject's features. The overall style of the painting is reminiscent of early 20th-century portraiture, with bold brushstrokes and a focus on capturing the subject's likeness. The artist's use of chiaroscuro, a technique that uses strong contrasts between light and dark to create a sense of volume in modeling three-dimensional objects and figures, adds depth and dimensionality to the painting. The subject's expression is serious and introspective, inviting the viewer to ponder his thoughts and emotions. Overall, the painting is a compelling and thought-provoking work of art that showcases the artist's skill and attention to detail.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-05

The image depicts a black-and-white portrait of a man. He is seated with his arms crossed in front of him. The man is wearing a white coat with a bow tie. His facial expression is serious, and he is looking directly at the camera. The background is dark, and the lighting is focused on the man, creating a contrast between him and the background. The portrait is framed in a silver frame.

Created by amazon.nova-pro-v1:0 on 2025-01-05

The painting depicts a man sitting with his arms crossed. The man is wearing a black bow tie and a white coat. He has a serious expression on his face. The painting is in black and white, and the man's face is painted with a combination of white and black colors. The background of the painting is dark, and it seems to be a wall.