Microsoft

Created on 2020-04-24

Azure OpenAI

Created on 2024-12-04

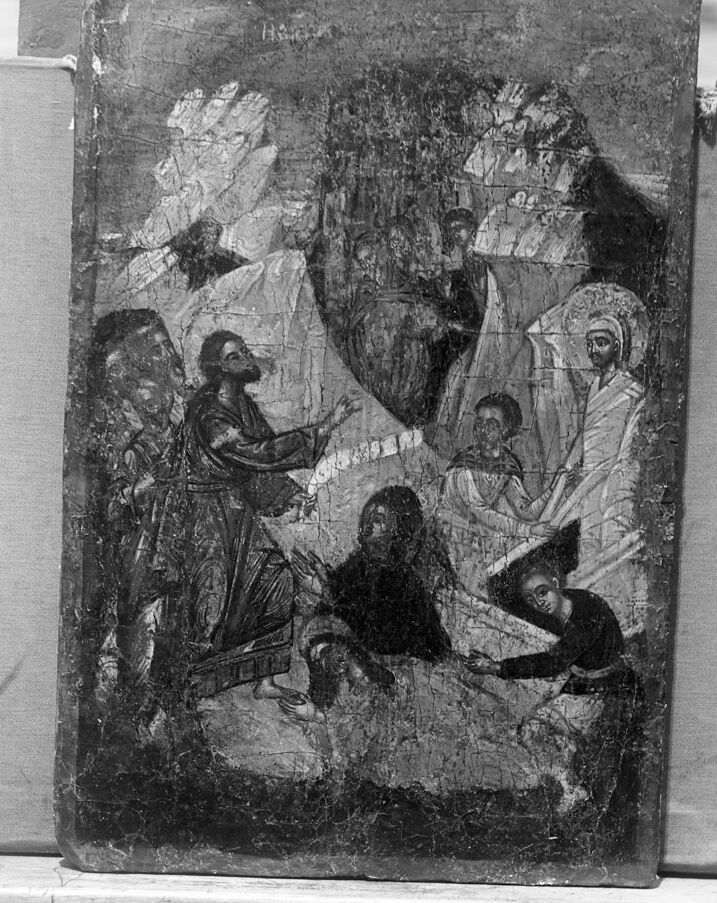

This is a black-and-white photograph of an aged and cracked religious iconographic painting. The image shows several figures who appear to be engaged in a narrative scene, which is typical of religious artworks designed to tell a story from scripture or tradition. Given the style, it seems to belong to the tradition of Eastern Orthodox or Byzantine iconography.

The painting is detailed, and even through the cracks and apparent wear, one can see that the figures wear traditional garments, which suggest a historical or biblical context. There are draped fabrics, and some of the figures appear to be holding objects, possibly indicating their roles or the story the painting tells. The icon is bordered by a simple linear edge, indicating that the central panel is the primary focus. The surface appears textured, with craquelure throughout that is indicative of its age. The photo also shows part of the edge of the mounting or frame, suggesting that the icon is on display or stored in a setting that preserves its condition.

Anthropic Claude

Created on 2024-11-27

The image appears to be a religious painting or icon depicting a sacred scene. It shows several figures in traditional religious garb, possibly biblical or historical characters, engaged in some kind of interaction or event. The image has a textured, aged appearance, suggesting it may be an antique or historical work of art.

Meta Llama

Created on 2024-11-22

The image is a black and white photograph of an old, worn painting depicting a scene with multiple figures. The painting appears to be on a wooden board or canvas, with visible cracks and wear throughout. The scene shows a group of people gathered around a central figure, possibly a religious leader or figure of authority. Some of the figures are dressed in robes or tunics, while others wear head coverings or other distinctive attire. The background of the painting is dark and indistinct, but it appears to feature some sort of architectural element, such as a building or a column.

The overall atmosphere of the painting is one of reverence and solemnity, suggesting that it may be a religious or devotional work. The use of muted colors and the focus on the central figure create a sense of intimacy and importance, drawing the viewer's attention to the main subject of the painting.