Machine Generated Data

Tags

Color Analysis

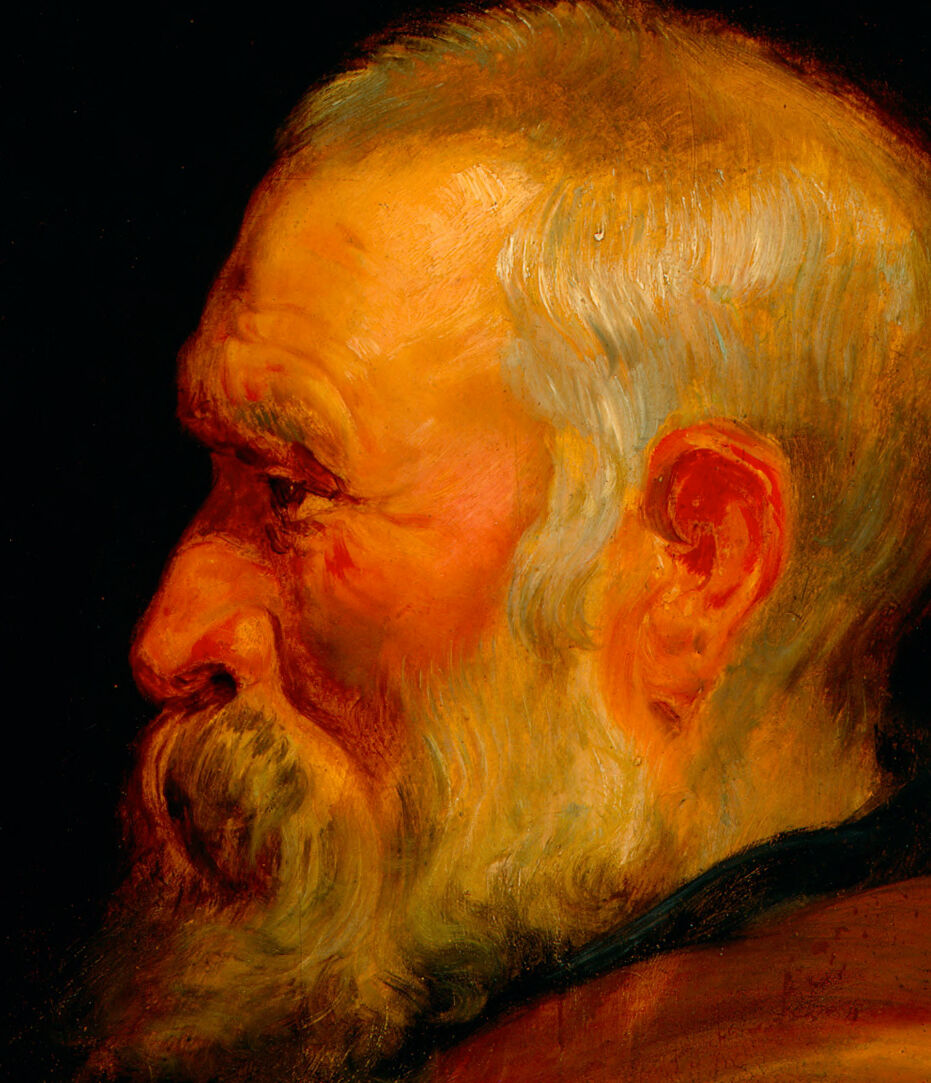

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-66 |
| Gender | Male, 97.7% |
| Disgusted | 7% |
| Sad | 20.9% |
| Happy | 1% |
| Calm | 11.6% |
| Confused | 3.7% |
| Angry | 41.8% |
| Surprised | 1.4% |
| Fear | 12.5% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 88.2% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 29.4% |
| food drinks | 27.5% |
| paintings art | 19.3% |
| events parties | 17.1% |
| macro flowers | 5.8% |

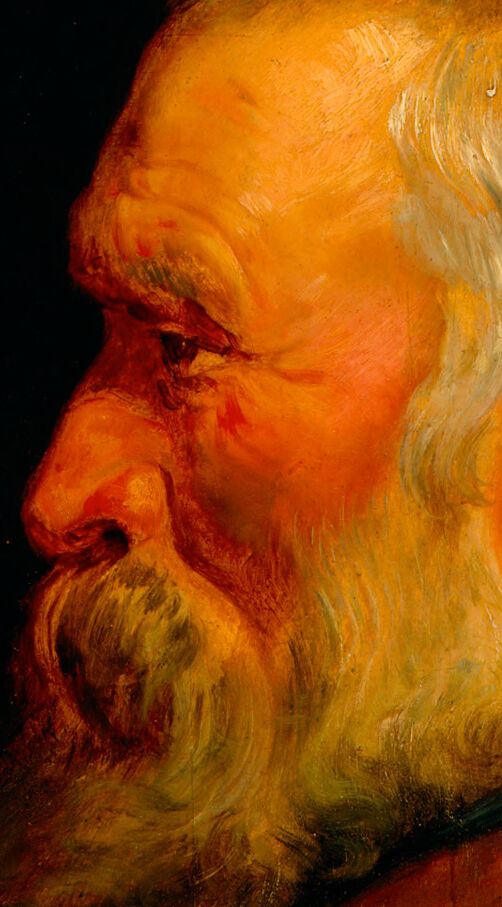

Captions

Microsoft

Created on 2020-04-24

| Caption | Confidence |
| --- | --- |
| a man looking at the camera | 46.1% |
| the face of a man | 46% |
| a close up of a man | 45.9% |

OpenAI GPT

Created by gpt-4 on 2024-02-09

This image depicts a person with a bared, muscular back, draped in a flowing, orange-toned fabric that catches light and shadow dramatically. The subject is holding what appears to be a flute-like musical instrument close to their body with their right hand, fingers gripping the instrument's rods visibly. The artwork is rich with earthy tones and a stark contrast that brings out the curves and edges of the subject's musculature and the flowing fabric. The background is predominantly dark, creating a sharp contrast with the illuminated portions of the subject.

Anthropic Claude

Created by claude-3-haiku-48k-20240307 on 2024-03-30

The image appears to depict the profile of an elderly man with a long, white beard and intense, penetrating eyes. His face is illuminated by warm, reddish-orange tones, casting a dramatic and introspective mood. The man is wearing a robe or cloak that drapes over his shoulders, adding to the sense of age and wisdom. The overall composition and use of chiaroscuro lighting create a striking, almost mystical quality to the portrait.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-30

This image depicts a painting of an older man with a white beard and hair, wearing a brown robe. He is holding a black object in his right hand, which appears to be a gun or a tool. The background of the painting is dark, with the man's face and body illuminated by a warm light. The painting style suggests that it may be a work by a Baroque artist, possibly from the 17th century. The use of chiaroscuro, a technique that uses strong contrasts between light and dark to create a sense of volume and depth, is characteristic of Baroque art. The painting's subject matter and style are reminiscent of works by artists such as Rembrandt or Caravaggio. Overall, the image presents a striking and evocative portrait of an older man, with a sense of drama and intensity conveyed through the use of light and shadow.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-25

The image is a painting of an elderly man with a beard, holding a gun. The man has a white beard and hair, and is wearing a yellow robe. He is facing to the left, but his head is turned slightly towards the viewer. His right hand holds the gun, which is pointed downwards. The background of the painting is black. The painting is in the style of Rembrandt, a Dutch painter known for his use of light and shadow. The use of chiaroscuro, a technique that uses strong contrasts between light and dark to create a sense of volume in modeling three-dimensional objects and figures, is evident in the painting. The light source appears to be coming from the left side of the painting, casting a warm glow on the man's face and robe. The dark background helps to emphasize the light and shadow on the subject, creating a dramatic effect. Overall, the painting is a powerful portrait that captures the essence of the elderly man. The use of light and shadow creates a sense of depth and dimensionality, drawing the viewer's eye to the subject. The painting is a testament to Rembrandt's skill as a painter and his ability to capture the human form in a compelling and expressive way.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-05

This is an oil painting of an elderly man with a white beard and mustache. He is wearing a brown robe with a black collar. The man is looking to the left, and his right hand is holding a gun. The background is dark, and the man's face is illuminated by a light source, probably from the left. The painting has a blurry effect, which adds to the mysterious and dramatic atmosphere of the image.

Created by amazon.nova-pro-v1:0 on 2025-01-05

The painting depicts an old man with a white beard and white hair, wearing a brown robe. He is holding a trumpet in his left hand and looking to his left. The man's face is illuminated by a light source, possibly a lamp or the sun, casting a warm glow on his face. The background is dark, possibly a night sky or a cave. The painting is an oil painting on canvas.