Machine Generated Data

Tags

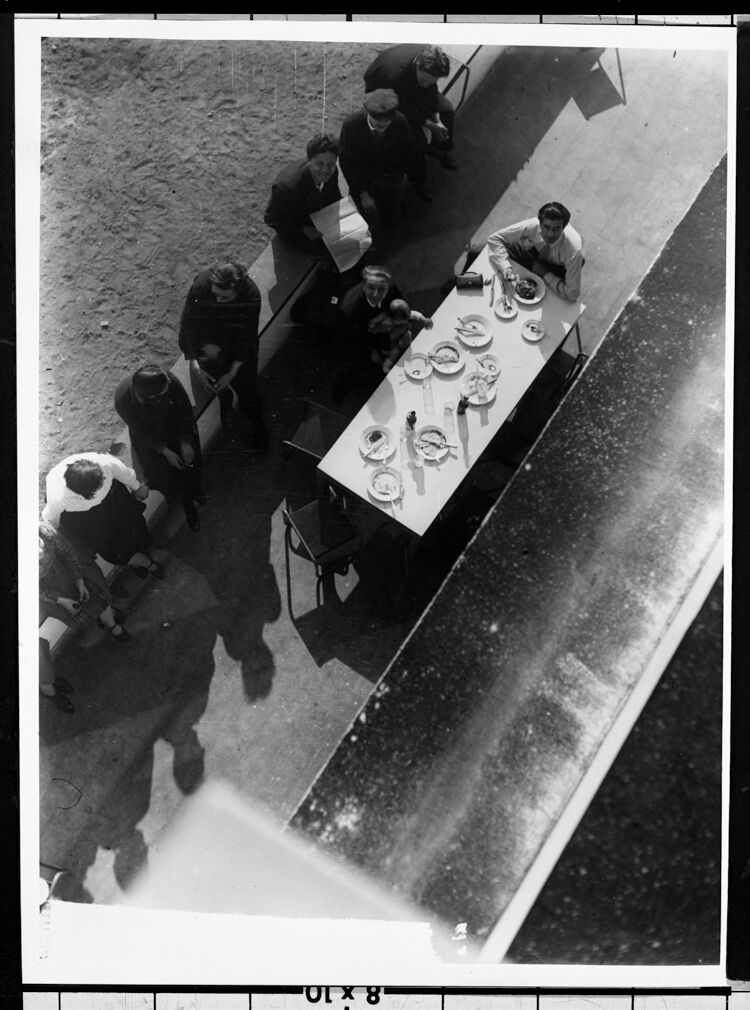

Amazon

created on 2022-06-18

| Tag | Confidence (%) |
|---|---|
| Restaurant | 99.6 |
| Person | 97.3 |
| Human | 97.3 |
| Person | 96.2 |
| Person | 95.3 |
| Furniture | 93.3 |
| Cafe | 93.3 |
| Cafeteria | 92.4 |
| Tabletop | 89.8 |
| Person | 89.3 |
| Table | 87.4 |
| Dining Table | 87.1 |
| Person | 84.1 |
| Person | 77.5 |
| Dog | 76.5 |
| Mammal | 76.5 |
| Animal | 76.5 |
| Canine | 76.5 |
| Pet | 76.5 |
| Food Court | 75.8 |
| Food | 75.8 |
| Person | 66.5 |
| Poster | 58.7 |
| Advertisement | 58.7 |
| Text | 57.9 |
| Person | 48.3 |

Clarifai

created on 2023-10-29

| Tag | Confidence (%) |
|---|---|
| people | 99.7 |
| skateboard | 98.5 |
| street | 98.2 |
| one | 96.9 |
| adult | 96.8 |
| woman | 94.5 |
| art | 93.7 |
| group together | 93.6 |
| man | 93.4 |
| skate | 93.2 |
| group | 92.2 |
| wear | 89.7 |
| monochrome | 89.2 |
| illustration | 85.8 |
| graffiti | 85.2 |
| recreation | 84.5 |
| vehicle | 82.7 |
| two | 82.6 |
| child | 81.9 |
| many | 81.1 |

Imagga

created on 2022-06-18

| Tag | Confidence (%) |
|---|---|
| black | 24.7 |
| device | 24.3 |
| support | 18.5 |
| windowsill | 17.9 |
| skateboard | 16.6 |
| sill | 14.3 |
| board | 14.1 |
| man | 12.8 |
| wheeled vehicle | 12.8 |
| old | 12.5 |
| silhouette | 12.4 |
| light | 11.4 |
| people | 11.1 |
| vehicle | 11.1 |
| structural member | 10.9 |
| window | 10.3 |
| vintage | 10.1 |
| music | 9.9 |
| art | 9.7 |
| equipment | 9.7 |
| metal | 9.6 |
| urban | 9.6 |
| headstock | 9.5 |
| close | 9.1 |
| business | 9.1 |
| style | 8.9 |
| home | 8.8 |
| lighter | 8.6 |
| male | 8.5 |
| adult | 8.4 |
| city | 8.3 |
| telephone | 8.3 |
| alone | 8.2 |
| refrigerator | 8.1 |
| transportation | 8.1 |
| office | 8 |
| fastener | 7.9 |
| travel | 7.7 |
| wall | 7.7 |
| studio | 7.6 |
| portrait | 7.1 |
| modern | 7 |

Google

created on 2022-06-18

| Tag | Confidence (%) |
|---|---|
| Chair | 86 |
| Table | 82.6 |
| Black-and-white | 82.2 |
| Rectangle | 78 |
| Desk | 77.2 |
| Font | 77.1 |
| Tints and shades | 76 |
| Monochrome photography | 69.5 |
| Monochrome | 69.2 |
| Room | 65.6 |
| Sitting | 59.7 |
| Photographic paper | 59.5 |
| History | 56.2 |
| Art | 56.1 |
| Recreation | 52.9 |
| Motor vehicle | 51.4 |

Microsoft

created on 2022-06-18

| Tag | Confidence (%) |
|---|---|
| text | 99.4 |
| black and white | 98.5 |
| handwriting | 97.4 |
| person | 93.4 |
| clothing | 93.3 |
| street | 87.2 |
| monochrome | 82.6 |
| man | 74.6 |
| footwear | 71.1 |
| different | 41.2 |


Face analysis

Amazon

Imagga

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 28-38 |
| Gender | Male, 99.3% |
| Sad | 99.8% |
| Confused | 18.2% |
| Fear | 8% |
| Surprised | 7.3% |
| Calm | 2.3% |
| Angry | 1.3% |
| Disgusted | 1% |
| Happy | 0.6% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 30-40 |
| Gender | Female, 96.8% |
| Disgusted | 32.6% |
| Happy | 23.5% |
| Angry | 18.1% |
| Fear | 11% |
| Confused | 9.7% |
| Surprised | 7.5% |
| Sad | 3.3% |
| Calm | 0.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-31 |
| Gender | Male, 93.1% |
| Happy | 40.4% |
| Calm | 19.4% |
| Fear | 17% |
| Surprised | 15.3% |
| Angry | 3.9% |
| Confused | 3.9% |
| Sad | 2.8% |
| Disgusted | 1.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-30 |
| Gender | Male, 97.2% |
| Happy | 28.8% |
| Calm | 20.5% |
| Angry | 14.7% |
| Surprised | 10.2% |
| Disgusted | 9.2% |
| Confused | 8.7% |
| Fear | 7.6% |
| Sad | 6.2% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |

Imagga

| Attribute | Value |
|---|---|
| Traits | no traits identified |

Feature analysis

Categories

Imagga

| Category | Score |
|---|---|
| paintings art | 90.9% |
| interior objects | 5.7% |
| streetview architecture | 1.8% |
| food drinks | 1.4% |


Text analysis

Amazon

| Detected text |
|---|
| 8 |
| 10 |
| 8 x 10 |
| x |