Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

Imagga

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 14-26 |
| Gender | Female, 50.2% |
| Happy | 45.1% |
| Disgusted | 45% |
| Angry | 45% |
| Calm | 45.9% |
| Sad | 53.9% |
| Fear | 45% |
| Confused | 45% |
| Surprised | 45% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 99.1% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 65.4% |
| paintings art | 33.5% |

Captions

Microsoft

Created by unknown on 2020-04-24

Clarifai

Created by general-english-image-caption-blip on 2025-04-28

| Caption | Confidence |
| --- | --- |
| a photograph of a painting of a group of naked men | -100% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

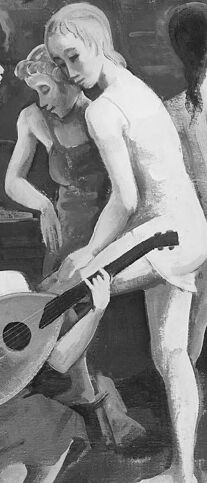

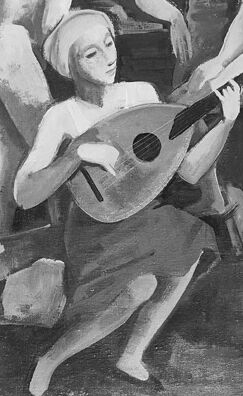

The image is a black and white painting depicting a serene outdoor scene in a natural setting with trees, rocks, and an expanse of sky. Four figures are depicted without clothes, engaged in some form of interaction. One figure is playing a guitar-like instrument while seated on a rock. The remaining figures are standing, with one holding a sheet or cloth. The overall atmosphere has a classical or pastoral feel, indicative of relaxation and possibly a communal activity.

Created by gpt-4 on 2024-12-03

The image depicts a group of four figures in a natural setting where trees, rocks, and a distant landscape can be observed. Three of the figures appear to be standing, with one holding up a cloth or garment. The individual in the foreground is seated, playing a stringed instrument that resembles a lute or guitar. There are some articles of clothing discarded on the ground, suggesting that the figures might be engaging in a leisurely, possibly musical, activity in an outdoor environment. The artwork is monochromatic, rendered in grayscale, which suggests it might be a painting or a black-and-white photograph. The style appears to be classically influenced, with an attention to form and anatomy that invokes a sense of Renaissance or neoclassical artwork.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This appears to be a classical painting depicting several figures in a natural setting. The black and white or monochromatic image shows a group of people, likely bathers, in various poses near what appears to be a rocky outcropping or cliff. One figure is playing a lute or similar stringed instrument, while others are engaged in various activities around them. The scene has a pastoral, idyllic quality typical of Renaissance or Classical art. There are trees in the background, and some discarded clothing or fabric can be seen in the foreground. The composition creates a sense of harmony between the human figures and the natural landscape surrounding them.

Created by claude-3-haiku-20240307 on 2024-11-27

The image appears to be a black and white painting depicting a pastoral outdoor scene. Several nude human figures are visible, including what appears to be a musician playing a stringed instrument, surrounded by a wooded landscape with rocky formations in the background. The painting has an ethereal, dreamlike quality to it.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-23

This image is a black-and-white painting of five nude figures in a natural setting. The figures are arranged in a semi-circle, with one figure in the center and the others surrounding them. The central figure is a woman, who is being supported by two men on either side of her. She has her arms around their necks and appears to be leaning on them for support. The man on the left is holding a lute, while the man on the right is holding a cloth or fabric.

To the left of the central group, there is another woman who is standing with her back to the viewer. She is looking over her shoulder at the central group. On the right side of the image, there is a fifth figure who is partially obscured by the tree trunk. This figure appears to be a man, but it is difficult to make out any details due to the obstruction.

The background of the image features a rocky outcropping with trees and foliage. The sky above is cloudy and gray, which adds to the overall somber mood of the painting. The use of black and white tones gives the image a sense of timelessness and classicism, while the natural setting and nude figures evoke a sense of freedom and abandon. Overall, the image suggests a scene of intimacy and connection between the figures, set against a backdrop of natural beauty.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-23

The image is a painting of four nude women in a natural setting, with one woman playing a lute. The women are positioned in various poses, with one standing, one sitting, and two leaning against each other. The woman playing the lute is seated on the ground, surrounded by rocks and trees. The background of the painting features a rocky cliffside and a tree, creating a serene and idyllic atmosphere. The overall mood of the painting is one of tranquility and intimacy, capturing a moment of connection and camaraderie among the women.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-01-04

The image is a painting with a black and white color scheme. It depicts a group of people gathered outdoors, possibly in a forest or park. They are engaged in various activities, such as playing music and dancing. The people are dressed in different outfits, and some are barefoot. The painting has a dreamlike quality, with the figures appearing to be in motion and the background being somewhat blurred.

Created by amazon.nova-lite-v1:0 on 2025-01-04

The image is a black-and-white painting depicting a group of people in a forest setting. The painting is divided into two main sections: the foreground and the background. In the foreground, there are five individuals, all of whom are naked. One person is playing a lute, while another is holding a piece of fabric. The other three individuals are engaged in various activities, such as looking at the person playing the lute or examining the fabric. In the background, there is a rocky area with a tree and a house. The painting has a somewhat whimsical and playful atmosphere, with the figures appearing to be engaged in a lighthearted activity.

Google Gemini

Created by gemini-2.0-flash on 2025-04-26

Here is a description of the image:

The black and white painting depicts a scene with several figures set against a landscape. To the left, there's a stone structure that looks like old ruins. A woman is seated on a part of these ruins, playing a lute. She has short, light-colored hair and is wearing a simple dress.

Around her, there are other female figures. Some appear to be nude, while one is wearing some kind of dress. They are clustered together, creating a sense of a group interaction. One of the figures is holding up a white cloth or sheet, possibly drying it on trees on the right.

The background features a hilly landscape and a grove of trees, suggesting a natural setting for the scene. The overall impression is somewhat classical or mythological, reminiscent of scenes of nymphs or bathers.

Created by gemini-2.0-flash-lite on 2025-04-26

Here's a description of the image:

Overall Impression: The image is a black and white painting depicting a classical, possibly mythological, scene. It features a group of nude or partially clothed figures in a landscape setting. The style seems reminiscent of classical or Renaissance art, with stylized forms and a soft, muted color palette due to the monochrome treatment.

Composition:

- Figures: There are at least five figures present. They are primarily female. One figure is playing a stringed instrument (likely a lute) and appears to be seated. Other figures are positioned around her, some in conversation, and another holding a cloth. One figure, standing near a tree, appears to be drying or covering themselves.

- Setting: The scene takes place outdoors. There's a background of rocks or a stone structure, some trees, and a suggestion of hills or a distant landscape. The foreground is a natural area, possibly a clearing.

- Light and Shadow: The lighting appears to be diffused. There are shadows and variations in tone that give depth to the figures and the setting.

Style and Technique:

- The figures have simplified anatomical forms, with a focus on curves and fluidity.

- The brushwork appears soft and blended, contributing to a dreamlike or idealized effect.

- The use of a monochrome palette adds a sense of timelessness or historical distance.

Possible Interpretation:

The scene likely represents a leisure activity in a bucolic setting, possibly a gathering of nymphs or a similar mythical scene. The instrument being played and the gestures of the figures suggest music, conversation, and perhaps a moment of repose or shared intimacy.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-04-25

The image is a grayscale painting that appears to depict a classical or mythological scene. It features five figures, all of whom are nude or partially draped in cloth. The setting seems to be outdoors, with trees and a rocky landscape in the background.

Figures:

- Figure 1 (Seated with Lute): A figure is seated on the ground, playing a lute. This figure is positioned in the foreground to the left.

- Figure 2 (Standing with Cloth): Another figure stands to the right, holding a piece of cloth. This figure appears to be in a dynamic pose, possibly dancing or moving.

- Figure 3 (Standing and Interacting): A third figure stands in the center, interacting with the fourth figure. This figure seems to be embracing or conversing with the fourth figure.

- Figure 4 (Standing and Interacting): The fourth figure is also standing and appears to be engaged in conversation or an embrace with the third figure.

- Figure 5 (Seated): A fifth figure is seated on the ground, facing the first figure. This figure seems to be listening or observing the others.

Background:

- The background includes trees and a rocky landscape, suggesting a natural, outdoor setting. The trees are tall, and the rocky formations add depth to the scene.

Style:

- The painting has a classical style, reminiscent of Renaissance or Baroque art. The figures are rendered with attention to anatomy and form, and the composition is balanced.

Mood:

- The overall mood of the painting is serene and contemplative, with the figures engaged in peaceful activities such as playing music and conversing.

This painting likely represents a scene from mythology or a pastoral theme, common in classical art.