Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-34 |
| Gender | Male, 70.7% |
| Sad | 12.3% |
| Happy | 0.2% |
| Angry | 0.8% |
| Confused | 0.2% |
| Fear | 0.3% |
| Disgusted | 0.1% |
| Calm | 86% |
| Surprised | 0.1% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 91.2% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| pets animals | 78.1% |
| paintings art | 21.5% |

Captions

Microsoft

Created on 2020-04-30

| Caption | Confidence |
| --- | --- |
| an old photo of a person | 70.2% |
| a photo of a person | 67.3% |
| a close up of a person | 63.7% |

OpenAI GPT

Created by gpt-4 on 2025-02-18

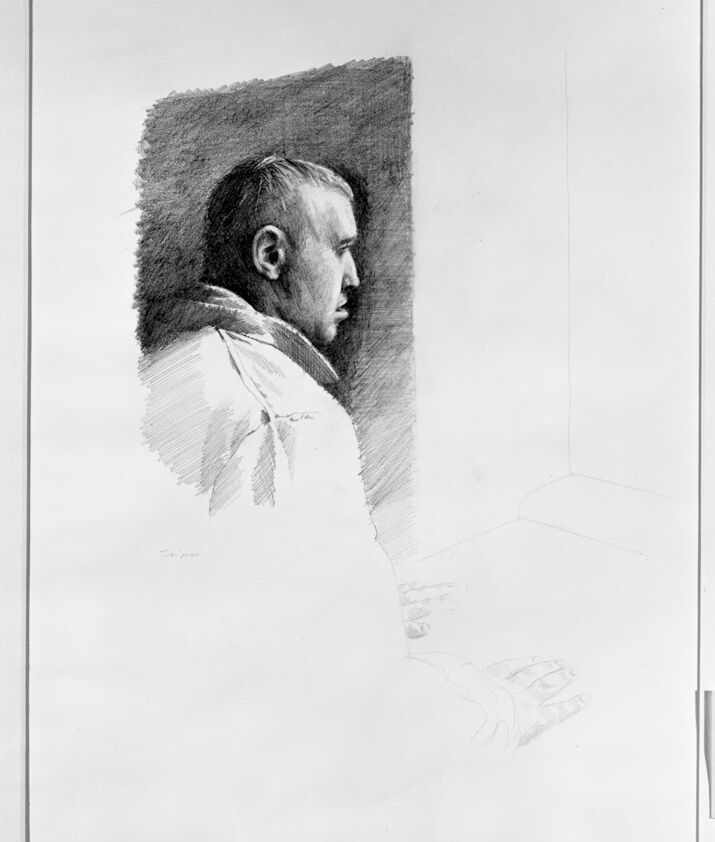

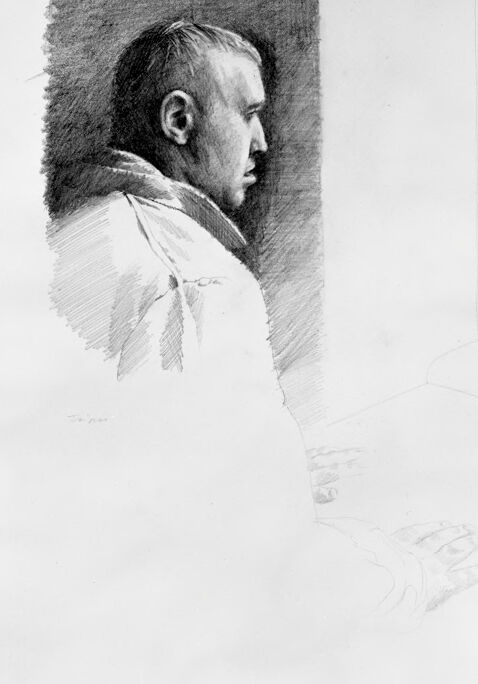

The image appears to be a black and white photograph of a drawing or sketch. In the artwork, you can see a side profile of a person up to the shoulder level, shown in a seated pose with the left hand suggestively resting on a surface, perhaps a table, though the hand appears faintly sketched in comparison to the shoulder and ear which are more defined. The person is wearing what seems to be a shirt with a collar. The background of the sketch is shaded darkly around the head, providing a contrast that highlights the outline of the person's profile. The drawing is contained within a border, likely indicating the margins of the paper it was drawn on. To the right, outside of this border, the paper is blank. There is a tag with writing in the lower-right corner of the photograph, possibly indicating catalog information or referencing the artist. The overall impression is that the drawing is incomplete or a work in progress, with finished details contrasting against more loosely defined areas.

Created by gpt-4o-2024-05-13 on 2025-02-18

The image is a black and white drawing of a person seated at a desk or flat surface, with their back partially turned towards the viewer. The individual is wearing a large, loose-fitting, white garment, possibly a shirt or a jacket. The drawing primarily focuses on the upper body and arms of the subject, with detailed shading and pencil strokes used to create texture and depth. The hands of the person rest on the desk, which seems to extend into the background. The right side of the drawing is less detailed, gradually fading out without complete lines or shading. The image also contains what appears to be a signature or marking on the lower-left side, and a marking on the lower-right border that reads "1974.297".

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-02-18

The image appears to be a black and white sketch or drawing of a person's head and shoulders, depicted in profile. The subject has distinctive facial features and a pensive or contemplative expression. The artwork captures the subject's features, including the shape of the head, the profile of the face, and the draping of the clothing around the shoulders. The overall style and execution of the drawing suggests it is a work of fine art or portraiture.

Created by claude-3-opus-20240229 on 2025-02-18

The image is a charcoal or pencil sketch portrait of a man, drawn from the side profile perspective. The man has short hair and appears to be wearing a collared shirt or jacket. The shading and line work give the drawing a detailed, realistic quality that captures the contours and shadows of the man's facial features. The sketch is centered on a plain white background.

Created by claude-3-5-sonnet-20241022 on 2025-02-18

This is a black and white pencil sketch or drawing showing a profile view of a person wearing what appears to be a light-colored coat or jacket. The drawing focuses on the upper body and head shown from the side, with the subject gazing to the left of the frame. The background is rendered in darker shading, creating contrast with the lighter tones of the clothing. The artist has used careful shading techniques to create depth and dimension, particularly in capturing the facial features and the folds of the garment. The composition is somewhat minimal, with much of the lower portion of the paper left blank or only lightly sketched, drawing attention to the detailed upper portion of the portrait.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-03-13

The image depicts a black-and-white sketch of a man, likely a self-portrait, rendered in pencil on paper. The subject is shown in profile, facing left, with short hair and wearing a collared shirt. The background is a simple, dark rectangle, while the foreground features a sketch of a hand holding a pencil, possibly the artist's own hand. The sketch is set against a white background, with a small piece of paper attached to the right side bearing handwritten text that reads "BZ-4-26." The overall effect is one of simplicity and focus on the subject, with the sketch appearing to be a study or practice piece rather than a finished work of art.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-03-13

The image depicts a pencil drawing of a man's head and shoulders, positioned in profile to the right. The man is attired in a collared shirt and appears to be seated at a table or desk, with his hands resting on the surface. The background of the drawing features a dark area behind the man's head, which gradually transitions into a lighter shade towards the bottom right corner. A small, illegible inscription is visible in the bottom-right corner of the image. The overall atmosphere of the drawing suggests that it may be a study or sketch created by an artist, possibly as part of their portfolio or for personal practice. The level of detail and realism in the drawing indicates that the artist has a high level of skill and attention to detail.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-18

The image depicts a black-and-white drawing of a man, possibly in a studio setting. The drawing is detailed and captures the man's face and upper body. He is wearing a white shirt and has a serious expression. The drawing is framed in a white border, and there is a small piece of paper with writing on it in the bottom right corner. The image has a vintage feel, and the drawing appears to be a study or a preliminary sketch.

Created by amazon.nova-pro-v1:0 on 2025-02-18

The image is a black-and-white pencil sketch of a man's profile. The sketch is framed by a white border, suggesting it might be a framed piece of art. The man's head is tilted slightly to the left, and he appears to be looking towards something or someone off-canvas. His hair is short and neatly styled, and he is wearing a collared shirt with a white collar. The background is intentionally left blank, focusing the viewer's attention solely on the subject. The sketch is detailed, capturing the texture of the man's skin and the contours of his face. There is a subtle shadow on the left side of the image, adding depth to the composition. The overall effect is a realistic and engaging portrait, highlighting the artist's skill in capturing human likeness and expression.