Machine-Generated Data

Face Analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 29-45 |
| Gender | Male, 91.1% |
| Happy | 35.7% |
| Calm | 24% |
| Angry | 10.6% |
| Disgusted | 0.9% |
| Fear | 3.1% |
| Sad | 4.2% |
| Confused | 4.3% |
| Surprised | 17.1% |

Feature Analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 97.8% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 61.4% |
| people portraits | 32.8% |
| streetview architecture | 3.9% |

Captions

Microsoft

Created by unknown on 2020-04-24

| Caption | Confidence |
|---|---|
| a group of people looking at a book | 31.3% |

Clarifai

created by general-english-image-caption-blip on 2025-04-29

| Caption | Confidence |
|---|---|
| a photograph of a painting of a man playing a flute | -100% |

OpenAI GPT

Created by gpt-4 on 2024-02-07

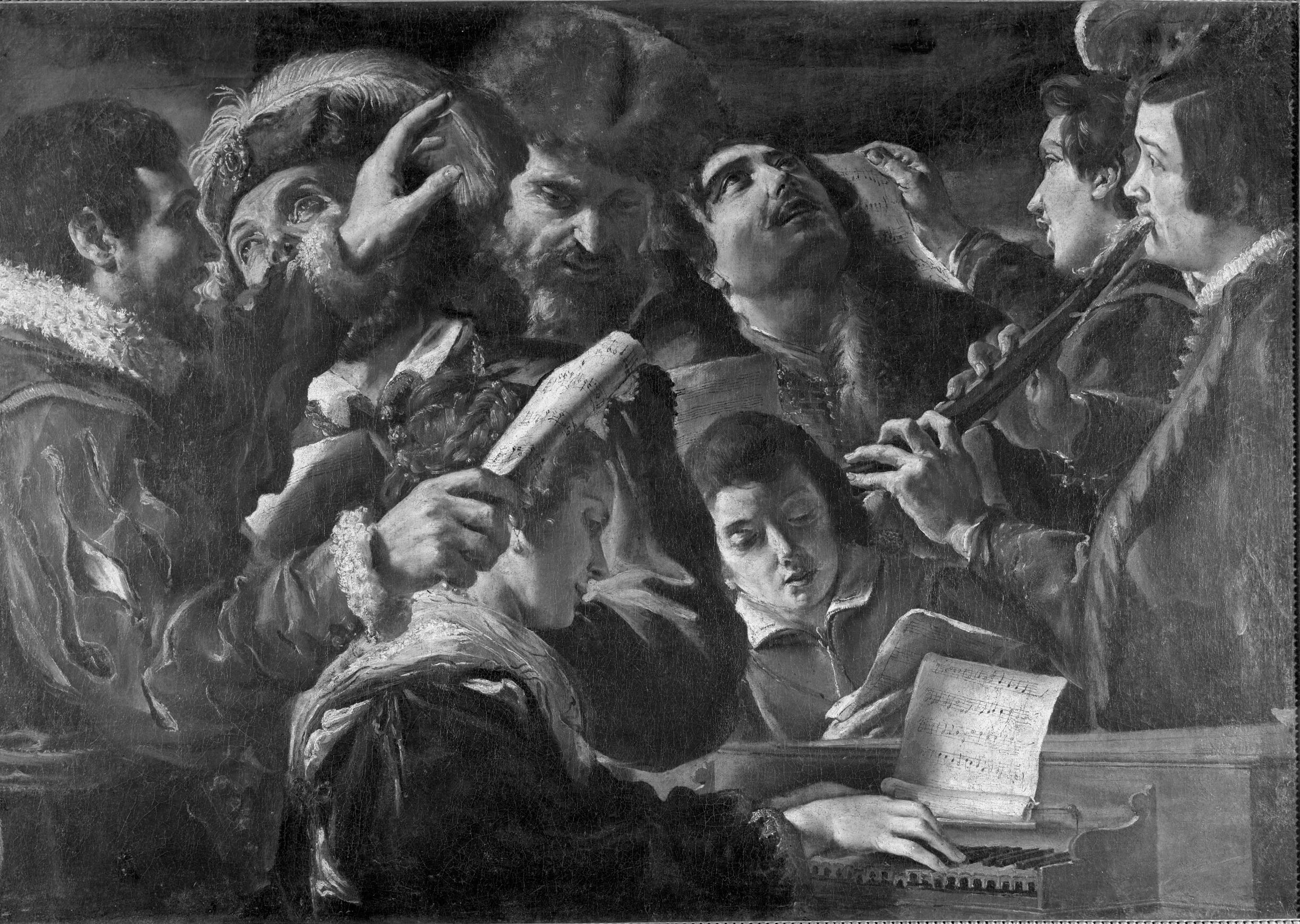

This image is a grayscale painting depicting a group of individuals engaged in a musical performance. A person in elaborate clothing, adorned with ruffles at the neck and wrist, is visible playing a stringed instrument, while another is seated at a keyboard instrument, likely a harpsichord, with sheet music before them. The scene is rendered with a focus on fine details and contrasts between light and dark, characteristic of chiaroscuro painting techniques. Additionally, there are several rectangular shapes obscuring parts of the composition. These shapes are uniformly filled with a solid color and appear to be added elements, not part of the original artwork.

Anthropic Claude

Created by claude-3-haiku-48k-20240307 on 2024-03-30

The image depicts a dramatic, black and white scene of a group of people in what appears to be a religious or academic setting. At the center is a bearded man, surrounded by others who seem to be engaged in deep discussion or debate. Some of the figures have pained or anguished expressions, while others appear focused on reading or writing. The overall atmosphere conveys a sense of intense intellectual and emotional engagement. The image has a strong chiaroscuro effect, with dramatic contrasts of light and shadow that add to the sense of drama and tension in the scene.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-29

The image is a black-and-white painting of a group of people gathered around a table, with one person holding a sheet of music and another playing a musical instrument. The painting appears to be an old masterwork, possibly from the Renaissance or Baroque period.

Key Elements:

- A group of people gathered around a table

- One person holding a sheet of music

- Another person playing a musical instrument

- The painting is in black and white

- The style suggests an old masterwork from the Renaissance or Baroque period

Possible Interpretation:

The painting may depict a scene of music-making or a gathering of musicians. The presence of a sheet of music and a musical instrument suggests that the group is engaged in a musical activity. The formal attire and serious expressions of the individuals suggest that the scene may be a formal or professional setting, such as a concert or a rehearsal.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-02

The image is a black and white painting of a group of people gathered around a table, with one person holding a piece of paper and another person holding a book. The atmosphere appears to be one of discussion or debate, with the individuals engaged in conversation and gesturing to each other. The overall mood of the scene is one of intensity and focus, as if the individuals are deeply invested in the topic at hand. The use of dark colors and dramatic lighting adds to the sense of drama and tension, creating a powerful and evocative image.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-05

The image depicts a group of musicians gathered around a harpsichord, engaged in a lively musical performance. The musicians are dressed in period clothing, with the man playing the harpsichord wearing a ruffled collar and a ruff around his neck. The other musicians, including a woman with a flute and a man with a trumpet, are also dressed in period clothing. The image is a black-and-white drawing, with a monochromatic color scheme. The musicians are depicted in a dynamic and lively manner, with their hands and instruments in motion, creating a sense of energy and movement. The drawing captures the essence of a musical performance, with the musicians working together to create a harmonious sound.

Created by amazon.nova-pro-v1:0 on 2025-01-05

The image is a black-and-white painting of a group of people. They are probably having a musical performance. The man in the middle is playing the flute. The woman beside him is playing the piano. The man on the left is holding a stick and probably singing. The man on the right is holding a piece of paper, probably a music sheet. The man in the back is looking at the man in the middle.

Google Gemini

Created by gemini-2.0-flash on 2025-04-27

General Impression:

The image appears to be a black and white reproduction of a baroque or early classical period painting. It depicts a group of people, presumably musicians, engaged in a performance. The composition is dynamic and somewhat crowded, with many figures tightly arranged around a central musical instrument.

Elements and Details:

Musical Instrument: The focus seems to be on a keyboard instrument, possibly a harpsichord or a small organ. One of the figures is seated and playing the instrument.

Musicians: There are several figures depicted, some of whom appear to be singing or playing other instruments (possibly a flute). Their expressions and postures suggest that they are deeply involved in the music.

Costumes: The figures are dressed in attire that is characteristic of the era in which the painting was likely created. They have elaborate collars, hats, and hairstyles, which are typical of the fashion of that period.

Light and Shadow: The artist has used light and shadow to create depth and to emphasize certain figures and aspects of the scene. The play of light and shadow adds to the dramatic effect of the composition.

Overall:

The painting conveys a sense of energy and passion, reflecting the lively atmosphere of a musical performance. It provides a glimpse into the musical culture and artistic conventions of the time period.

Created by gemini-2.0-flash-lite on 2025-04-27

This black and white image depicts a group of men, likely from the Renaissance or Baroque period, engaged in a musical performance. They are clustered closely together, creating a dynamic composition filled with emotion and action.

Several men appear to be singing, their faces tilted upwards in expressions of passion and concentration. One man is playing a flute, while another is at the keyboard of a small instrument that looks like an early form of a harpsichord or organ. The artist masterfully captures the expressions of the figures: some look at the sheet music, others gaze toward the sky, and hands are shown in the act of playing.

The painting is characterized by its dramatic use of light and shadow, creating depth and highlighting the figures. The clothing and accessories suggest an upper-class setting. The overall feeling is one of lively camaraderie and intense focus on the music.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-04-26

This image is a black-and-white reproduction of a painting that depicts a group of musicians engaged in a musical performance. The scene appears to be from the Baroque period, characterized by elaborate clothing and dramatic expressions. Here's a detailed breakdown of the image:

Composition: The painting is crowded with figures, all of whom are focused on the musical activity. The figures are closely packed, creating a sense of intimacy and interaction.

Characters:

- Foreground: A woman is seated at a keyboard instrument, possibly a harpsichord or organ, playing from sheet music. Her eyes are downcast, suggesting concentration.

- Middle Ground: Several figures are gathered around the keyboard player. One man appears to be singing, holding a sheet of music. Another man is playing a violin, while others are looking at the sheet music or engaged in conversation.

- Background: Additional figures are visible, some of whom are also singing or playing instruments. The expressions and gestures of the figures convey a sense of lively interaction and enjoyment.

Clothing: The attire of the figures is elaborate and ornate, typical of the Baroque period, with rich fabrics, lace, and decorative elements.

Setting: The setting appears to be indoors, possibly in a grand room or hall, suggested by the architectural elements visible in the background.

Mood: The overall mood of the painting is one of joy and camaraderie, with the figures appearing to be deeply engaged in the music and each other's company.

This painting captures a moment of musical performance and social interaction, highlighting the importance of music and communal activities in the Baroque era.